We can perform digital processing perfect to within any arbitrary specification.

Who is "we?" If you mean a PC software developer with gigahertz processors, sure. You can do what you like. But if you are a DAC chip designer – the topic we are discussing – every gate costs money and you are not going for broke as far as digital processing resolution goes. More below.

It's incorrect to call oversampling or resampling 'interpolation', as no interpolation is happening.

Of course there is interpolation happening. Hell would break loose if it were not. Even a simple Google search would show you tons of hits on the relevance of this term and the fundamental role it plays in many DACs:

http://www.analog.com/static/imported-files/tutorials/MT-017.pdf

"OVERSAMPLING INTERPOLATING DACS

The basic concept of an oversampling/interpolating DAC is shown in Figure 2. The N-bit words of input data are received at a rate of fc. The digital interpolation filter is clocked at an oversampling frequency of Kfc, and inserts the extra data points. The effects on the output frequency spectrum are shown in Figure 2. In the Nyquist case (A), the requirements on the analog anti-imaging filter can be quite severe. By oversampling and interpolating, the requirements on the filter are greatly relaxed as shown in (B)."

http://en.wikipedia.org/wiki/Digital-to-analog_converter

"Oversampling DACs or interpolating DACs such as the delta-sigma DAC, use a pulse density conversion technique."

http://www.eetimes.com/electrical-e...erpolation-Filters-for-Oversampled-Audio-DACs

"Most audio DACs are oversampled devices requiring an interpolation filter prior to its noise shaped requantization."

http://www.eetimes.com/design/signa...DSP-part-2-Interpolating-and-sigma-delta-DACs

“In a DAC-based system (such as DDS), the concept of interpolation can be used in a similar manner. This concept is common in digital audio CD players, where the basic update rate of the data from the CD is about 44 kSPS. "Zeros" are inserted into the parallel data, thereby increasing the effective update rate to four times, eight times, or 16 times the fundamental throughput rate. The 4×, 8×, or 16× data stream is passed through a digital interpolation filter, which generates the extra data points.”
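The zero-insertion and filtering steps in that quote can be sketched in a few lines of Python. This is only a toy illustration: the function names are made up for this post, and the triangular filter (which amounts to linear interpolation) is an assumption chosen to keep the example short. Real DAC chips use much longer low-pass FIR filters.

```python
def zero_stuff(samples, k):
    """Insert k-1 zeros after every input sample (rate goes up by k)."""
    out = []
    for s in samples:
        out.append(s)
        out.extend([0.0] * (k - 1))
    return out


def fir_filter(signal, taps):
    """Direct-form FIR convolution, output trimmed to the input length."""
    delay = (len(taps) - 1) // 2  # group delay of a symmetric filter
    out = []
    for n in range(len(signal)):
        acc = 0.0
        for j, h in enumerate(taps):
            idx = n + delay - j
            if 0 <= idx < len(signal):
                acc += h * signal[idx]
        out.append(acc)
    return out


def triangular_taps(k):
    """Toy interpolation filter: a triangle of length 2k-1 (linear interpolation)."""
    return [1.0 - abs(i) / k for i in range(-(k - 1), k)]


samples = [0.0, 4.0, 8.0, 4.0]          # data at the original rate
stuffed = zero_stuff(samples, 4)         # 4x rate, mostly zeros
upsampled = fir_filter(stuffed, triangular_taps(4))
print(upsampled)
```

The original samples survive at every fourth position, and the filter fills in the zeros between them. That filling-in is the interpolation the references are talking about.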

http://www.essex.ac.uk/csee/research/audio_lab/malcolmspubdocs/C21 Noise shaping IOA.pdf

"The techniques describe in section 2 can also be used in digital to analog conversion. However, in this case the Nyquest samples must first be converted to a higher sampling rate using a process of interpolation, whereby noise shaping can then be used to reduce the sample amplitude resolution, hence removing redundancy, by relocating and requantisation of noise into the oversampled signal space."

So not only is the term correct, it is critical to the function of such oversampling DACs.

Leaving the terminology debate aside, 'implementation constraints' itself implies that these constraints are somehow of damning practical concern. Once you're 160dB-180dB deep before even resorting to floating point, I should think anyone's requirements for a DAC have been met.

I think you are confusing a DAC *chip* with a DAC device or a PC software implementation of resampling. They are not at all the same thing. We were discussing DAC chips. High-volume DACs sell for cents rather than dollars and have severe manufacturing cost constraints. They use hardwired multiply/add circuits purpose-built to have just enough precision to sit below the spec the designers want to achieve for the DAC, and no more. Don't confuse them with what goes on in a PC processor, which costs $30 to $300 just for the computational engine, or even a DSP that sells for $5. The interpolator needs to cost pennies. I can give you hundreds of references for this. Here is an example:

http://www.aes.org/e-lib/browse.cfm?elib=6816

"A digital audio example will demonstrate this computational burden. Consider interpolating 16-bit digital audio data from a sample rate of 48kHz to a rate 4x faster, or 192kHz. A digital audio quality FIR filter [interpolator] operating at 4x may have length N=128 with 14-bit coefficient precision. The resulting computational load is 32 (16-bit x 14-bit) multiplies/adds at 192kHz. Of course, the computation rate must double for a stereo implementation; consequently, a digital audio quality filter interpolating by a modest 4x needs a (16-bit x 14-bit) multiplier operating at 12.288MHz - a rate that requires a dedicated, parallel, hardware multiplier in state-of-the-art CMOS technology."
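The arithmetic in that quote is easy to check. The sketch below assumes the usual polyphase reasoning: after zero insertion only every fourth sample is non-zero, so each output sample needs 128/4 = 32 multiplies. The variable names are mine, not the paper's.

```python
taps = 128              # FIR length N from the quote
factor = 4              # interpolation ratio
input_rate_hz = 48_000
channels = 2            # stereo

output_rate_hz = input_rate_hz * factor
# Only taps/factor of the inputs under the filter are non-zero per output.
macs_per_output = taps // factor
mac_rate_hz = macs_per_output * output_rate_hz * channels

print(macs_per_output, output_rate_hz, mac_rate_hz)
```

This gives 32 multiplies per output sample at 192,000 outputs per second, and 12,288,000 multiply/adds per second for stereo, which matches the 12.288 MHz figure in the quote.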

As you can see, its multiplier is fixed point, customized for 16-bit input data and 14-bit coefficients. Another example:

http://www.ee.cityu.edu.hk/~rcheung/papers/fpt03.pdf

“Figure 10 shows the basic implementation of the SDM. The a(i), b(i) and c(i) coefficients are all floating point numbers. However, the several large float-point multipliers which calculate the intermediate value between the input data and these coefficients would eventually use up all the resources of the FPGA chip. As a result, we extract the mantissa part of these coefficients and transform all the floating-point multiplication into fixed point multiplication.”
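To give a feel for what replacing a floating-point multiply with a fixed-point one means, here is a minimal sketch. It is not the paper's method: the 14-bit coefficient width is borrowed from the AES example earlier in this post, and the helper names and the example coefficient are hypothetical.

```python
COEFF_BITS = 14  # assumed coefficient width (from the AES example, not this paper)


def to_fixed(coeff, bits):
    """Quantize a coefficient in [-1, 1) to a signed integer with `bits` bits."""
    scale = 1 << (bits - 1)
    q = round(coeff * scale)
    return max(-scale, min(scale - 1, q))


def fixed_multiply(sample, coeff_q, bits):
    """Integer multiply, then shift back down to the sample's scale."""
    return (sample * coeff_q) >> (bits - 1)


coeff = 0.3141592        # hypothetical filter coefficient
sample = 12345           # a 16-bit PCM sample value

coeff_q = to_fixed(coeff, COEFF_BITS)
exact = sample * coeff
approx = fixed_multiply(sample, coeff_q, COEFF_BITS)
print(coeff_q, approx, exact)
```

The integer result lands close to the floating-point one but not exactly on it. That small error is the price of the cheaper multiplier, and it grows as the coefficient width shrinks.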

Yes, there are DAC *devices* with external DSPs to achieve better filter response, but as a rule you can’t assume super-high-resolution implementations, especially in run-of-the-mill DACs.

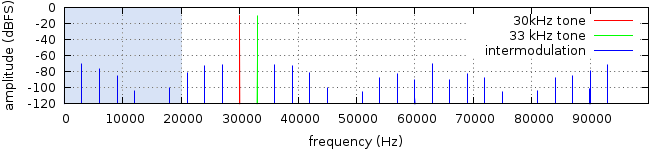

Digital resampling is practically free of distortion (again, we're talking about 180dB down, not 60dB).

If I have a DAC that has 100 dB SNR, why would I build its interpolator to go down to 180 dB? Why wouldn’t I reduce the accuracy down to my final spec and save the gates/space on chip, together with reduced power consumption? The reality is that chip designers do exactly that.
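A rough way to put numbers on this is the textbook rule of thumb for an ideal quantizer driven by a full-scale sine wave: SNR ≈ 6.02·N + 1.76 dB for an N-bit word. The sketch below uses only that formula. Actual datapath widths in a real chip depend on many other things (filter length, rounding, headroom), so treat it as an order-of-magnitude illustration.

```python
import math


def bits_for_snr(snr_db):
    """Smallest word length N with 6.02*N + 1.76 >= snr_db (ideal quantizer)."""
    return math.ceil((snr_db - 1.76) / 6.02)


for target_db in (100, 180):
    print(target_db, "dB needs about", bits_for_snr(target_db), "bits")
```

By this estimate a 100 dB target needs about 17 bits and a 180 dB target about 30 bits. Nearly doubling the word length on every multiplier is a lot of gates on a part that has to cost pennies.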

No, it does not. Taking ASYNC mode ISOCHRONOUS USB as an example, the DAC always clocks its samples to a high accuracy sample clock. The samples are taken from a buffer (FIFO) that holds a few ms of audio. If the DAC clock is a little faster than the host clock, the fill level of the FIFO drops slowly and the host is requested to increase the number of bytes per packet to keep up. If the FIFO is slowly filling, the host is requested to slightly reduce packet size. There is no distortion caused by this mechanism as it's completely divorced from the DAC. It can go wrong if the FIFO underruns or overruns, but we all agree this is an unmistakable catastrophe.

Asynchronous USB and asynchronous sample rate converters are two different things. The latter is a signal processing device and was the subject of my post there. My point was that just because said module is digital does not mean it generates perfect results.