As requested by @J.R. Boisclair, I copied my post down here from the AM thread.

Even though there may be problems such as test record quality, the interface, etc., I still believe that AM software is the best tool for the cartridge setup process. I consider visual methods, such as using a zenith protractor/tool or eyeballing, far more primitive than AM. There are too many variables or, more precisely, too many assumptions.

For example, when you align zenith using a zenith tool, you think you’re all set as long as you’ve sent your cartridge to Wally beforehand and you know its zenith error. But there are multiple assumptions in this solution. Let's say your cartridge has a 2.2 degree zenith error reported by Wally.

- The first assumption is trusting that it’s measured precisely. Considering the difficulties of measuring with a microscope, I wouldn't take that for granted.

- When you try to correct it using the zenith tool, you need to align the cantilever to the 2 degree line, which is very hard to do visually. No matter what people say, it’s incredibly hard to align a cantilever parallel to a line on a mirror surface. Thinking that you aligned the cantilever exactly parallel to the respective line is the second assumption. Additionally, as far as I know you cannot align to 2.2 degrees. It's not a big deal, but you have to choose the 2 or the 2.5 degree line.

- Cantilever angle (zenith) changes according to anti-skating. If you use the Wally skater, you set anti-skating according to VTF. When you play a record, depending on the stiffness of the suspension (soft or hard), the cantilever may skew more or less, so it may no longer be parallel to the lines you set before. Expecting the cantilever's skew to match the anti-skating you set earlier is another assumption.

- One side of the suspension can be softer than the other, which is very common even if the suspension is in great condition. In that case the cantilever's skew angle will be affected by the soft side when playing a record. Again, zenith will be different from what was set visually. More assumptions.

If everything is perfect, those assumptions are OK, but nothing is perfect. That's why arc protractors are great: they get rid of assumptions by showing the actual arc that the stylus should trace. It is simple, with no assumptions. Other protractors, like the Smartractor or Feickert, rely on assumptions such as that you can perfectly land one end of the protractor over the pivot point and that perfect overhang will be set when you align the stylus to land over the dot.

When you rely on static alignment procedures based on preconceptions for a dynamic system, as the Wally solutions do (except the arc protractor), multiple assumptions are inevitable. In this zenith example, even if you accept that overhang, VTA/SRA and azimuth were set perfectly in advance, there are still problems with zenith that will arise when the record is played. On the other hand, when you use AM with a test record, you align dynamically and all factors (VTA, azimuth, anti-skating, etc.) are at play. You only need to know where to look and to learn what the numbers are telling you. I set zenith using the AM V1 test record and check with the AP test record. The track locations are different and cut at different places, but both lead you to the same zenith alignment. Even the AM V2 test record I have at hand, despite being low in quality, still gives the same zenith results as V1. That’s why I said “far-fetched” about Wally’s multi-assumption zenith solution. Those are my humble opinions.

Thank you for posting your points here, @mtemur. They are certainly worth discussion.

Of course, the WAM Engineering process has many variables, but they are of a known quantity and therefore controlled for as much as possible. I think by the end of this post you may see why they are no more numerous (actually FEWER) and far better controlled for than when using a test record and assessing the electro-mechanical output.

It would appear that your underlying claim is that the “visual-mechanical” method (WAM Engineering) of cartridge optimization has too many variables and assumptions to be as accurate as the fully electro-mechanical process used by AM and therefore it is less able to provide performance optimization (defined here as the *highest possible* level of performance from a given system, as opposed to simply an improvement with an unknown degree of unrealized performance improvement).

Fair enough. Let’s take that apart and see what’s under the hood.

The first two concerns relate to, first, whether an accurate job of measuring can be done consistently well using microscopy and, second, the fact that zenith error correction can be done no finer than the 0.5 degree discretization allowed by the WallyZenith.

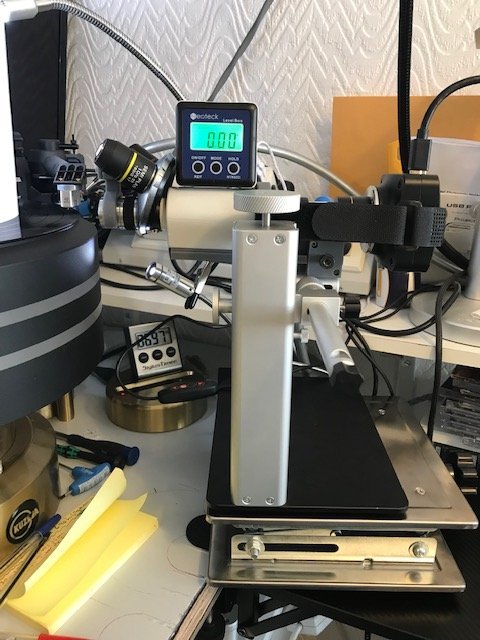

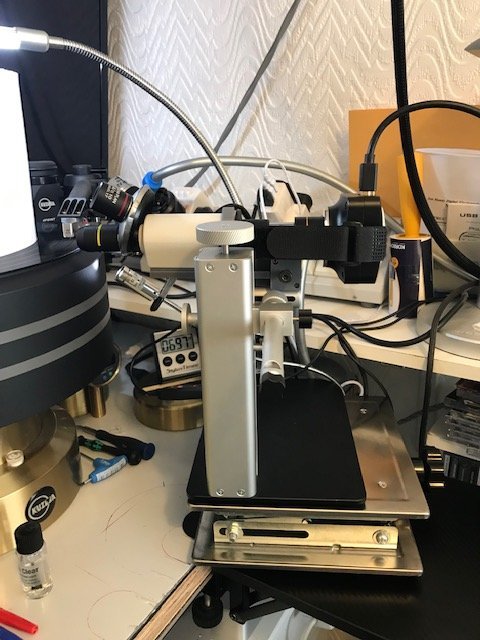

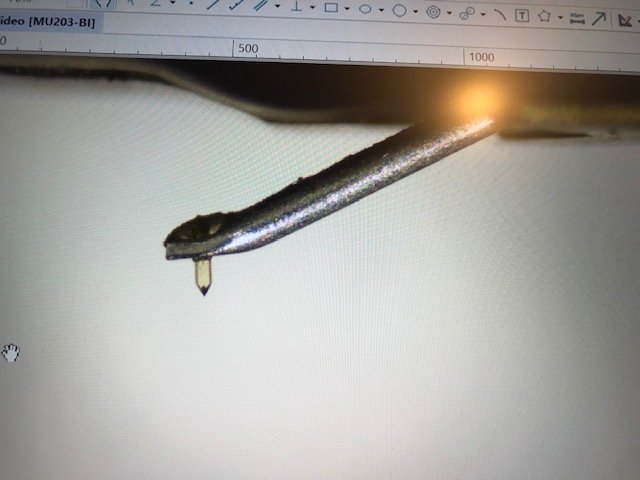

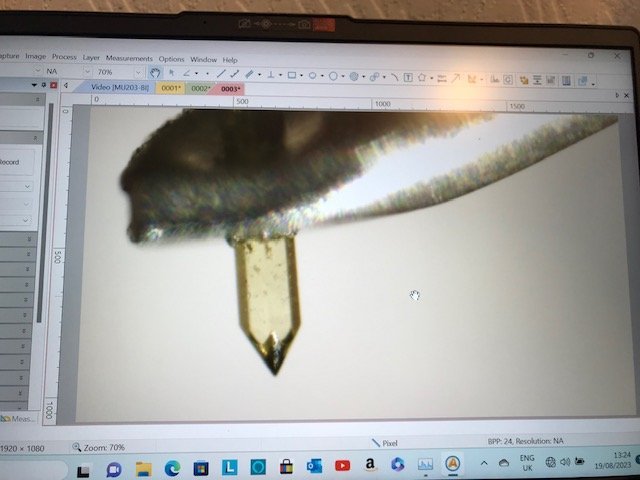

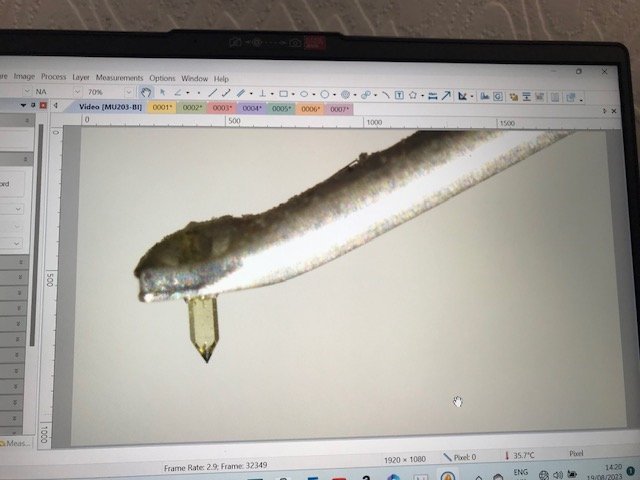

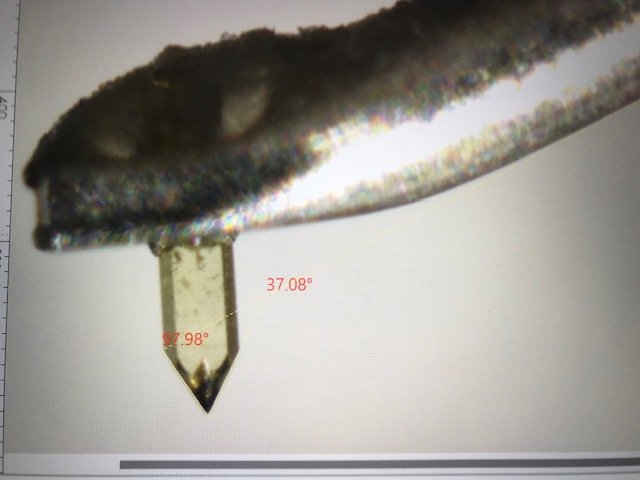

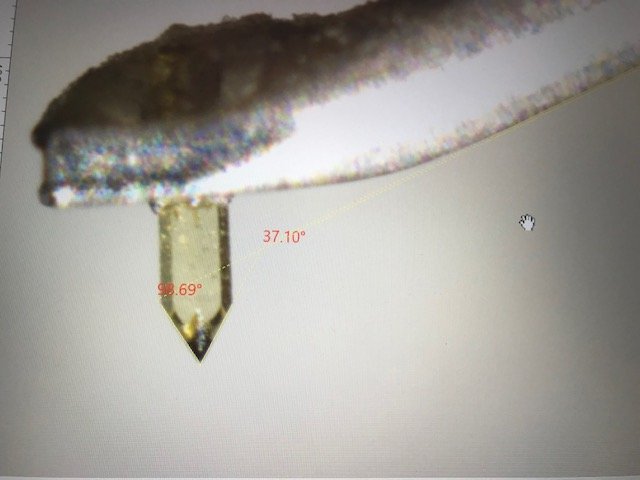

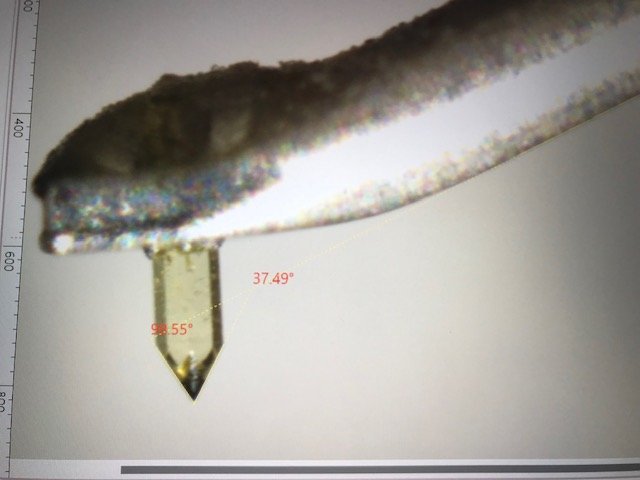

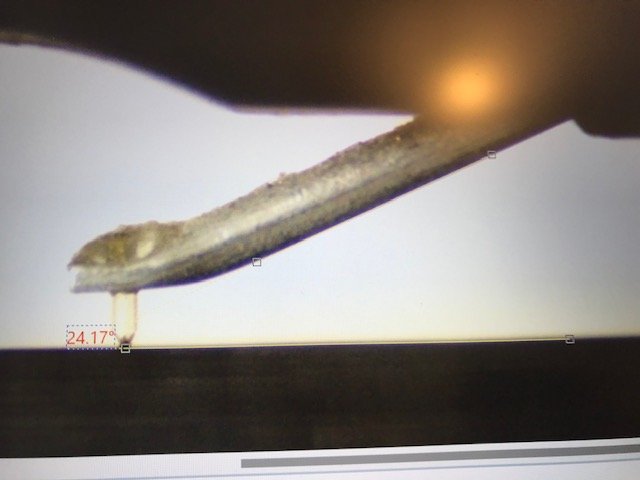

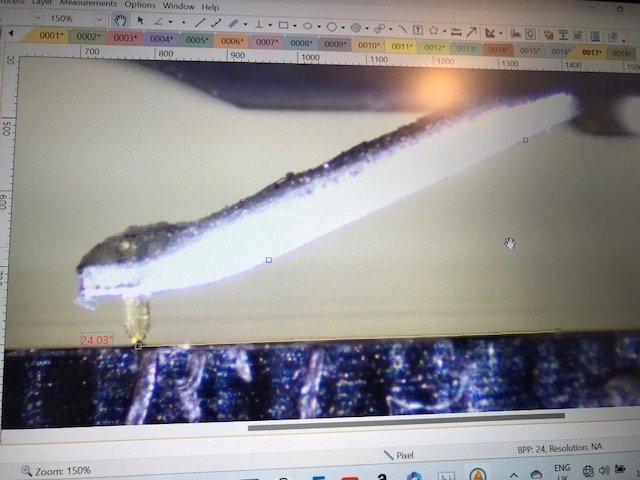

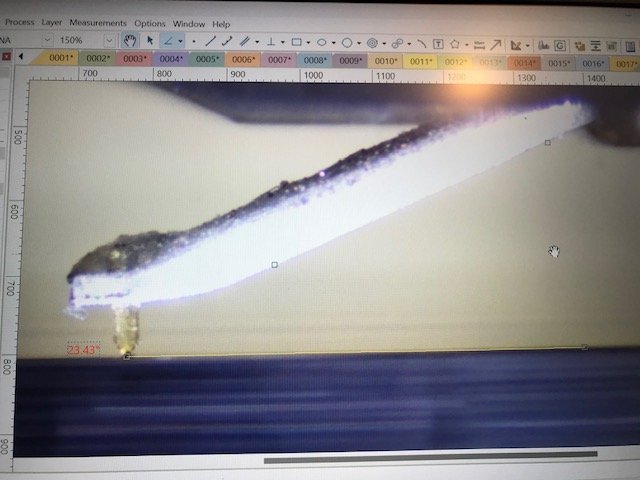

The question of measurement error in microscopy is a valid concern and something the four engineers I’ve been working with (one of them a PhD with decades of experience in theoretical and applied optics) have helped me immensely to grapple with. Some degree of measurement error is inescapable, but we have been able to determine what our limits of accuracy actually are by doing the tedious work of static and dynamic repeatability tests. These tests involve remeasuring the same thing many times WITH (“dynamic”) and WITHOUT (“static”) changing the position of the subject under test, as well as changing the settings of the microscope. We then tabulate the data captured and assess the standard deviation and limits of all measurements to determine our overall measurement accuracy. For example, following this work, we know that we are able to comfortably measure zenith error optically within +/-0.25 degrees tolerance, often better.
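To make the tabulation step concrete, here is a minimal sketch of how repeated readings could be summarized into a standard deviation and limits. The function name and the readings are made up for illustration only; they are not WAM Engineering data or their actual procedure.

```python
import statistics

def repeatability_summary(readings_deg):
    """Summarize repeated measurements of the same angle, in degrees."""
    lo, hi = min(readings_deg), max(readings_deg)
    return {
        "mean": statistics.mean(readings_deg),
        "stdev": statistics.stdev(readings_deg),  # sample standard deviation
        "min": lo,
        "max": hi,
        "half_range": (hi - lo) / 2,  # observed +/- spread around the midpoint
    }

# Hypothetical readings of one cartridge's zenith error (illustrative only)
static_runs = [2.1, 2.2, 2.3, 2.2, 2.1, 2.3, 2.2]
summary = repeatability_summary(static_runs)
print(summary)
```

Running the same summary on a "static" set and a "dynamic" set and comparing the two spreads would show how much of the variation comes from repositioning rather than from the instrument itself.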

So, how good is that? As a practical matter, it is LESS than the limits of my ability to make a change in cartridge position on the headshell to adjust for zenith error correction. The smallest gradient I am able to adjust is about 0.5 degrees. A 0.5 degree rotation is NOT something I can feel happen. I can only SEE it happen if - and only if - I have an unobstructed view to the far end of the cartridge pins. Since these are the points attached to the cartridge which are the furthest away from the point of rotation, I can just BARELY see them move when I apply pressure and then I know I’ve hit 0.5 degrees (plus or minus a couple tenths of a degree at worst). How do I know this? By observing the change on the WallyZenith and by doing electrical tests.
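For a sense of scale, the movement at the pins for a small rotation is roughly the arc length, radius times angle in radians. The sketch below assumes a 20 mm distance from the point of rotation to the pin tips; that figure is a placeholder of mine, not a measurement from this thread, so substitute your own cartridge's geometry.

```python
import math

def pin_travel_mm(radius_mm, rotation_deg):
    """Arc length swept by a point radius_mm from the rotation axis."""
    return radius_mm * math.radians(rotation_deg)

# 20.0 mm is an assumed pivot-to-pin distance, for illustration only
travel = pin_travel_mm(20.0, 0.5)
print(f"{travel:.3f} mm")
```

With that assumed radius the pins move by well under a quarter of a millimetre, which is consistent with the movement being barely visible and not something one can feel.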

YES, we do electrical tests as part of our research. In fact, we’ve put in hundreds of hours so far this year doing such tests. But doing these electro-mechanical tests involving test records has underscored how problematic the effort is.

For example, recall the static repeatability tests mentioned above: we found if we change NOTHING AT ALL with the cartridge, tonearm or playing radius and record a given track 10 times and assess for the variation in results among the dataset, we will see a few tenths of a degree shift in the data. It shouldn’t be happening, but it is. This is consistently happening, by the way, not just once in a while. From test record to test record, we see the variation in the data even though we have changed nothing at all. We are calling this the “noise floor”.

I have heard similar comments from AM users that the data will be different from repeat to repeat despite not having changed any mechanical parameter.

Remainder of my thoughts on next post...