Honestly, I'm not entirely sure why you might care, but I'll do my best to make the read worthwhile.

It is pretty much impossible to navigate the modern world without, at some point, being exposed to the fundamental computing concepts of Bits and Bytes. Sometimes these words are used metaphorically, as a way of saying "down in the details" - for instance, "I'm not interested in the Bits and Bytes". In other cases, they are used in a general technical sense, for example: digital audio bitstream, 10 Bit video, 24 Bit audio recording, 64 Bit processor, 100 GigaByte disk. And there are other words directly related to the concept of Bits: "Ones and Zeros", "Binary", "Digital". We use those concepts and words all the time, but what do they really mean?

A "Bit", in computing, is the smallest unit of information that can be represented - the smallest bit of information, get it? Bits are to computing what protons, neutrons, and electrons are to physical matter: the basic building blocks from which all more complex structures are created. Just as atomic particles can be used to "build" everything from hydrogen to Mount Everest, Bits have an essentially unlimited capacity to represent the different kinds of information, aka "data", in the computing universe.

And what is a Bit, exactly? Well, it is a piece of data with exactly two possible values (aka "states"). These values are often referred to as "zero" and "one", or "off" and "on". The "off" and "on" wording gives you a major clue as to why the Bit was chosen as the fundamental unit of data for computing. An electrical switch has two possible states: off and on. And computers, in the most reductionist sense, are mostly collections of electrical switches. Millions of them, and in modern chips, billions. That's what a "chip" is - a collection of interconnected electrical switches.

If you walk over to the nearest light switch right now and flip it on or off, you are setting the value of one "Bit" of information. How do you know what the value is? Well, either zero volts comes out of the switch, or about 120 volts comes out (assuming you're in North America). So 0 volts = Off, 120 volts = On. The light bulb is the "detector" that tells us which is which. In a modern computer, the switches are implemented as microscopic transistors, and they mostly transmit voltages to other switches rather than light bulbs, but the fundamental concept is largely the same.

You may wonder about the above analogy. What if we have a dimmer switch instead? Then the voltage can vary anywhere between 0 and 120 volts. We could, say, arbitrarily divide that range into steps of 10 volts, and our switch could then represent 13 different values (0, 10, 20, ... 120 volts) instead of two. Or we could divide it up even more finely. Wouldn't that be a more efficient use of a single switch? Yes, indeed it would, and there is a quite different approach called "analog computing" that does essentially that. The problem with analog computing is that it is too dependent on uncontrollable factors in the computing environment. To use the dimmer switch analogy: what if your air conditioner switches on and briefly drops your voltage to 100 volts? Oops, your scale is now off and your data is corrupt. What happens when the dimmer wears out, and doesn't put out the same voltage for a given switch position as when it was new? What happens when we have to transmit our dimmer signal halfway around the world on the internet - how well will our scale work then? Now multiply that uncertainty by hundreds, thousands, millions of switches - it's not going to work.

The powerful thing about "binary data" representation - ones and zeros, on and off - is that it greatly widens the margin for error when interpreting our data. In the case of our light switch, 0 volts, 1 volt, or 2 volts are all trivially interpreted as "off", and 80 volts, 140 volts, or whatever are still trivially interpreted as "on". Binary data representation is, in a favorite word of computer scientists, highly "robust": highly immune to the kinds of variations present in all real-world systems.
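Here's a toy version of the light switch and the dimmer in Python, just to make the difference concrete. The 60-volt cut-off and the function names are my own illustrative assumptions, not how any real circuit is specified; the point is only that a single threshold shrugs off a voltage sag that throws the dimmer scale off.

```python
# Toy model of the light switch vs. the dimmer, assuming a nominal 0-120 V supply.
# The threshold is an illustrative choice, not a real circuit specification.
THRESHOLD_V = 60.0  # hypothetical cut-off: at or above this reads as "on"
STEP_V = 10.0       # the dimmer scale from the text: 0, 10, 20, ... 120 V


def read_bit(voltage: float) -> int:
    """Interpret a voltage as one bit: 0 (off) or 1 (on)."""
    return 1 if voltage >= THRESHOLD_V else 0


def read_dimmer_level(voltage: float) -> int:
    """Interpret a voltage as the nearest 10-volt dimmer step (0..12 at nominal voltages)."""
    return round(voltage / STEP_V)


# Small wobbles don't matter to the bit reader.
print([read_bit(v) for v in (0.0, 1.0, 2.0, 80.0, 140.0)])  # [0, 0, 0, 1, 1]

# The sender means "fully on" (120 V: bit 1, dimmer step 12),
# but the air conditioner kicks in and the line sags to 100 V.
sagged = 100.0
print(read_bit(sagged))           # 1  -> the bit still reads correctly
print(read_dimmer_level(sagged))  # 10 -> the dimmer reading is now wrong
```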

OK, so now we've established why a Bit is a piece of data with exactly two possible values. Still, what the heck are we going to do with that? We're going to make numbers out of it, that's what. Observe this number: 111. How many objects does that number represent? One hundred and eleven, of course. Of course? Let's break it down a bit, going back to grade school math. Remembering our place-value rules, we know that the number 111 has a 1 in the "ones" column, a 1 in the "tens" column, and a 1 in the "hundreds" column. 1 times 1 plus 1 times 10 plus 1 times 100 is one hundred eleven. We use the same rule for 999, or any other combination of three digits. By using the ten possible digit values (0 through 9) in each column, combined with those simple rules, we can represent any whole number.
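If it helps to see the grade-school rule spelled out, here's a minimal Python sketch of it. The function name `decimal_value` is just made up for illustration; it walks the digits from right to left, multiplying each one by the value of its column.

```python
def decimal_value(digits: str) -> int:
    """Add up each digit times the value of its column (1, 10, 100, ...)."""
    total = 0
    # Walk from the rightmost ("ones") column leftward.
    for position, digit in enumerate(reversed(digits)):
        total += int(digit) * 10 ** position
    return total


print(decimal_value("111"))  # 1*1 + 1*10 + 1*100 = 111
print(decimal_value("999"))  # 9*1 + 9*10 + 9*100 = 999
```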

The computer doesn't play by those rules. It mocks our choice of ten possible values for each digit as an arbitrary rule originating in the unimaginative observation that the median human has ten fingers. So easy to lose track of how many fingers you've used, and how many times. Here's how the computer interprets 111: 1 times 1 plus 1 times 2 plus 1 times 4. In English, seven. Each "column" in the number is worth twice as much as the column to its right, rather than ten times as much. By simply using a different rule, called a "base-2" or "binary" number system, rather than our "base-10" system, the computer can use a sequence of bits to represent any whole number. Using 8 bits, the computer can represent the numbers 0-255; using 16 bits, 0-65535; and so on. The bigger the number you want to represent, the more bits you need, but there is no theoretical limit on how many bits you can use.
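The same right-to-left walk works for binary; only the column values change. Again, this is a small illustrative sketch (`binary_value` is a hypothetical helper, not a standard function). Python's built-in `int(text, 2)` does the same job and is included as a cross-check.

```python
def binary_value(bits: str) -> int:
    """Interpret a string of 0s and 1s as a base-2 number.

    Each column is worth twice the one to its right: 1, 2, 4, 8, ...
    """
    total = 0
    # Walk from the rightmost (1s) column leftward; column values are powers of 2.
    for position, bit in enumerate(reversed(bits)):
        total += int(bit) * 2 ** position
    return total


print(binary_value("111"))  # 1*1 + 1*2 + 1*4 = 7
print(int("111", 2))        # Python's built-in base-2 parser agrees: 7

# With n bits, the smallest value is all zeros and the largest is all ones (2**n - 1).
for n in (8, 16):
    print(n, "bits:", binary_value("0" * n), "to", binary_value("1" * n))
# 8 bits: 0 to 255
# 16 bits: 0 to 65535
```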

It may not be so obvious, but once you've got numbers, you've got pretty much everything you need to represent any kind of data you might want. Text, for instance. Computers have to do lots and lots of processing with text. Fortunately, text in any language can be described with a finite number of symbols; all you need to do is assign each character, punctuation mark, or other symbol a number. IBM computer scientists observed that the range of numbers 0-255 - eight bits - was enough to assign a number to each symbol used in common English text, and defined a table of number-to-character correspondences called EBCDIC. In EBCDIC, for instance, "a" is assigned the number 129. Today, in a global internet-connected world, text is usually encoded with Unicode, which uses much larger numbers (stored as one to four bytes, or as one or two 16-bit units) to cover the far greater number of symbols needed for languages like Chinese and Japanese. But for a long time, given the Western origins of modern computers and the limited memory available in early computers, eight bits per text character was standard. So standard, in fact, that eight bits got its own shorthand name: "Byte".
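You can poke at these number-to-character tables yourself. Python happens to ship a codec named `cp037`, which is one of several EBCDIC code pages (I'm assuming it's representative here). The snippet below also shows the ASCII/Unicode number for the same letter, and how many bytes the Chinese character 中 takes in two common Unicode encodings.

```python
# "cp037" is Python's codec for one EBCDIC code page (US/Canada).
print("a".encode("cp037")[0])  # 129: EBCDIC's number for "a"

# ASCII (and Unicode) use a different table, where "a" is 97.
print(ord("a"))  # 97

# A Chinese character, U+4E2D, needs a number far bigger than 255...
han = "\u4e2d"
print(ord(han))  # 20013

# ...so it takes more than one byte, depending on the encoding used.
print(len(han.encode("utf-8")))      # 3 bytes
print(len(han.encode("utf-16-le")))  # 2 bytes, i.e. one 16-bit unit
```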

So a Byte is just eight bits, and it represents the smallest unit of information that is actually useful to a computer user, rather than to the computer itself. As such, the Byte became the unit of measure for memory and storage in a computer. A kilobyte is 1,000 bytes (though memory sizes are traditionally counted in powers of two, making a kilobyte 1,024 bytes), a megabyte is a million bytes, and a gigabyte is a billion bytes. To give you a feeling for how far we've come, a typical IBM PC in the early 80s had 64 kilobytes of memory or less, and the original 1984 Mac had 128 kilobytes. A "starter" laptop today usually has 2 gigabytes of memory - more than 30,000 times as much as that 64-kilobyte PC!
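For the skeptics, here's the arithmetic behind that comparison, taking the 64-kilobyte figure for the early PC. One wrinkle: memory sizes are traditionally counted in powers of two (a "kilobyte" of RAM is often 1,024 bytes), so the sketch computes the ratio both ways. Either way it comes out above 30,000.

```python
# Decimal (SI) units...
KB, GB = 1_000, 1_000_000_000
# ...and the powers-of-two convention traditionally used for memory.
KIB, GIB = 2 ** 10, 2 ** 30

early_pc_kb = 64        # kilobytes in an early-80s IBM PC
starter_laptop_gb = 2   # gigabytes in a "starter" laptop

print(starter_laptop_gb * GB // (early_pc_kb * KB))    # 31250 (decimal units)
print(starter_laptop_gb * GIB // (early_pc_kb * KIB))  # 32768 (binary units)
```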

There are many other creative ways that numbers can be used to represent data - audio, video, pictures, and other physical and virtual realities. We don't have space in this already long post to go into all of them - we'll get to some later. But I want to end by addressing a philosophical objection which often arises in discussions of using numbers or digits or bits to represent aspects of the "real world". This objection is, of course, well known to audiophiles. Roughly stated, the objection is that "chopping up" reality into bits is an inherently flawed, imprecise process, and therefore inferior to older, analog-style representations of reality. If we listen to a digital recording and hear things we don't like compared to analog recordings, or look at a digital photograph and see things we don't like compared to film, we might naively attribute those things to the "discontinuous" nature of digital representation compared to the "continuous" representation of the older analog medium. The truth is, reality itself is discontinuous, and so are any representations of it. Analog tape recordings are stored in tiny magnetic particles, and vinyl records are made of very large (by atomic standards) chains of molecules. Pictures and film are composed of small particles of pigmented chemicals. And so on. There is nothing "unnatural" about breaking down reality into bits; in fact, it is the foundation of that magnificent computer we call the Universe.
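To see what "chopping up" a smooth signal into bits actually costs, here's a toy quantizer: it snaps each sample of a sine wave to the nearest of 2^n evenly spaced levels and reports the worst error. This is an illustrative sketch only; real audio converters also deal with sampling rate, filtering, and dither, none of which is modeled here. The takeaway is that each extra bit roughly halves the step size, so the error can be made as small as we like.

```python
import math


def quantize(x: float, bits: int) -> float:
    """Snap x (assumed to lie in -1.0..1.0) to the nearest of 2**bits evenly spaced levels."""
    levels = 2 ** bits
    step = 2.0 / (levels - 1)           # spacing between adjacent levels
    index = round((x + 1.0) / step)     # nearest level number, 0..levels-1
    return -1.0 + index * step


# One cycle of a sine wave, sampled at 48 evenly spaced points.
samples = [math.sin(2 * math.pi * k / 48) for k in range(48)]

for bits in (4, 8, 16, 24):
    worst = max(abs(s - quantize(s, bits)) for s in samples)
    # The worst error can never exceed half a step, i.e. 1 / (2**bits - 1).
    print(f"{bits:2d} bits: worst error {worst:.2e}")
```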

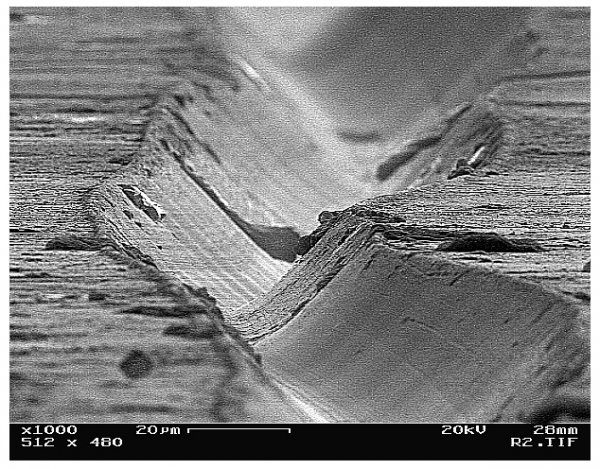

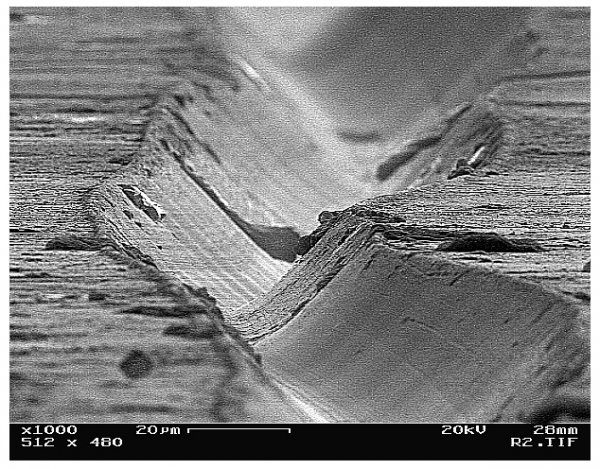

To put the final meta stamp on this post, I leave you with a digital visual representation of an analog vinyl record.
