Hello everyone. This is an in-depth article I wrote for the latest issue of Widescreen Review magazine. It takes a deep dive into the myriad technologies and features coming with next-generation video delivery formats in general, and with the Ultra-HD version of Blu-ray Disc, slated for release this holiday season, in particular. You are going to run into one or more of these technologies when you shop for your next television, and you will read about them frequently in the news. Yet there is next to no real explanation of what they are, hence this article. The write-up is getting very positive reviews in the industry, so I thought I would share it here. As always, I appreciate all feedback and questions.

-----

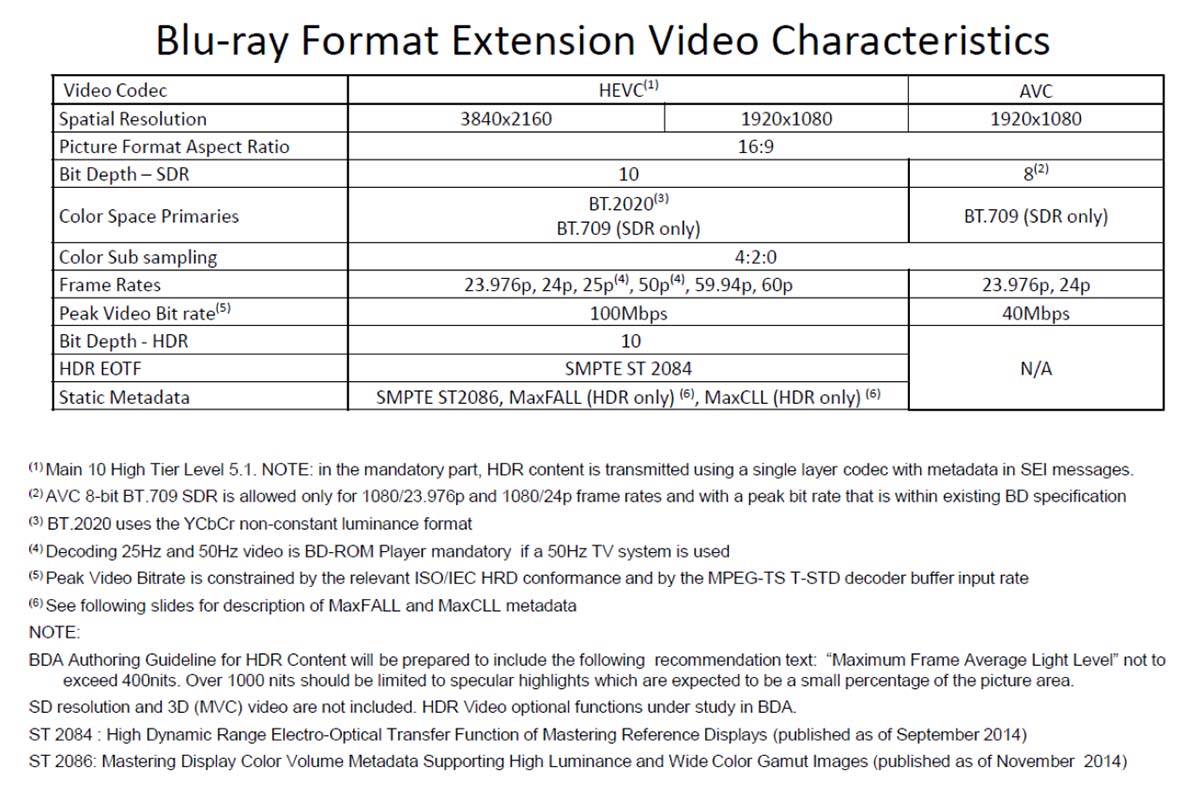

Support for “4K” resolution on Blu-ray Disc (BD) has been of special interest to home theater enthusiasts since the introduction of 4K projectors and displays. The hope was that the Blu-ray Disc Association (BDA) would update its specifications not only to increase the resolution to match, but also to add other picture quality enhancements. Those wishes were answered in September of 2014, when the BDA announced that it was crafting such a standard and provided a list of anticipated features. Ratification of the specification is due by mid-year, with products in time for the 2015 Christmas selling season.

This article is based on the latest information available, some of which may be subject to last-minute change by the BDA before licensing commences.

Instead of just listing the features, I am providing a fairly deep dive into the current state of affairs and how each change will impact perceived quality. The list of references at the end of the article will provide you with the next level of information. Most if not all of them should be available online.

As you will see, all the changes are in the video domain. Audio is left as is. And there is one deletion: there is no mention of 3-D support in the UHD Blu-ray feature list! If you want 3-D, you need to stick with 1080p using the current specification.

Backward compatibility is assured by requiring UHD Blu-ray players to play existing Blu-ray discs. And no, there is no firmware upgrade path that lets an existing Blu-ray player play UHD Blu-ray discs. Almost every aspect of the new spec requires new hardware, which obviously cannot be added to your current machine through new programming.

Resolution and Frame Rate

At the risk of stating the obvious, resolution is increased from a maximum of 1920x1080 (horizontal x vertical) in the current Blu-ray specification to twice as many pixels in each dimension, or 3840x2160. This is the official consumer “4K” resolution, better known as UHD.

There is much debate as to the value of resolution higher than 1080p. The argument is that viewers are probably sitting too far from their current sets to fully appreciate even 1080p, let alone UHD. That may be so. Still, you can opt for a larger set as I did when I bought my UHD TV, jumping to 65 inches despite our rather short viewing distance. But even if you don’t, I think it still makes sense to get all the fidelity that may exist in the production of the content. I don’t want to re-buy my content library just because one day I decide to get a larger display or sit closer than I did before.

A typical rule of thumb is that you need to sit at 1.4 to 1.5 times the height of the display (or closer) to see the full resolution of UHD. In my opinion, you are better off figuring this out for yourself. Get a resolution chart that goes up to UHD resolution, put it on a thumb drive and take it to your favorite UHD TV retailer. Display the image there, start by standing a short distance in front of the TV, and focus on the image, paying attention to the separation of the pixels. Now keep stepping back until the pixels merge together and you can no longer resolve them. If you sit at that distance or farther, UHD is of no value to you on that size set.
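To put the rule of thumb into numbers, here is a small Python sketch that turns a screen’s diagonal into its height (assuming a 16:9 panel) and applies the 1.4x to 1.5x multipliers quoted above. The function names are illustrative only, and the output is just the rule of thumb, not a substitute for the resolution-chart test.

```python
import math

def screen_height(diagonal, aspect_w=16, aspect_h=9):
    """Height of a screen, in the same units as its diagonal, for the given aspect ratio."""
    return diagonal * aspect_h / math.hypot(aspect_w, aspect_h)

def uhd_seating_range(diagonal):
    """Rule-of-thumb distances (1.4x to 1.5x screen height) for resolving UHD detail."""
    height = screen_height(diagonal)
    return 1.4 * height, 1.5 * height

near, far = uhd_seating_range(65)
print(f"65-inch 16:9 set: sit no farther than roughly {near:.0f} to {far:.0f} inches away")
```

For the 65-inch set mentioned above, the screen is about 31.9 inches tall, which puts the rule-of-thumb seating distance at roughly 45 to 48 inches.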

The frame rate story is pretty straightforward: the new spec allows frame rates all the way up to 60 frames per second.

Physical Layer

Current Blu-ray discs can have one or two layers, each holding 25 Gigabytes, for a maximum of 50 Gigabytes. Data is stored using “lands and pits” (binary values 1 and 0 respectively), which represent the “optical bits.” The lands reflect the laser light and the pits do not.

The physical characteristics of the current generation (and, for posterity’s sake, those of HD DVD) are shown in Figure 1.

In Blu-ray, the binary digital data to be recorded on the disc (stamped, in the case of pre-recorded media) is first converted to a new series of bits using a process called “17PP” modulation. Why don’t we store the bits as is? There are a number of reasons. One, for example, is to disallow long chains of zeros or ones. Think of the dashed lines on the freeway separating the lanes. Should the distance between segments become very large, you may no longer know where one lane ends and the next one starts. Same here. By forcing regular transitions from one to zero or vice versa, we always know our position on the optical disc.
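To make the “no long chains” idea concrete, the Python sketch below checks whether a sequence of pits and lands respects run-length limits. It assumes the commonly cited limits for Blu-ray’s 17PP code, where every pit or land must span at least 2 and at most 8 channel bits (2T to 8T). The helper names are hypothetical, and the sketch only checks the constraint; it does not perform 17PP modulation itself.

```python
def run_lengths(marks):
    """Lengths of consecutive runs of identical values (e.g. 1 = land, 0 = pit)."""
    if not marks:
        return []
    runs, count = [], 1
    for prev, cur in zip(marks, marks[1:]):
        if cur == prev:
            count += 1
        else:
            runs.append(count)
            count = 1
    runs.append(count)
    return runs

def within_run_limits(marks, shortest=2, longest=8):
    """True if every pit and land spans between `shortest` and `longest` channel bits.

    The 2..8 defaults are the assumed 2T..8T limits of 17PP.
    """
    return all(shortest <= r <= longest for r in run_lengths(marks))

print(within_run_limits([1, 1, 0, 0, 0, 1, 1]))  # True: runs of 2, 3, 2
print(within_run_limits([1, 0, 0, 1, 1]))        # False: a 1-bit land is too short to read
print(within_run_limits([0] * 12))               # False: a 12-bit pit gives no timing reference
```

The upper limit is the “dashed line” from the freeway analogy: a run that is too long leaves the player without a transition to keep its place.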

Why did I just explain the physical structure and modulation? Because those are the likely techniques behind the increase in per-layer capacity to 33 Gigabytes in UHD Blu-ray. The BDA has not disclosed how it managed that, but common techniques involve improving the efficiency of the modulation so as to require fewer optical bits to store the same digital data, and packing the lands and pits closer together so as to increase the per-track density. Combine the two and it is not hard to get to 33 Gigabytes from a starting point of 25.

UHD Blu-ray discs have a minimum of two layers, giving us 66 Gigabytes. A third layer is allowed, bringing the total to 100 Gigabytes. No, I don’t know where they got the extra Gigabyte to go from 99 to 100. That is how the industry has announced the two capacities, so we just have to go along with it. Either the 33 is really 33.33 or the 100 is really 99. Either way, the difference is not worth losing sleep over.

Figure 2 shows the specific steps for creating a disc of up to three layers using the new BluLine III system from German replication equipment maker Singulus. The capability was announced in the fall of 2013. The slide in Figure 2 is from the company’s 2014 presentation (one of the few bits of information available on the new three-layer UHD disc process). To make the information easier to understand, I have grouped the process steps for each major component in colored boxes and added an English translation on the right.

Could you add more layers and, say, create a 200 Gigabyte disc? In theory, sure. You can have a hundred layers if all you have to do is draw them up on paper. The reality, though, is that yield is negatively impacted as you add layers, due to contamination, unevenness of the additional layers, etc. Remember that the base/lower layers must be read through the fog of the upper ones.

Even if there were no incremental degradation from adding layers, your yields would keep going down as you add them. Take the simple case of each layer having a yield of 90% with no interference between layers. To compute the overall yield, we multiply the per-layer yields together: 0.9*0.9*0.9 = 0.729, or about 73%. Imagine if you kept going. You would quickly find yourself in a situation where the yields become too low for the process to be economical. BDA companies likely performed such an analysis and decided that three layers was as far as they dared go.
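The compounding is easy to tabulate. This Python sketch assumes, as the text does, that layers fail independently and that a disc is good only if every layer is good. The 90% per-layer figure is the illustrative number from the example, not measured replication data.

```python
def overall_yield(per_layer_yield, layers):
    """Overall yield when every layer must pass and layers fail independently."""
    return per_layer_yield ** layers

for layers in range(1, 7):
    print(f"{layers} layer(s): {overall_yield(0.9, layers):.0%}")
```

With a 90% per-layer yield, three layers land at 73% and six layers fall to about 53%, so roughly half of every batch would be scrapped.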

So 100 Gigabytes is it but is that enough? You will have to wait for that answer until I cover some other aspects of the specification later in the article.

Besides yield, the other consideration is the so-called “cycle time,” or how long it takes to produce a single disc. Singulus’ current BD replication machine (BluLine II) produces single-layer discs in 4 seconds and dual-layer ones in 4.5 seconds. Just extrapolating, triple-layer discs should take on the order of 5 seconds to produce. For reference, Singulus rates its DVD replication machine at 2.3 seconds. To get the same production rate on triple-layer UHD Blu-ray discs (forgetting about yield differences for now), you would need roughly two machines (5 / 2.3 ≈ 2.2). Blu-ray discs have always cost more than DVDs, and three-layer UHD Blu-ray discs will cost even more. How much that translates into retail cost is hard to compute at this time without yield data.
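The throughput comparison is simple arithmetic. This sketch uses the cycle times quoted above. The 5-second triple-layer figure is the article’s extrapolation, not a published Singulus specification, and the calculation ignores yield, changeovers and downtime.

```python
def discs_per_hour(cycle_time_s):
    """Discs one machine can stamp per hour at a given cycle time (ignoring yield and downtime)."""
    return 3600 / cycle_time_s

DVD_CYCLE_S = 2.3          # Singulus DVD line, as quoted in the text
UHD_TRIPLE_CYCLE_S = 5.0   # extrapolated triple-layer figure, not a published spec

dvd_rate = discs_per_hour(DVD_CYCLE_S)
uhd_rate = discs_per_hour(UHD_TRIPLE_CYCLE_S)
print(f"DVD: {dvd_rate:.0f}/hour, triple-layer UHD: {uhd_rate:.0f}/hour")
print(f"UHD machines per DVD machine: {dvd_rate / uhd_rate:.1f}")
```

One DVD line turns out about 1,565 discs an hour against 720 for the triple-layer process, hence the “roughly two machines” estimate.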

Video Sampling

The current Blu-ray format, like DVD before it and every other form of consumer video delivery, uses “4:2:0” color sampling (see http://www.madronadigital.com/Library/Video%20Basics.html). Briefly, the three numbers separated by colons give the relative sampling rates of the black and white aspect of each pixel, called Luminance/Luma, and of the two color difference components, called Chrominance/Chroma. The maximum value is 4. As such, 4:4:4 means that all components are treated the same. Such is the case with RGB computer video, where you have an equal number of red, green and blue subpixels.

The next step down is 4:2:2, which means that the color resolution is half that of black and white (horizontally). 4:2:0 cuts the color resolution in half yet again, this time vertically. This has been considered a proper compromise, given that the eye has lower resolution for color than it does for black and white.

Note that your display has full color resolution in that it has an equal number of red, green and blue subpixels. As such, when we play 4:2:0 video, for example, we have to “interpolate” (generate) the missing color data. Interpolation, however, does not get back what we discarded at encoding. It simply increases the number of color samples, not their fidelity.

4:2:0 is never used in video production. 4:2:2 is the minimum and most common format (4:4:4 is used when we need to separate colors, as in green screening/the weatherman effect). This should tell you that there is a fidelity loss when we go below 4:2:2, and indeed there is. Display SMPTE color bars (Figure 3) from a calibration disc such as Joe Kane’s Video Essentials on your TV and pay attention to the lines separating the color bars. You will likely see them as softer than the separation between the black and white patches.

SMPTE color bars are computer generated, so their transition from one color to another is instantaneous and by definition as sharp as it can possibly be. The softness is therefore due to 4:2:0 encoding. Black and white (luma) content passes through as is, and hence it maintains its single-pixel transitions from one shade of brightness to another.
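A one-dimensional toy model shows where the softening comes from. This Python sketch makes simplifying assumptions: chroma is subsampled by averaging neighboring pairs (the horizontal half of 4:2:0), the player interpolates by simple repetition, and the chroma values are arbitrary units rather than real Cb/Cr codes. Real encoders and players use better filters, but the discarded detail is not recovered either way.

```python
def subsample_pairs(chroma_row):
    """Average each pair of neighboring chroma samples (the horizontal half of 4:2:0).

    Assumes an even number of samples.
    """
    return [(chroma_row[i] + chroma_row[i + 1]) / 2 for i in range(0, len(chroma_row), 2)]

def upsample_nearest(chroma_row):
    """Interpolate back to full width by repeating each sample (nearest neighbor)."""
    return [v for v in chroma_row for _ in range(2)]

chroma_row = [0, 0, 0, 100, 100, 100]   # a sharp color edge between the 3rd and 4th pixel
print(upsample_nearest(subsample_pairs(chroma_row)))  # [0.0, 0.0, 50.0, 50.0, 100.0, 100.0]
```

The one-pixel color edge comes back as a two-step ramp with an in-between value that never existed in the source, while the luma row, stored at full resolution, would keep its one-pixel edge.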

Up until now, we had no choice but to live with 4:2:0 video since, as I mentioned, it encompasses the entire world of consumer video delivery. Whether it is US digital TV broadcast, cable, satellite, Internet delivery, Blu-ray or DVD, this was the sampling ratio of Luma to Chroma. But now we have more choices in the form of UHD Blu-ray. It supports 4:2:2 sampling and, if the preliminary information is correct, even 4:4:4.

Note that depending on the type of camera used to capture the video, the full color resolution may not be there. An example is the common Bayer pattern sensor, where a technique similar to chroma subsampling in video encoding is used to reduce the required number of subpixels. While such a camera may produce Luma and Chroma samples at a 4:4:4 rate, the actual color resolution is lower than that of black and white, so encoding it at 4:4:4 may be wasteful.

Furthermore, let’s remember that higher color sampling sharply increases the total amount of data we need to encode, on top of the increased UHD resolution. If the video codec is not given sufficient bandwidth to create a visually transparent image, we will most definitely create artifacts in the Luma channel, which will take us backward, not forward, in overall image fidelity.

So higher color sampling rates should be used judiciously. A 3-D computer animation such as Despicable Me will benefit from them due to the very sharp edges created by the software (sharper than any camera could capture), while the picture is noise-free and hence easier to encode. A grainy, dark movie, on the other hand, will not, as it likely benefits from the noise reduction that the color filtering at lower sampling rates provides. Enough salt makes a meal taste good, but too much makes it unpalatable.

Pixel Depth

With CD audio, each sample takes 16 bits. With video, we have to allocate bits to each subpixel: Luminance gets its bits and each Chroma component gets its own. Once again, the world of consumer delivery has standardized on one value: 8 bits per subpixel. So if our encoding were 4:4:4, we would have three 8-bit components for a total of 24 bits per video pixel. For 4:2:0, each component still has 8 bits, but we have far fewer samples for color. If we averaged them across every pixel, we would still have 8 bits for Luma, but the color would average out to 4 bits for the pair of Chroma samples. This means our total is just 12 bits per pixel. So 4:2:0 sampling cuts the raw/uncompressed data rate in half relative to 4:4:4 encoding.
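The averaging above generalizes to any J:a:b scheme. This Python sketch uses the usual reading of the notation: a block J pixels wide and two rows tall, with `a` chroma samples in the first row and `b` in the second for each of the two chroma components. The function name is illustrative.

```python
from fractions import Fraction

def bits_per_pixel(j, a, b, bit_depth):
    """Average bits per pixel for J:a:b chroma subsampling at a given bit depth.

    J:a:b describes a block J pixels wide and 2 rows tall: `a` chroma samples in the
    first row and `b` in the second, for each of the two chroma components.
    """
    luma_samples = 2 * j            # one luma sample per pixel
    chroma_samples = 2 * (a + b)    # Cb and Cr
    return Fraction(luma_samples + chroma_samples, 2 * j) * bit_depth

for scheme in [(4, 4, 4), (4, 2, 2), (4, 2, 0)]:
    label = ":".join(map(str, scheme))
    print(label, "8-bit:", bits_per_pixel(*scheme, 8), "bits/pixel")
```

This reproduces the 24 and 12 bits per pixel from the text, with 16 for 4:2:2. At 10 bits the same function gives 20 bits per pixel for 4:2:2 and 30 for 4:4:4, which are the figures that matter for UHD Blu-ray.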

8 bits is a computer “byte,” which has a range of values from 0 to 255. But the full range is not used in video. Instead, the valid range for video luma is 16 to 235 (chroma uses 16 to 240): 16 is black and 235 is white. There are historical and technical justifications for this, the best known being the ability to see “blacker than black” and “whiter than white” in diagnostic patterns (the so-called PLUGE pattern for setting your display’s black level is one example).

As an aside, this is why it is important to tell your TV whether the feed is from a computer or a video source. Computers operate in the 0 to 255 range, so setting your TV’s input to computer mode while feeding it video will cause the display to treat level 16 as a shade of gray (16 points above zero) rather than black. The result is washed-out images. So be sure to set your TV to the right input encoding. Sadly, the terminology here is not standardized, but you should be able to figure it out from the setup menu and by looking at the image.
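The expansion a display performs in video mode is a linear stretch of 16 to 235 onto 0 to 255. This Python sketch covers 8-bit luma only, and for simplicity it clips codes outside the nominal range, although real displays may be set to pass “blacker than black” through. The function name is illustrative.

```python
def limited_to_full(code):
    """Expand an 8-bit limited-range (16-235) luma code to full range (0-255).

    Codes below 16 and above 235 are simply clipped in this simplified sketch.
    """
    full = (code - 16) * 255 / 219
    return max(0, min(255, round(full)))

for code in (16, 126, 235):
    print(code, "->", limited_to_full(code))

# Without the expansion (computer mode), video black stays at code 16:
print(f"Unexpanded black: {16 / 255:.0%} of the way to white")
```

In video mode, code 16 becomes true black and 235 becomes peak white. In computer mode, black is left at about 6% of the way to white, which is the washed-out look described above.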

Back to bit depth: while 8 bits is also very common at the production and capture stage, the highest fidelity calls for 10 bits, or even 12, 14 or 16 bits. Again, remember that these are the bit depths for each subpixel in video. A 4:4:4 encoding at 16 bits means you actually have 3x16, or 48 bits/pixel. With our current distribution formats, the bit depth must be reduced to 8 bits per subpixel.

You might think that going from a high bit depth to a low one simply requires discarding the extra resolution in the low-order bits of each sample. But that would be unwise. Doing so will likely cause banding/contouring in video as we jump from one 8-bit value to the next, rather than the smoother gradations that existed in the higher bit depth numbers.

The right way to perform the conversion is to judiciously add noise before truncating the extra low-order bits. In doing so, we replace banding/contouring with random noise, which is much less noticeable to the eye. This is called dithering.
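A tiny experiment shows why dither beats plain truncation. This Python sketch takes a flat 10-bit patch whose true level sits a quarter of the way between two 8-bit codes. It compares dropping the two low-order bits with adding uniform noise of plus or minus half an 8-bit step before rounding. This simple rectangular dither is an assumption for illustration; production tools may use shaped or differently distributed noise.

```python
import random

def truncate_to_8bit(sample_10bit):
    # Discard the two low-order bits: every 10-bit value from 512 to 515 becomes 128.
    return sample_10bit >> 2

def dither_to_8bit(sample_10bit, rng):
    # Scale to 8-bit units, add uniform noise of +/- half an 8-bit step, then round.
    noisy = sample_10bit / 4 + rng.uniform(-0.5, 0.5)
    return max(0, min(255, round(noisy)))

rng = random.Random(0)
flat_patch = [513] * 10_000   # a smooth area whose true 8-bit level is 128.25
truncated = [truncate_to_8bit(s) for s in flat_patch]
dithered = [dither_to_8bit(s, rng) for s in flat_patch]
print(sum(truncated) / len(truncated))  # 128.0: the quarter-step detail is gone
print(sum(dithered) / len(dithered))    # close to 128.25: a mix of 128s and 129s
```

Truncation maps every value from 512 to 515 onto the same code, which is what produces visible bands across a gentle gradient. The dithered patch averages back to the true level, at the price of pixel-to-pixel noise, and that noise is exactly what makes the codec’s job harder, as the next paragraph explains.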

There is no free lunch with dither in video, though, because of the mandatory use of video compression (unlike audio). Video compression gains its stunning data reduction ratios by taking advantage of redundancies within an image and between video frames. Random noise interferes with this process by creating constantly varying pixel values. The upshot is that dither causes compression efficiency to go down, possibly resulting in more compression artifacts. In some sense, then, starting with a bit depth higher than 8 may be a bad thing!

A better solution, and one that is adopted in UHD Blu-ray, is to support 10 bits per sample. That means we can pass through the most common high-fidelity bit depth in video as is, without conversion and hence without dithering to 8 bits. We would still need to add dither if the source bit depth is higher than 10, but the level of noise added is lower and compression efficiency is less compromised.

Comparing 10-bit encoding to a 10-bit source dithered to 8 bits, the overall bit rate may work out to be about the same: the former has more data, but the latter has more noise. What this means is that we get 10 bits “almost for free!” This has been one of my pet complaints about Blu-ray since the start. 10-bit support should have been there from day one.

Video Compression

Now that we know the bits and bytes, let’s perform a fun exercise and figure out how much space our movie requires prior to video compression. Let’s assume a 90-minute movie encoded in the current Blu-ray 1080p format of 8-bit/4:2:0, and two versions of UHD using 10-bit/4:2:2 and 10-bit/4:4:4. The results are in Table 1. As expected, there is a huge difference between the current Blu-ray spec and UHD Blu-ray. The former needs “only” 0.4 Terabytes of storage, whereas the two versions of UHD need 2.7 and 4.0 Terabytes.

The story becomes more interesting when we compare these numbers to the amount of storage available in each specification. Let’s use the maximum of 50 Gigabytes for current Blu-ray and 100 Gigabytes for UHD. For Blu-ray, we keep about 12% of the data and throw away 88%. For the two versions of UHD, we are only allowed to keep 2 to 4% of the total and must throw away a whopping 96% to 98% of the original source bits! And this assumes there is no audio. Houston, we (may) have a problem!
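The numbers behind Table 1 and the percentages above can be reproduced in a few lines of Python. The article does not state a frame rate; this sketch assumes 24 frames per second because that assumption reproduces the published 0.4, 2.7 and 4.0 Terabyte figures. It also uses decimal units (1 TB = 1,000 GB) and counts video only.

```python
from fractions import Fraction

# Average samples per pixel for each chroma format (1 luma + 2 chroma components).
SAMPLES_PER_PIXEL = {"4:2:0": Fraction(3, 2), "4:2:2": Fraction(2), "4:4:4": Fraction(3)}

def uncompressed_tb(width, height, chroma, bit_depth, minutes=90, fps=24):
    """Raw video size in decimal terabytes (10**12 bytes), video only."""
    bits_per_frame = width * height * SAMPLES_PER_PIXEL[chroma] * bit_depth
    total_bits = bits_per_frame * fps * minutes * 60
    return float(total_bits / 8 / 10**12)

formats = [
    ("Blu-ray 1080p 8-bit 4:2:0", 1920, 1080, "4:2:0", 8, 50),
    ("UHD 10-bit 4:2:2", 3840, 2160, "4:2:2", 10, 100),
    ("UHD 10-bit 4:4:4", 3840, 2160, "4:4:4", 10, 100),
]
for name, w, h, chroma, depth, disc_gb in formats:
    raw_tb = uncompressed_tb(w, h, chroma, depth)
    kept = disc_gb / (raw_tb * 1000)  # disc capacity as a fraction of the raw data
    print(f"{name}: {raw_tb:.1f} TB raw, disc holds {kept:.1%} of it")
```

This prints 12.4% for Blu-ray, 3.7% for UHD 4:2:2 and 2.5% for UHD 4:4:4, matching the “2 to 4%” range above.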

OK, you can sit back in your chair again. The facts of life, as they say, are the facts of life. We have quadrupled the number of pixels alone, and then topped that with higher bit depth and more color samples. The simple math is brutal here: the growth in pixel data dwarfs the modest increase in the physical capacity of the disc (2X over Blu-ray).

Now that I have scared you, let’s talk about a new ally on our side, namely the next-generation video compression standard called H.265 or HEVC (High Efficiency Video Coding). As the name implies, this is the follow-up to the highly successful H.264 video codec used in the current Blu-ray Disc, which also goes by the name MPEG-4 AVC. As with MPEG-4 AVC, HEVC is a joint standardization effort between two major organizations: ITU-T and MPEG.

The project goal for HEVC was to double the efficiency of MPEG-4 AVC, a tall order given how good MPEG-4 AVC already is. A call for proposals was made and a large number of entries were received. Getting your patents into such a critical component of the video distribution chain is the modern-day gold rush, so every company in the world tries to get its bits in there. We have a huge clash of technologies and company/organization politics to deal with. Fortunately, we could not have had better generals to manage it than Jens-Rainer Ohm (chair of Communications Engineering at RWTH Aachen University) and Gary Sullivan, whom I had the privilege of having on my team while I was at Microsoft. If anyone could do it, these two could, given that they already had the co-chairing of MPEG-4 AVC’s development under their belt.

So did they get there? And how do we measure whether they have? Video codec performance can be evaluated either objectively, by computing a metric called PSNR (Peak Signal-to-Noise Ratio), or subjectively, through blind visual tests with human viewers. A computer algorithm determines PSNR, so the process is fast and repeatable. The drawback is that PSNR does not always correlate well with human perception of video fidelity; otherwise it is all we would ever use, since it is a lot cheaper than human trials.
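PSNR itself is a short formula: 10 times the base-10 logarithm of the peak value squared divided by the mean squared error. This Python sketch applies it to plain lists of samples. `max_value=255` assumes 8-bit video; use 1023 for 10-bit. Note that every error counts the same regardless of where it sits in the picture, which is one reason PSNR can disagree with human viewers.

```python
import math

def psnr(reference, decoded, max_value=255):
    """Peak signal-to-noise ratio in dB between two equally sized lists of samples."""
    if len(reference) != len(decoded) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((r - d) ** 2 for r, d in zip(reference, decoded)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals: no noise at all
    return 10 * math.log10(max_value ** 2 / mse)

original = [16, 128, 235, 64]
decoded = [17, 127, 236, 63]   # every sample off by one code value
print(f"{psnr(original, decoded):.2f} dB")   # 48.13 dB
```
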

In the case of HEVC, PSNR measurements seem to give a more pessimistic picture of its fidelity than subjective evaluations do. This is evident in one of the first papers comparing the fidelity of HEVC against other codecs such as MPEG-4 AVC.[2] Across a range of clips, HEVC demonstrated a 35% bit rate reduction for the same PSNR relative to MPEG-4 AVC. Subjective tests, however, demonstrated a 49% reduction, essentially the target of 50%.

Figure 4 shows an example from that paper using the PSNR metric. Bit rate savings are around 55% at lower encoding rates, dropping to about 40% at higher rates/better perceptual quality. The asymptotic behavior of the codecs on the left is typical in both audio and video: once you approach the fidelity of the source through higher bit budgets, increasing the rate further yields smaller and smaller improvements.

A corollary of this is that the performance difference between codecs starts to narrow, as none of them is working hard anymore. If I give you an hour to walk one mile, you could do it as well as an Olympic marathon runner. If I gave you just 5 minutes, then the men would be separated from the boys. The same is true for video codecs. The best only shine when the bit budget becomes tight. Low-efficiency codecs will then show a lot more compression artifacts, such as blocking (“pixelation”) and ringing (halos around sharp edges).

Back to our benchmark: subjective (human) performance evaluation for the same clip is shown in Figure 5 using MOS, or Mean Opinion Score. This time the graph represents the differential score between HEVC and MPEG-4 AVC rather than absolute values. We see bit rate reductions ranging from nearly 60% at lower bit rates to about 50% at higher ones. This would be representative of what we would see when streaming 1080p content online. It doesn’t give us direct data for UHD encoding, as there we are talking about bit rates higher by a factor of 10 to 80 and, of course, far more pixels.

For UHD performance, we can look at tests by Percheron et al. in Figure 6, right.[3] The testing went up to nearly 30 megabits/sec, with HEVC roughly maintaining a 2:1 efficiency advantage over MPEG-4 AVC at similar PSNR. Looked at differently, that is about 2 dB of picture quality improvement at the same bit rate (a significant difference, considering the maximum score of 42 dB in that test).

Yet another set of tests was performed by Bordes and Sunna,[4] this time using content shot with the high-fidelity Sony F65 camera (with true 4:4:4 UHD capture). Test clips were generated from live recordings at 3840x2160@50 fps and encoded at 8 and 10 bits with 4:2:0 color sampling. See Figure 7 for the characteristics of each.

Here is their conclusion:

“This contribution reportedly shows that considering 3840x2160 (QFHD) material, HEVC (HM10.0) Main and Main 10 profiles coding outperforms H.264/AVC (JM18.4) equivalent profiles coding in objective measure (BD-rate) of around 25 % for All Intra, 45 % for Random Access, 45% for Low Delay B on average.”
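The “BD-rate” in that quote is the Bjøntegaard delta rate. You fit each codec’s rate-versus-PSNR points with a curve and average the horizontal gap between the two curves. The pure-Python sketch below follows the classic recipe: a cubic in PSNR for the logarithm of the bit rate, integrated over the PSNR range both codecs cover. It assumes exactly four test points per codec, so the cubic passes through them exactly. Later refinements of the metric change the interpolation, and the rate and PSNR values in the example are made-up numbers, so treat this as an illustration only.

```python
import math

def _lagrange(xs, ys, x):
    """Evaluate the polynomial through the points (xs, ys) at x."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        term = yi
        for j, xj in enumerate(xs):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total

def _integrate_cubic(f, lo, hi):
    # Simpson's rule is exact for polynomials up to degree three.
    return (hi - lo) / 6 * (f(lo) + 4 * f((lo + hi) / 2) + f(hi))

def bd_rate(ref_rates, ref_psnr, test_rates, test_psnr):
    """Average bit-rate difference (%) of `test` vs `ref` at equal PSNR.

    Expects exactly four rate/PSNR points per codec. Negative means the test codec saves bits.
    """
    ref_log = [math.log10(r) for r in ref_rates]
    test_log = [math.log10(r) for r in test_rates]
    lo = max(min(ref_psnr), min(test_psnr))
    hi = min(max(ref_psnr), max(test_psnr))
    ref_area = _integrate_cubic(lambda q: _lagrange(ref_psnr, ref_log, q), lo, hi)
    test_area = _integrate_cubic(lambda q: _lagrange(test_psnr, test_log, q), lo, hi)
    avg_log_diff = (test_area - ref_area) / (hi - lo)
    return (10 ** avg_log_diff - 1) * 100

# Hypothetical example: the new codec needs half the bits at every quality point.
psnr_points = [32.0, 35.0, 38.0, 41.0]
avc_kbps = [1000, 2000, 4000, 8000]
hevc_kbps = [500, 1000, 2000, 4000]
print(f"BD-rate: {bd_rate(avc_kbps, psnr_points, hevc_kbps, psnr_points):.1f}%")  # -50.0%
```

A result of -45% in this convention is what the quoted “45% for Random Access” means: on average, 45% fewer bits for the same PSNR.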

Our main interest is in the “Random Access” mode, where a 45% efficiency gain was achieved. That is very close to our 50% target improvement over MPEG-4 AVC.

Digging further into the results, 10-bit content encoding shows an efficiency gain of 47% for luminance versus 23% and 31% for the two color difference components. The smaller improvement for color is to be expected given the use of 4:2:0 color encoding: we have much less color resolution and hence less to gain relative to MPEG-4 AVC. Had the test been at 4:2:2, the efficiency improvements for color would likely have risen as well, (hopefully?) matching that of luminance.

We could go on, but suffice it to say that, directionally, we can assume a 2:1 efficiency advantage for the HEVC video codec over MPEG-4 AVC. But we are not done yet. There is more help in the form of the peak data rate, as explained in the next section.

Peak Data Rate

All the benchmark data so far has used what is called Constant Bit Rate (CBR) encoding. This is the common profile for online streaming and broadcast digital video. Video on Blu-ray (and DVD before it) is encoded using Variable Bit Rate (VBR). Instead of keeping the bit rate constant and letting the quality fluctuate, as is the case with CBR, the quality is kept (more or less) constant and the bit rate is allowed to change to track the difficulty of the scene. As such, while a Blu-ray clip may average, say, 22 megabits/sec, its peaks can hit the 40 megabits/sec allowed in the non-3-D version of the spec.
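A toy allocator illustrates why the peak rate matters under VBR. In this Python sketch, each scene gets a bit rate proportional to a hypothetical difficulty score, scaled to the target average and capped at the format’s peak. Real encoders estimate difficulty from the video itself, typically over multiple passes. The scores and function name are illustrative assumptions.

```python
def vbr_allocate(complexity, average_mbps, peak_mbps):
    """Toy VBR allocation: bit rate proportional to scene difficulty, capped at the peak.

    `complexity` is a hypothetical per-scene difficulty score (higher = harder).
    """
    mean_complexity = sum(complexity) / len(complexity)
    return [min(peak_mbps, average_mbps * c / mean_complexity) for c in complexity]

scenes = [1, 1, 2, 4]   # two easy scenes, one average, one very hard
print(vbr_allocate(scenes, average_mbps=22, peak_mbps=40))    # [11.0, 11.0, 22.0, 40]
print(vbr_allocate(scenes, average_mbps=22, peak_mbps=100))   # [11.0, 11.0, 22.0, 44.0]
```

CBR would hand every scene 22 megabits/sec, starving the hard one and wasting bits on the easy ones. With VBR, the hard scene wants 44 megabits/sec but is clipped at Blu-ray’s 40, while a 100 megabits/sec ceiling lets it through untouched.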

UHD Blu-ray ups this to 100 megabits/sec, or two and a half times the peak rate of Blu-ray. This is a great addition and a much-needed one. Combine it with the effectiveness of the HEVC video codec and, using some hand-waving, we now have roughly 4X to 5X headroom over Blu-ray and MPEG-4 AVC (2X to 2.5X for peak data rate and 2X for codec efficiency).

Is this enough if you turn on all the lights, with full 3840x2160 at 60 fps, 4:4:4 and 10-bit encoding? The simple answer is that transparency may not be achieved for all content. The ratio of source data to available storage is still too lopsided.

Let me cheer you up by mentioning that online streaming of UHD content by the likes of Netflix is running at around 15 megabits/sec. Yes, that is roughly a third of the peak data rate of Blu-ray at 1080p! And they are trying to push four times the pixels? Right… Every demo of 4K streaming I have seen has been underwhelming. UHD Blu-ray should easily outperform such streaming content, and by a good mile.

Color Gamut and Fidelity

Before I get into the specifics of this topic, let me explain what a “gamut” is. In its simplest form, the gamut is the range of colors that the digital video numbers are allowed to represent. It is an artificial limit placed on the colors that digital video samples could otherwise represent.

The mapping of colors to and from digital data happens at the extremes of the chain: video production and display. The colorist/talent adjusts the tone of the image until they like what they see on their production display. The output of that process is just a set of numbers stored in a digital file. By themselves, they have no “color.” Like computer data, they are just bits and bytes. They get their meaning and snap into real images by using the same representation of colors that existed in the production monitor. If the two displays/chains match, we see the same colors that were seen in production. Reality is not so simple, of course, but the theory is.

It might come as a shock, but the gamut of today’s HDTV/Blu-ray harkens back to the introduction of analog color TV some 60 years ago! Due to a constant desire to stay backward compatible with the installed base of displays whenever new formats are introduced, we have never dared make the color gamut much different from that ancient standard.

In specific terms, Blu-ray conforms to ITU-R Recommendation BT.709, usually abbreviated to “Rec 709” (pronounced “wreck 709”). When compared to the CIE color chart in Figure 9, which represents the full range of colors that we can see, it is clear that a lot has been left behind. The reduced gamut takes away from the realism of the reproduced image, moving us away from the goal of “being there.”

In an ideal world, we would define our gamut to be the same as the entire CIE graph and add a bit more for good measure. That way, we would be assured that we could transmit everything we would see if we were standing in the same place as the camera. We have such a thing in audio today, with our ability to fully capture the audio spectrum we can hear. Not so with video. Why? Business reasons and, to some extent, technology reasons. The display hardware industry likes standards it can build to in volume and at economical prices, given how price sensitive consumers are when it comes to televisions. So big corners were cut in the gamut, and that has been the norm for literally decades.

So if we can’t have (or won’t be allowed to have) the full gamut of what we can see, how about the colors we actually want to capture in nature? What would that gamut look like? Dr. Pointer, working for Kodak in 1980, performed exactly this exercise by measuring the colors of 4089 real-world samples. That data is summarized and overlaid on top of the CIE chart in Figure 8.[6][7][8] Even though the surveyed gamut is considerably smaller than the CIE chart, our current Rec 709 gamut used in HDTV and Blu-ray is woefully inadequate to cover it.

I am happy to report that we are going to make significant progress in this area. The BDA has selected the ITU Rec 2020 color gamut for UHD Blu-ray. Figure 9 compares this new gamut with that of Rec 709. The biggest expansion comes in the direction of greens, where we were severely deficient. If you are a photographer, you likely know about the Adobe RGB gamut, which also expands the gamut in this direction. Rec 2020, though, goes even past Adobe RGB in greens and also expands toward reds and a bit more toward blues. It even exceeds the P3 gamut used for digital cinema! Happiness all around.

Going back to Pointer’s research, Figure 10 shows that Rec 2020 covers almost all of his surveyed data.
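The two gamuts can be compared directly from their primaries. This Python sketch uses the CIE 1931 xy chromaticities of the red, green and blue primaries as published in BT.709 and BT.2020. It computes each triangle’s area and tests whether a given chromaticity falls inside. Two caveats: xy area is not perceptually uniform, so the ratio is only a rough guide, and the “saturated green” test point is an illustrative value, not one of Pointer’s samples.

```python
# CIE 1931 xy chromaticities of the primaries (from ITU-R BT.709 and BT.2020).
REC709 = {"R": (0.640, 0.330), "G": (0.300, 0.600), "B": (0.150, 0.060)}
REC2020 = {"R": (0.708, 0.292), "G": (0.170, 0.797), "B": (0.131, 0.046)}

def triangle_area(p):
    """Area of the gamut triangle in the xy diagram (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = p["R"], p["G"], p["B"]
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2

def _side(pt, a, b):
    """The sign tells which side of the edge a->b the point lies on."""
    return (pt[0] - b[0]) * (a[1] - b[1]) - (a[0] - b[0]) * (pt[1] - b[1])

def in_gamut(xy, p):
    """True if chromaticity `xy` lies inside (or on) the triangle of primaries `p`."""
    r, g, b = p["R"], p["G"], p["B"]
    sides = (_side(xy, r, g), _side(xy, g, b), _side(xy, b, r))
    return not (any(s < 0 for s in sides) and any(s > 0 for s in sides))

print(f"Rec 2020 / Rec 709 area: {triangle_area(REC2020) / triangle_area(REC709):.2f}")  # 1.89
saturated_green = (0.21, 0.71)   # illustrative point, not taken from Pointer's data
print(in_gamut(saturated_green, REC709), in_gamut(saturated_green, REC2020))  # False True
```

By this crude measure, Rec 2020 spans almost twice the xy area of Rec 709. The green test point shows the direction of the biggest gain: it is unreachable in Rec 709 but comfortably inside Rec 2020.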

It is worth mentioning that prior to the BDA’s adoption of Rec 2020, that gamut was thought to be far too large to be adopted in real products anytime soon. Difficult or not, it is now in our future. With the UHD Blu-ray launch literally around the corner, I suspect the path of display development has already changed toward building displays that can comply with Rec 2020. Such compliance will be a major marketing talking point due to its association with UHD Blu-ray. So sit back and enjoy the advancements to come.

Why was this considered such a challenge up to now? Let’s review the most dominant display technology today, namely the LCD. In its simplest form, an LCD pixel is made up of a backlight, which provides the source of light; three liquid crystal cells, each driven by its own transistor and acting as a valve that controls how much light is transmitted; and a red, green and blue filter in front of each cell. On paper, this setup should produce any color we like given the RGB triplet.

In reality, we face two problems in achieving a wide and accurate gamut. The first is the quality of the light produced by the backlight, which is mostly LED based these days. Instead of three equal peaks of color in its spectrum, which is all we want for our RGB filters, the LED backlight produces a continuous spectrum, and one that is deficient in red and green (see Figure 12, top graph). Without any compensation, what comes out of the RGB triplets is uneven and not capable of reproducing the extremes of our gamut.

The obvious solution of getting better backlighting, or turning up the lumens until we have enough greens and reds, runs counter to the economics of display manufacturing as explained. Furthermore, brighter backlights use more power, potentially causing the loss of US Energy Star compliance (and compliance with stricter standards in California), which would be a major setback for manufacturers. The sets would also run hotter, which again is not good for the marketability of TVs.

The second problem is the non-ideal response of the RGB filters on the output of the LCD panel. Instead of letting through just red, green and blue, they also leak some amount of the other colors produced by the backlight. If your backlight has these other spectral components, as current LCD backlights do, we will be producing inaccurate colors and will be limited in how far we can reach toward the extremes of the CIE chart, which are made up of nearly pure primary colors. If you don’t have a perfect green, for example, how are you going to extend the gamut toward pure hues of green? Better color filters exist and are used in professional displays but, as with a better backlight, they increase display cost in addition to reducing display efficiency (the more you filter, the less light comes out).

Fortunately, there are two solutions already available, albeit not yet dominant in the market. The first is emissive OLED display technology. The individual subpixels in an OLED create light themselves, so there is no issue of backlight spectrum, filter accuracy, etc. OLEDs also have exceptional contrast since, like CRTs, they can turn a pixel completely off, whereas with an LCD some amount of light leaks through even in the off position.

The issues with OLEDs are cost and display life. Both have been dealt with to some extent, but more work is necessary to make OLEDs commonplace in TVs. The good news is that in the smaller form factors of tablets and phones these are not showstoppers, so one can hope to see incredible implementations of Rec 2020 there.

Another important development is “Quantum Dot” technology. This is a discovery out of Bell Labs in the 1980s, where researchers found that certain nanometer-scale crystals can absorb blue light and re-emit it at another wavelength, and hence another color. Not only that, the shift can be controlled very precisely simply by changing the size of the crystal.

As shown in Figure 11, when blue photons strike a ~3 nanometer quantum dot, it emits a saturated green light. When blue photons strike a ~6 nanometer dot, it will produce a saturated red light.[9][10]

Combining two such crystal sizes with the blue backlight itself, we can create highly pure and precisely tuned red, green and blue primaries. In so doing, the LCD subpixels each get exactly the amount and spectrum of light they need (see Figure 12). Since the light source comprises just the three primaries rather than a broad mixture, the non-ideal response of the LCD color filters no longer matters much: there are no other spectral components to leak through (ideally speaking). The result is a much expanded gamut, better color accuracy and higher efficiency, all at much lower cost than OLED.

To make it easy to adopt in the current LCD manufacturing process, companies like 3M and Nanosys have developed a film that takes the place of the diffuser between the LCD backlight and the panel itself. Quantum Dot crystals are suspended in that film using a special process that keeps them separate from each other (otherwise you get a mixture of colors emitted, getting us back to square one). The film is sandwiched between two protective layers that keep out oxygen and moisture, which are the enemies of the crystals. Claimed reliability is 30,000 hours, which matches the typical life of a TV (10 years at 8 hours/day is about 29,200 hours).

Referring back to Figure 10, we see that Quantum Dot technology gets us pretty close to Rec 2020. Nanosys states that they are able to achieve 97% of the gamut of Rec 2020 as shown in that CIE graph. Pretty darn good for a specification that people thought was way too hard to achieve!

Of course there is no free lunch, as Quantum Dot film increases the cost of the backlight and hence the LCD panel. Manufacturers are currently using Quantum Dots in their premium TVs, which retail for more money as of this writing. But hey, who said quality comes for free? Get a good display and it should last you years, so in my book that is fine.

High Dynamic Range

Dynamic range is the ratio of the brightest to the darkest part of the image. Current TV standards assume a range of 0.1 to 100 nits (cd/m^2), a 1000:1 ratio that once again dates back to the capability of CRT displays. Today’s displays can be way, way brighter, but just as with color gamut, current video standards have stayed with this limited dynamic range. Just put the display in its “Vivid” or “Dynamic” mode and you see a picture with far more “pop” but obviously incorrect colors (and sharpness).

The limited dynamic range means that bright objects in the same scene, such as reflections, the sun or a blue daylight sky, are all “blown out” and lacking in detail. Or the exposure is optimized for them and the darker parts sink into black with no detail.

High Dynamic Range or HDR aims to solve this problem. Still-image photographers are no doubt familiar with this technology. Multiple images are used to create a single composite that has far more contrast range than a single image can capture. In that sense, they are even pushing beyond what the capture equipment can create. In TV technology, the capture gear even without HDR is way ahead of our display and transmission standards. The aim is to finally take advantage of the much higher light output in our displays to produce more (simultaneous) contrast.

While there are proprietary HDR solutions such as Dolby Vision, BDA decided to make the SMPTE ST 2084 standard mandatory in the UHD Blu-ray specification. Commercial solutions such as Dolby’s can be supported optionally as long as they are layered in a backward-compatible way.

The approach taken in ST 2084 (and Dolby Vision) is to encode the video in a way that matches the sensitivity of human vision to contrast. To do this, we need a model of human vision, because it does not work linearly. That is, if you increase the light level by some amount X, the perceived increase in brightness is not proportional to X. Perception and the level of stimulus follow their own “non-linear” function.

Research in contrast/brightness detection dates back to one of the pioneers in the area, Ernst Weber, who in the 1800s tried to quantify our sense of perception. This is now known as the Weber-Fechner Law (Fechner was his student, who worked to generalize his work).

The idea behind this law is surprisingly simple but rather profound. To understand it, let’s go through a simple thought exercise. Say I give you two stones, one that weighs 0.1 pound and another that weighs 0.2 pounds, and I ask you whether you can tell the difference in how much they weigh. You will most likely be able to tell that one weighs twice as much. Now I give you two different stones, one that weighs 10 pounds and another that weighs 10.1 pounds, the same incremental difference as the first pair. I bet you will now have a hard time telling whether they weigh differently just by feeling them.

For a third scenario, I give you a stone that weighs 10 pounds and another that weighs 20. Now the difference becomes obvious again, just like with the 0.1 and 0.2 pound stones. What changed? Before, I kept the incremental amount the same. Here, I am keeping the ratio the same. The latter matches human perception far, far better than the former.

It turns out this is how most of our senses work: they respond to the size of a change relative to the base magnitude. Putting this in mathematical terms for light intensity, we get: $dp = \Delta I / I$.

$dp$ is the change in perceived intensity, $\Delta I$ is the change in light intensity and $I$ is the intensity prior to the change.

In our first example we have dp = (0.2 - 0.1)/0.1 = 1, and in the third, dp = (20 - 10)/10 = 1. In other words, the perceived difference is the same for the 0.1 and 0.2 pound stones as for the 10 and 20 pound stones. If we compute the value for the second example we get dp = (10.1 - 10)/10 = 0.01. In other words, the perceived difference between stones that weigh 10 and 10.1 pounds is almost non-existent, which makes intuitive sense.
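
A quick way to check the three stone examples is to compute the fraction directly. The sketch below evaluates dp = ΔI/I for each pair; the function name `weber_fraction` is just an illustrative choice.

```python
def weber_fraction(before: float, after: float) -> float:
    """Relative change (after - before) / before, i.e. dp = ΔI/I from the text."""
    return (after - before) / before


# The three stone pairs from the thought experiment (weights in pounds).
pairs = [(0.1, 0.2), (10.0, 10.1), (10.0, 20.0)]
for before, after in pairs:
    print(f"{before} -> {after} lb: dp = {weber_fraction(before, after):.2f}")
```

The first and third pairs both give 1.00 while the second gives 0.01, matching the intuition that only the ratio matters.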

The above ratio is called the Weber fraction. Experiments show the Weber fraction to be about 0.02, or 2%, for perception of light intensity. In other words, it takes roughly a 2% change in brightness for the eye to detect a change.

Applying a bit of college math to our Weber fraction (integrating it, with a scaling constant), we get another form of it: $p = k \ln(I/I_0)$. $p$ is the perceived contrast, $k$ is a constant determined experimentally, $\ln$ is the natural log, $I$ is once again the intensity of the image and $I_0$ is the lowest intensity that can be perceived.
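
To get a feel for what a 2% Weber fraction implies, one can count how many just-detectable steps fit between two light levels if every step is 2% larger than the last: I0 × 1.02^n = I gives n = ln(I/I0) / ln(1.02), which is the log form above with k = 1/ln(1.02). This is a minimal sketch that assumes pure Weber behavior over the whole range, something real vision does not follow at the extremes; `weber_steps` is a hypothetical helper name.

```python
import math


def weber_steps(i_low: float, i_high: float, weber: float = 0.02) -> float:
    """Count just-detectable steps between two intensities, assuming each step
    is a constant fraction `weber` of the current intensity (pure Weber's law)."""
    # i_low * (1 + weber) ** n == i_high  =>  n = ln(i_high / i_low) / ln(1 + weber)
    return math.log(i_high / i_low) / math.log(1.0 + weber)


# The legacy TV range quoted in the text: 0.1 to 100 nits.
print(f"{weber_steps(0.1, 100.0):.1f} steps")
```

For the legacy 0.1 to 100 nit range this comes out to roughly 350 steps.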

The new formula gives us the “transfer function,” a technical term for the relationship between the input and output signals of a system. In this case the transfer function describes the relationship between an electrical change (the brightness value) and the human response (the perceived difference in brightness).

Figure 13 shows this non-linear response using a linear X axis.[14] Using a log axis would have rendered a straight line.

As in my stone example, we see that when intensity is low, much smaller changes are perceptible than when intensity is high. The difference between 100 watt and 101 watt light bulbs will not be perceptible, whereas going from 1 to 2 watts certainly will be.

It would be great if life were so simple, but it is not. The rod and cone detectors, together with the neurons and the cognitive part of the brain, create a much more complex situation than the Weber fraction represents. Figure 14 demonstrates what happens to the transfer function if, for example, you just change the background intensity (please excuse the fuzziness of the image). We see a series of curves, all different from each other. Even when the background light level is kept constant, the Weber fraction only holds for the middle range of light intensities, not at the two extremes.[14]

Jumping way ahead, the most recognized work in modeling the human Contrast Sensitivity Function (CSF) comes courtesy of Barten.[12] The components that go into that model are shown in Figure 15.

The SMPTE ST 2084 standard uses this model to encode brightness levels, as shown in Slide 1 of Dolby’s SMPTE proposal and presentation.[11]

No, you don’t need to memorize that formula, or even the much simplified approximation of it in Slide 2. The key thing to pay attention to is the heading of that slide: “Perceptual Quantizer.”

Quantization, if you recall from my audio articles, is the process of taking an analog value, like the changing intensity of an image, and converting it to distinct digital steps. Perceptual Quantization (PQ) therefore means we use a model of human perception, i.e. Barten’s, to decide which digital value corresponds to which level of brightness. Instead of using this descriptive and accurate phrase, SMPTE and the industry have sadly opted for the obscure abbreviation EOTF (Electro-Optical Transfer Function).
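
To make the idea concrete, here is a sketch of the PQ curve using the closed-form formula and constants published in SMPTE ST 2084. `pq_decode` maps a normalized signal to luminance (the direction the standard defines) and `pq_encode` is its inverse. The function names and the full-range `to_code` quantizer are illustrative assumptions: real video signals use a narrower legal code range, and this is not Dolby’s or any player’s actual implementation.

```python
# Constants as published in SMPTE ST 2084.
M1 = 2610 / 16384        # 0.1593017578125
M2 = 2523 / 4096 * 128   # 78.84375
C1 = 3424 / 4096         # 0.8359375
C2 = 2413 / 4096 * 32    # 18.8515625
C3 = 2392 / 4096 * 32    # 18.6875
PEAK_NITS = 10000.0      # PQ covers 0 to 10,000 cd/m^2


def pq_decode(signal: float) -> float:
    """Normalized PQ signal in [0, 1] -> absolute luminance in cd/m^2."""
    e = max(0.0, min(signal, 1.0)) ** (1.0 / M2)
    return PEAK_NITS * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1.0 / M1)


def pq_encode(nits: float) -> float:
    """Absolute luminance in cd/m^2 -> normalized PQ signal (inverse of pq_decode)."""
    y = max(0.0, min(nits / PEAK_NITS, 1.0)) ** M1
    return ((C1 + C2 * y) / (1.0 + C3 * y)) ** M2


def to_code(signal: float, bits: int = 10) -> int:
    """Quantize to a full-range integer code (simplification: video uses a narrower range)."""
    return round(signal * (2 ** bits - 1))


for nits in (0.1, 1, 100, 1000, 10000):
    print(f"{nits:>7} nits -> 10-bit code {to_code(pq_encode(nits))}")
```

Running it shows that 100 nits, the peak of today’s video standards, lands at roughly half of the code range: PQ spends about half of its codes below that level, where the eye is most sensitive to small steps.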

The Dolby presentation goes on to compute the Just Noticeable Difference (JND), the smallest step that represents a perceptible contrast difference. They then map that to 12-bit video samples, showing that even a huge range of brightness from 0 to 10,000 nits can be covered with each step being 0.9 JND, just below the threshold of detection. What this means is that the individual steps in that 12-bit value cannot create banding or contouring, since by definition they are below the level of perception. As long as we stay below the Barten curve, we are good to go in principle.

Yes, we have a problem with that slide and computation, as we don’t have 12 bits per subpixel. Instead, we are limited to 10 bits in UHD Blu-ray. Using that depth, as shown in Slide 3, we fail the mission: our steps exceed the threshold of our visual system (the curve is now above Barten’s minimum threshold at those brightness values).

The glass-half-full version is that we are still way better off than staying with the current gamma system as represented by Rec 1886, since it is way, way above the Barten threshold at lower light intensities. So PQ has done us some good but has not done away with the problem completely.
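
The comparison can be approximated numerically by asking how large a luminance jump one code step produces at a given brightness. The sketch below includes the ST 2084 curve and a simplified Rec 1886 gamma curve. It assumes a zero black level, a 100-nit peak for the gamma system and full-range codes; these are illustrative assumptions, and the sketch does not model Barten’s threshold curve or reproduce Dolby’s slides. `relative_step` is a hypothetical helper.

```python
# PQ curve (SMPTE ST 2084 constants) and a simplified BT.1886 gamma curve.
M1, M2 = 2610 / 16384, 2523 / 4096 * 128
C1, C2, C3 = 3424 / 4096, 2413 / 4096 * 32, 2392 / 4096 * 32


def pq_decode(signal: float) -> float:
    e = signal ** (1.0 / M2)
    return 10000.0 * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1.0 / M1)


def pq_encode(nits: float) -> float:
    y = (nits / 10000.0) ** M1
    return ((C1 + C2 * y) / (1.0 + C3 * y)) ** M2


def gamma_decode(signal: float, peak_nits: float = 100.0) -> float:
    # Assumption: BT.1886 with zero black level reduces to L = Lw * V ** 2.4.
    return peak_nits * signal ** 2.4


def gamma_encode(nits: float, peak_nits: float = 100.0) -> float:
    return (nits / peak_nits) ** (1.0 / 2.4)


def relative_step(nits, bits, encode, decode):
    """Fractional luminance jump from the code nearest `nits` to the next code up."""
    top = 2 ** bits - 1
    code = max(1, min(round(encode(nits) * top), top - 1))
    low, high = decode(code / top), decode((code + 1) / top)
    return (high - low) / low


for nits in (0.1, 1.0, 10.0, 50.0):
    g8 = relative_step(nits, 8, gamma_encode, gamma_decode)
    p10 = relative_step(nits, 10, pq_encode, pq_decode)
    p12 = relative_step(nits, 12, pq_encode, pq_decode)
    print(f"{nits:>5} nits: gamma 8-bit {g8:.2%}, PQ 10-bit {p10:.2%}, PQ 12-bit {p12:.2%}")
```

Under these assumptions, the 8-bit gamma steps near 1 nit come out roughly three times larger than PQ’s 10-bit steps, and the 12-bit PQ steps are about a quarter the size of the 10-bit ones, in line with the argument above.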

So far our analysis has been theoretical and mathematical. We need to verify our assumptions with subjective viewing tests. Fortunately Dolby has done that work for us, as presented in Slide 4. Using a synthetic contrast-threshold still image, viewers voted on the smallest change in brightness that was visible. As the table shows, we need 11 bits for the darker parts of the image, so once again we exceed our 10-bit allowance. Once more, the good news is that a gamma-based system as in Rec 1886 would have required more than 12 bits, and hence would have an even higher potential for banding or, if dither is used, more noise for our video codec to compress. So we are still ahead of the game.

As if feeling our pain, Dolby proceeded to test natural images instead of a synthetic threshold test pattern. The results are in Slide 5. Yes, we can go home and celebrate now, as even a huge range of 0 to 10,000 nits doesn’t need more than 10 bits per video subpixel for smooth, banding-free representation.

How representative Dolby’s test content is remains to be evaluated once we have a lot more experience and content encodings under our belt. For now we can say that while 12 bits would have been ideal, PQ-encoded 10-bit video is darn good and a major step forward from today’s 8-bit gamma system.

Final Thoughts

The UHD Blu-ray spec takes major strides in extending the video performance of the current Blu-ray format. They could have just increased the resolution to UHD and stopped there, but they did not. They deserve a ton of credit in this regard. Yes, we did not get the absolute best possible specs; we would have needed even more capacity per disc, 12-bit video samples, the full CIE gamut, etc. But what we got has surprised just about everyone in the industry, including myself. Life is good if you are a home theater enthusiast.

Of note, features like HDR, 10-bit encoding, the Rec 2020 gamut and, to some extent, 4:2:2 encoding will all be readily noticeable improvements even if UHD resolution is not. That visibility should make UHD Blu-ray appealing in store demos and such.

The bad news is that, from a business point of view, the world is a very different place than it was when Blu-ray was introduced. Very different. The general consumer is fast moving away from physical media to online and on-demand consumption of video. Convenience in the form of immediate access has always trumped fidelity with the general public, and this occasion is no different. So the whole notion of a disc format is a backward and obsolete idea as far as the bill-paying mass consumer is concerned.

Adding to this challenge is the availability of UHD masters. When Blu-ray came out, studios had been smart enough to archive their movie transfers in 1080p resolution even though DVD only needed 1/6 as many pixels. Not so now. Yes, there is a fair bit of content shot in “4K.” But to save money, editing and creation of the final product is occurring in 2K. Creating a 4K master requires all the editing to be redone and talent approvals to be sought. If this were a hot new market, that would be in the cards, but as explained above, it is not. If studios wanted to save money even in producing what was shown in theaters, what hope is there for UHD Blu-ray?

Then there is the issue of funding and promotion. During the launch of Blu-ray, a ton of money was thrown at different parts of the ecosystem, from disc production to movie encoding, in order to win the race against HD DVD. The companies in the Blu-ray camp were very committed to winning this race and to the potential of the Blu-ray market. Recall the inclusion of an expensive Blu-ray drive in the PS3 game console and Sony’s massive investment in BD replication capacity.

The world is very different now. The core Blu-ray founders are all struggling, and any hope they had of Blu-ray being their savior is a long-gone dream. Panasonic, for example, is all but out of the consumer electronics business. Philips, the other core member, exited much earlier, before Blu-ray even launched. And the third core member, Sony, is destined to follow the same path given its huge losses in this product category.

Putting all of this together, I would be shocked if even 5% of those resources are available to move this incompatible version of Blu-ray forward. In the process of writing this article, I could not even find an official announcement or any mention whatsoever on BDA’s own website. It takes an incredible amount of resources to launch an incompatible format like UHD Blu-ray, and the BDA is not putting anything near enough behind it for a large-scale market.

Given these factors, the adoption and success of the format will fall squarely on our shoulders – the home theater enthusiasts. I don’t expect the cost of the hardware to be a barrier but content is another matter. Are we ready as a group to pay even more per movie for UHD Blu-ray content? How much will we buy? If it is not enough, this format may die like Laserdisc did. Buying million dollar replicators is not going to happen with just a few thousand titles sold.

All in all, it is hard to get excited about the prospects of UHD Blu-ray from a business point of view. At least that is the case with me. Where the excitement does lie is in the way UHD Blu-ray may drive advancements in display technology and adoption of much higher fidelity video specifications. The halo effect should benefit us across the board, whether or not it benefits UHD Blu-ray itself. The ultimate form of delivery here may be downloaded encodings that follow the UHD Blu-ray spec rather than discs (à la what Kaleidescape is doing today with Blu-ray digital distribution).

For now, let’s end this on a positive note that we are witnessing one of the most significant steps forward in delivery of high fidelity video to enthusiasts. Job well done BDA. And SMPTE. And ITU. And MPEG. And all the individuals who pushed for better than “good enough.”

References

[1] “Comparison CD DVD HDDVD BD” by Cmglee - Own work. Licensed under CC BY-SA 3.0 via Wikimedia Commons - http://commons.wikimedia.org/wiki/F...diaviewer/File:Comparison_CD_DVD_HDDVD_BD.svg

[2] Comparison of the Coding Efficiency of Video Coding Standards—Including High Efficiency Video Coding (HEVC), Jens-Rainer Ohm (IEEE Member), Gary J. Sullivan (IEEE Fellow), Heiko Schwarz, Thiow Keng Tan (IEEE Senior Member), and Thomas Wiegand (IEEE Fellow)

[3] HEVC, the key to delivering an enhanced television viewing experience “Beyond HD,” Sophie Percheron, Jérôme Vieron (PhD), SMPTE 2013 Annual Technical Conference & Exhibition

[4] Comparison of Compression Performance of HEVC Draft 10 with AVC for UHD-1 material, Bordes (Technicolor), Sunna (RAI)

[5] Recommendation ITU-R BT.2020-1 (06/2014) Parameter values for ultra-high definition television systems for production and international programme exchange: http://www.itu.int/dms_pubrec/itu-r/rec/bt/R-REC-BT.2020-1-201406-I!!PDF-E.pdf

[6] CIE Color chart from Jeff Yurek’s blog: http://dot-color.com/category/color-science/

[7] The Pointer's Gamut: the coverage of real surface colors by RGB color spaces and wide gamut displays, TFT Central: http://www.tftcentral.co.uk/articles/pointers_gamut.htm

[8] The gamut of real surface colours, Color Research and Application, M. R. Pointer (1980), Research Division of Kodak Limited

[9] Quantum-Dot Displays: Giving LCDs a Competitive Edge Through Color, Information Display 2013, Jian Chen, Veeral Hardev, and Jeff Yurek, Nanosys Corporation

[10] Quantum Dot Enhancement of Color for LCD Systems, Derlofske, Benoit, Art Lathrop, Dave Lamb, Optical Systems Division 3M Company, USA

[11] Perceptual Signal Coding for More Efficient Usage of Bit Codes, Scott Miller, Mahdi Nezamabadi, Scott Daly, Dolby Laboratories, Inc.

[12] Contrast Sensitivity of the Human Eye and Its Effects on Image Quality, Peter G. J. Barten, Chapter 3

[13] Anatomy And Physiology Of The Eye, Contrast, Contrast Sensitivity, Luminance Perception And Psychophysics, Steve Drew

[14] Weber and Fechner’s Law, http://www.cns.nyu.edu/~msl/courses/0044/handouts/Weber.pdf

Amir Majidimehr is the founder of Madrona Digital (www.madronadigital.com) which specializes in custom home electronics. He started Madrona after he left Microsoft where he was the Vice President in charge of the division developing audio/video technologies. With more than 30 years in the technology industry, he brings a fresh perspective to the world of home electronics.

-----

Backward compatibility is assured by requiring UHD Blu-ray players to play existing Blu-ray discs. And no, there is no firmware upgrade path to make an existing Blu-ray player play UHD Blu-ray discs. Almost every aspect of the new spec requires new hardware, which obviously cannot be added to your current player with new programming.

Resolution and Frame Rate

At the risk of stating the obvious, resolution is increased from a maximum of 1920x1080 (horizontal x vertical) in the current Blu-ray specification to twice as many pixels in each dimension, or 3840x2160. This is the official consumer “4K” resolution, better known as UHD.
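
Doubling both dimensions quadruples the pixel count. The short sketch below tallies the frame sizes; the DVD entry assumes 480-line (NTSC) DVD at 720x480, which the article does not state, and also checks the 1/6 figure mentioned in the business discussion.

```python
# Frame sizes in pixels (width, height). The DVD size assumes 480-line NTSC DVD.
formats = {"DVD": (720, 480), "1080p Blu-ray": (1920, 1080), "UHD": (3840, 2160)}
pixels = {name: w * h for name, (w, h) in formats.items()}

for name, count in pixels.items():
    print(f"{name:14} {count:>10,} pixels")
print("1080p / DVD:", pixels["1080p Blu-ray"] / pixels["DVD"])
print("UHD / 1080p:", pixels["UHD"] / pixels["1080p Blu-ray"])
```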

There is much debate about the value of resolution higher than 1080p. Many argue that viewers are probably sitting too far from their current sets to fully appreciate even 1080p, let alone UHD. That may be so. Still, you can opt to get a larger set as I did when I bought my UHD TV, jumping to 65 inches despite our rather short viewing distance. But even if you do not, I think it still makes sense to get all the fidelity that may exist in the production of content. I don’t want to re-buy my content library just because one day I decide to get a larger display or sit closer than I did before.

A typical rule of thumb is that you need to sit within 1.4 to 1.5 times the height of the display to see the resolution of UHD. In my opinion you are better off figuring this out for yourself. Get a resolution chart that goes up to UHD resolution, put it on a thumb drive and take it to your favorite UHD TV retailer. Display the image there, start by standing a short distance in front of the TV, focus on the image and pay attention to the separation of the pixels. Now keep stepping back until the pixels merge together and you can no longer resolve them. If you sit at that distance or farther, UHD offers no benefit to you on that size set.
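
Where does a rule of thumb like this come from? A common textbook figure for normal (20/20) acuity is about one arcminute, and the sketch below computes the distance at which one pixel row subtends that angle. The one-arcminute limit is an assumption (real acuity varies from person to person), so treat the output as a ballpark, not a substitute for the in-store test described above. The helper names are illustrative.

```python
import math

ARCMINUTE = math.radians(1 / 60)  # assumed resolving limit of 20/20 vision


def max_useful_distance(lines: int, screen_height: float) -> float:
    """Distance at which one pixel row subtends one arcminute; beyond this,
    adjacent rows blur together under the acuity assumption above."""
    return screen_height / (lines * math.tan(ARCMINUTE))


def height_from_diagonal(diagonal: float, aspect=(16, 9)) -> float:
    """Screen height from the diagonal size for a given aspect ratio."""
    w, h = aspect
    return diagonal * h / math.hypot(w, h)


h = height_from_diagonal(65)  # a 65-inch 16:9 set, in inches
for lines in (1080, 2160):
    d = max_useful_distance(lines, h)
    print(f"{lines}p: {d / h:.2f} x height = {d:.0f} in ({d / 12:.1f} ft)")
```

Under this assumption UHD works out to about 1.6 times the screen height, close to the rule of thumb, and 1080p to twice that.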

The frame rate story is straightforward: the new spec allows frame rates all the way up to 60 frames per second.

Physical Layer

Currently Blu-ray discs can have one or two layers of 25 Gigabytes each, for a maximum of 50 Gigabytes. Data is stored as a pattern of “lands” and “pits,” which represent the “optical bits” (in simplified terms, binary 1 and 0 respectively). The lands reflect the laser light and the pits do not.

The physical characteristics of the current generation (and, for posterity’s sake, those of HD DVD) are shown in Figure 1.

In Blu-ray, binary digital data to be recorded on the disc (stamped, in the case of pre-recorded media) is first converted to a new series of bits using a process called “17PP” modulation. Why don’t we store the bits as is? There are a number of reasons. One, for example, is to disallow long chains of zeros or ones. Think of the dashed lines on the freeway separating the lanes. If the distance between segments became very large, you might no longer know where one lane ends and the next one starts. Same here. By forcing regular transitions from one to zero or vice versa, we always know our position on the optical disc.
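
17PP belongs to the family of run-length-limited (RLL) codes; it is a (1,7) code, meaning that in the channel bit stream every run of 0s between two 1s is at least 1 and at most 7 long. Assuming the usual NRZI convention, in which a channel 1 marks a pit/land transition, the upper limit is what guarantees regular transitions for the reader to lock onto. The sketch below only checks that constraint; it does not implement 17PP’s actual conversion tables, and `satisfies_rll` is a hypothetical name.

```python
import re


def satisfies_rll(channel_bits: str, d: int = 1, k: int = 7) -> bool:
    """Check a (d, k) run-length limit on a string of '0'/'1' channel bits."""
    # No run of 0s may exceed k; otherwise the reader could lose its place.
    if any(len(run) > k for run in re.findall(r"0+", channel_bits)):
        return False
    # Consecutive 1s must be separated by at least d 0s.
    ones = [i for i, bit in enumerate(channel_bits) if bit == "1"]
    return all(b - a - 1 >= d for a, b in zip(ones, ones[1:]))


print(satisfies_rll("0100100010"))  # valid (1,7) pattern
print(satisfies_rll("1000000001"))  # eight 0s in a row: too long
```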

Why did I just explain the physical structure and modulation? Because those are the likely techniques behind the increase in per-layer capacity in UHD Blu-ray to 33 Gigabytes. BDA has not disclosed how they managed that, but common techniques involve improving the efficiency of modulation so that fewer optical bits are required to store the same digital data, and packing the lands and pits closer together to increase per-track density. Combine the two and it is not hard to reach 33 Gigabytes from a starting position of 25.

UHD Blu-ray discs have a minimum of two layers, giving us 66 Gigabytes. A third layer is allowed, bringing the total to 100 Gigabytes. No, I don’t know where they got the extra Gigabyte to go from 99 to 100.

Figure 2 shows the specific steps for creating a disc of up to three layers using German replication equipment maker Singulus’ new BluLine III system. The capability was announced in the fall of 2013. The slide in Figure 2 is from the company’s 2014 presentation (one of the few bits of information available on the new three-layer UHD disc process). To make the information easier to understand, I have grouped the process steps for each major component into color-coded boxes and added an English translation on the right.

Could they have added more layers and, say, created a 200 Gigabyte disc? In theory, sure. You can have a hundred layers if all you have to do is draw them up on paper. In reality, though, yield suffers as you add more layers due to contamination, unevenness of the additional layers, etc. Remember that the base/lower layers must be read through the fog of the upper ones.

Even if there were no incremental degradation from adding more layers, your yields would keep going down as you add layers. Take the simple case of each layer having a yield of 90% with no interference between them. To compute the overall yield, we multiply the per-layer yields together: 0.9 × 0.9 × 0.9 = 0.729, or about 73%. Imagine if you kept going. You would quickly find yourself in a situation where yields become too low for the process to be economical. BDA companies likely performed such an analysis and decided that three layers was as far as they dared to go.
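
The compounding effect is easy to tabulate. This sketch keeps the article’s simplifying assumption that each layer independently succeeds with the same probability (90% here); `stack_yield` is an illustrative name, and real per-layer yields are not public.

```python
def stack_yield(per_layer: float, layers: int) -> float:
    """Overall yield when every layer independently succeeds with probability per_layer."""
    return per_layer ** layers


for n in range(1, 6):
    print(f"{n} layer(s): {stack_yield(0.9, n):.1%}")
```

At five layers the same 90% per-layer figure would already drop the overall yield below 60%.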

So 100 Gigabytes is it but is that enough? You will have to wait for that answer until I cover some other aspects of the specification later in the article.

Besides yield, the other consideration is the so-called “cycle time,” or how long it takes to produce a single disc. Singulus’ current BD replicator (BluLine II) produces single-layer discs in 4 seconds and dual-layer ones in 4.5 seconds. Extrapolating, triple-layer discs should take on the order of 5 seconds to produce. For reference, Singulus rates its DVD replication machine at 2.3 seconds. To get the same production rate (ignoring yield differences for now) on triple-layer UHD Blu-ray discs, you would need roughly two machines (5 / 2.3 ≈ 2.2). Blu-ray discs have always cost more than DVDs, and three-layer UHD Blu-ray discs will cost even more. How much that translates into retail cost is hard to compute at this time without yield data.
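
Turning the quoted cycle times into hourly output makes the gap clearer. The figures come from the paragraph above; the 5-second value is the article’s own extrapolation for three-layer discs, and yield is ignored. The dictionary labels are just descriptive names.

```python
# Cycle times in seconds per disc, as quoted above (5 s is an extrapolation).
cycle_times = {"DVD": 2.3, "BD-25": 4.0, "BD-50": 4.5, "UHD BD-100 (est.)": 5.0}

for name, seconds in cycle_times.items():
    print(f"{name:18} {3600 / seconds:6.0f} discs/hour per machine")

ratio = cycle_times["UHD BD-100 (est.)"] / cycle_times["DVD"]
print(f"Machines needed to match one DVD line: {ratio:.2f}")
```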

Video Sampling

The current Blu-ray format, like DVD before it and every other form of consumer video delivery, uses “4:2:0” color sampling (see http://www.madronadigital.com/Library/Video%20Basics.html). Briefly, the three numbers separated by colons are the relative sampling rates of the black-and-white aspect of each pixel, called Luminance/Luma, and of the two color difference components, called Chrominance/Chroma. The maximum value is 4. As such, 4:4:4 means that all components are treated the same. Such is the case with RGB computer video, where you have an equal number of Red, Green and Blue subpixels.

The next step down is 4:2:2, which means that the color resolution is half that of black and white. 4:2:0 cuts the color resolution down yet again. This has been considered a proper compromise given that the eye has lower resolution for color than for black and white.

Note that your display has full color resolution in that it has equal numbers of red, green and blue subpixels. As such, when we play 4:2:0 video, for example, we have to “interpolate” (generate) the missing color data. Interpolation, however, does not get back what we discarded at encoding. It simply increases the number of color samples, not their fidelity.
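
A one-dimensional toy example shows why interpolation cannot restore a sharp color edge. The sketch halves horizontal chroma resolution by averaging pairs of samples (as in 4:2:2; 4:2:0 also halves it vertically) and rebuilds full resolution by repeating samples. Both filters are deliberately simple assumptions; real encoders and displays use better filters, but none can bring back detail that was averaged away. The row length must be even.

```python
def subsample_2to1(row):
    """Halve horizontal chroma resolution by averaging each pair of samples."""
    return [(row[i] + row[i + 1]) / 2 for i in range(0, len(row), 2)]


def upsample_repeat(row):
    """Rebuild full resolution by repeating each sample (simplest interpolation)."""
    return [v for v in row for _ in range(2)]


# A sharp color edge between samples 2 and 3 (not aligned with a sample pair).
chroma = [0, 0, 0, 100, 100, 100, 100, 100]
rebuilt = upsample_repeat(subsample_2to1(chroma))
print(chroma)
print(rebuilt)
```

The rebuilt row has a two-sample-wide intermediate step where the original edge was instantaneous, which is the softness you see at the boundaries of color bars.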

4:2:0 is never used in video production. 4:2:2 is the minimum and most common format (4:4:4 is used when we need to separate colors, such as for green screen/weatherman effects). This should tell you that there is a fidelity loss when we go below 4:2:2, and indeed there is. Display SMPTE color bars (Figure 3) from a calibration disc such as Joe Kane’s Video Essentials on your TV and pay attention to the lines separating the color bars. You will likely see them as softer than the separation between the black and white patches.

SMPTE color bars are computer generated, so their transition from one color to another is instantaneous and by definition as sharp as it can possibly be. The softness is therefore due to 4:2:0 encoding. Black and white content passes through as is and hence maintains its single-pixel transition from one shade of brightness to another.

Up until now we had no choice but to live with 4:2:0 video since, as I mentioned, it encompasses the entire world of consumer video delivery. Whether it is US digital TV broadcast, cable, satellite, Internet delivery, Blu-ray or DVD, this was the sampling ratio of Luma to Chroma. But now we have more choices in the form of UHD Blu-ray. It supports 4:2:2 sampling and, if the preliminary information is correct, even 4:4:4.

Note that depending on the type of camera used to capture the video, the full color resolution may not be there. An example would be the common Bayer pattern sensors, where a technique similar to video encoding is used to reduce the required number of subpixels. While such a camera may produce a 4:4:4 rate of Luma and Chroma samples, its actual color resolution is lower than its black-and-white resolution. So encoding it at 4:4:4 may be wasteful.

Furthermore let’s remember that higher color sampling sharply increases the total amount of data we need to encode on top of increased UHD resolution. If sufficient bandwidth is not given to the video codec to create a visually transparent image, we will most definitely be creating artifacts in the Luma channel which will take us backward, not forward in the overall fidelity of the image.

So higher color sampling rates should be used judiciously. A 3-D computer animation such as Despicable Me will benefit from them due to the very sharp edges created by the software (sharper than any camera could capture), while the picture is noise-free and hence easier to encode. A grainy, dark movie, on the other hand, will not, as it could likely benefit from the noise reduction that color filtering at lower sampling rates provides. Enough salt makes a meal taste good, but too much makes it unpalatable.

Pixel Depth

With CD audio, each sample takes 16 bits. With video, we have to allocate bits to each subpixel: luminance gets its bits and the chroma pair gets its own. Once again the world of consumer delivery has standardized on one value: 8 bits per subpixel. So if our encoding were 4:4:4, we would have three 8-bit components for a total of 24 bits per video pixel. For 4:2:0, the number of bits for each component is still 8, but we have far fewer color samples. If we averaged them across every pixel, we would still have 8 bits for Luma, but the color would average out to 4 bits for the pair of Chroma samples. This means our total number of bits per pixel is just 12. So 4:2:0 sampling cuts the raw/uncompressed data rate in half relative to 4:4:4 encoding.
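
The averaging argument generalizes to any bit depth and sampling scheme: one luma sample per pixel plus two chroma components, each present on a fraction of the pixels. The sketch below encodes that arithmetic and, as an illustration, estimates the uncompressed data rate of UHD at 60 frames per second with 10-bit 4:2:0, which shows why heavy compression is unavoidable. The function names are illustrative.

```python
# Fraction of pixels that carry each chroma component, per sampling scheme.
CHROMA_FRACTION = {"4:4:4": 1.0, "4:2:2": 0.5, "4:2:0": 0.25}


def bits_per_pixel(bit_depth: int, sampling: str) -> float:
    """Average bits per pixel: one luma sample plus two (possibly subsampled) chroma samples."""
    return bit_depth * (1 + 2 * CHROMA_FRACTION[sampling])


def raw_rate_gbps(width: int, height: int, fps: int, bit_depth: int, sampling: str) -> float:
    """Uncompressed video data rate in gigabits per second."""
    return width * height * fps * bits_per_pixel(bit_depth, sampling) / 1e9


for scheme in CHROMA_FRACTION:
    print(scheme, bits_per_pixel(8, scheme), "bits (8-bit),", bits_per_pixel(10, scheme), "bits (10-bit)")
print(f"UHD, 60 fps, 10-bit 4:2:0: {raw_rate_gbps(3840, 2160, 60, 10, '4:2:0'):.2f} Gbit/s")
```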

8 bits is a computer “byte,” which has a range of values from 0 to 255. But the full range is not used in video. Instead, the valid range for video luma values is 16 to 235: 16 is black and 235 is white. There are historical and technical justifications for this, the most well-known being the ability to see “blacker than black” and “whiter than white” in diagnostic patterns (the so-called PLUGE pattern for setting your display’s black level is one example).

As an aside, this is why it is important to tell your TV whether the feed is from a computer or a video source. Computers operate in the 0 to 255 range, so setting your TV’s input to computer mode while feeding it video will cause the display to treat level 16 as a shade of gray rather than black (it is 16 steps above zero). So you get washed-out images. Be sure to set your TV to the right input encoding. Sadly the terminology here is not standardized, but you should be able to figure it out from the setup menu and by looking at the image.
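
The mismatch can be sketched with the standard 8-bit luma scaling between video levels (16 to 235) and computer levels (0 to 255). The functions below cover luma only (chroma uses a slightly different range) and ignore display gamma, so the percentages are fractions of the full-scale signal, not of light output. The names are illustrative.

```python
def limited_to_full(y: int) -> int:
    """Expand an 8-bit video-level (16-235) luma code to computer levels (0-255)."""
    return max(0, min(255, round((y - 16) * 255 / 219)))


def full_to_limited(y: int) -> int:
    """Compress a computer-level (0-255) luma code into the 16-235 video range."""
    return round(16 + y * 219 / 255)


# Video black (16) and white (235): correct handling vs. misread as computer levels.
for code in (16, 235):
    print(f"code {code}: shown as {limited_to_full(code)} when treated as video, "
          f"but {code / 255:.1%} of full scale when misread as computer levels")
```

Misread as computer levels, black sits about 6% above true black and white falls short of full white, which is exactly the washed-out look described above.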

Back to bit depth: while 8 bits is also very common at the production and capture stage, the highest fidelity calls for 10 bits, or even 12, 14 or 16 bits. Again, remember these are bit depths for each subpixel. A 4:4:4 encoding at 16 bits means you actually have 3x16, or 48 bits per pixel. With our current distribution formats, the bit depth must be reduced to 8 bits per subpixel.

You might think that going from a high bit depth to a low one simply requires discarding the extra precision in the low-order bits of each sample. But that would be unwise. Doing so will likely cause banding/contouring, as the video jumps from one 8-bit value to the next rather than following the smoother gradations that existed at the higher bit depth.

The right way to perform the conversion is to judiciously add noise before truncating the extra low-order bits. In doing so, we replace banding/contouring with random noise, which is much less noticeable to the eye. This is called dithering.
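To make truncation versus dithering concrete, here is a small Python experiment on a slow 10-bit gradient. It assumes simple rectangular dither (uniform integer noise spanning one 8-bit step) added before dropping the two low-order bits; real mastering tools may use other noise shapes. The gradient, seed and helper names are all illustrative.

```python
import random

STEP = 4  # one 8-bit code spans four 10-bit codes (two low-order bits dropped)


def truncate(v10: int) -> int:
    """Plain truncation: discard the two low-order bits (causes banding)."""
    return v10 >> 2


def dither(v10: int, rng: random.Random) -> int:
    """Rectangular dither: add 0..3 ten-bit codes of noise, then truncate.
    On average this reproduces v10 / 4 instead of always rounding down."""
    return (v10 + rng.randrange(STEP)) >> 2


def worst_band_error(out8, src10, run):
    """Largest gap, in 8-bit units, between the average output over each run
    of identical 10-bit inputs and the ideal value v / 4."""
    worst = 0.0
    for s in range(0, len(src10), run):
        avg = sum(out8[s:s + run]) / run
        worst = max(worst, abs(avg - src10[s] / STEP))
    return worst


RUN = 256
src = [512 + i // RUN for i in range(16 * RUN)]  # slow 10-bit gradient, 512..527
rng = random.Random(42)
truncated = [truncate(v) for v in src]
dithered = [dither(v, rng) for v in src]
print(worst_band_error(truncated, src, RUN))  # 0.75: a staircase of flat bands
print(worst_band_error(dithered, src, RUN))   # much smaller: noise averages to the true level
```

The truncated output is off by up to three quarters of a step in flat bands; the dithered output hovers around the correct level, trading visible steps for fine noise.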

There is no free lunch with video dither, though, because of the mandatory use of video compression (unlike audio). Video compression gains its stunning data reduction ratios by exploiting redundancies within an image and between video frames. Random noise interferes with this process by creating constantly varying pixel values. The upshot is that dither lowers compression efficiency, possibly resulting in more compression artifacts. In some sense, then, starting with a bit depth higher than 8 may be a bad thing!
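The compression penalty can be illustrated with a rough stand-in. The sketch below uses Python's zlib, a general-purpose lossless compressor and not a video codec, purely as a proxy for "how much redundancy is left"; the gradient and seed are arbitrary assumptions.

```python
import random
import zlib

SRC = [512 + i // 256 for i in range(4096)]  # slow 10-bit ramp, each code held 256 px


def to_8bit(samples, dither, seed=7):
    """Reduce 10-bit samples to 8 bits, optionally adding rectangular dither first."""
    rng = random.Random(seed)
    out = bytearray()
    for v in samples:
        noise = rng.randrange(4) if dither else 0  # up to one 8-bit step
        out.append((v + noise) >> 2)
    return bytes(out)


plain_z = zlib.compress(to_8bit(SRC, dither=False), 9)
dithered_z = zlib.compress(to_8bit(SRC, dither=True), 9)
print(len(plain_z), len(dithered_z))  # dithered version compresses far worse
```

The banded version collapses to a handful of long runs, while the dithered one keeps hundreds of bytes of irreducible noise. A real video codec behaves differently in detail, but the direction is the same.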

A better solution, and the one adopted in UHD Blu-ray, is to support 10 bits per sample. That means we can pass the most common high-fidelity video bit depth through as is, without conversion (and hence without dither) to 8 bits. We would still need to add dither if the source bit depth is higher than 10, but the noise added is lower in level and compression efficiency is less compromised.

Comparing 10-bit encoding to 10-bit dithered to 8 bits, the overall bit rate may work out to be the same: the former has more data, but the latter has more noise. What this means is that we get 10 bits “almost for free!” This has been one of my pet complaints about Blu-ray from the start: 10-bit support should have been there from day one.

Video Compression

Now that we know the bits and bytes, let’s perform a fun exercise and figure out how much space our movie requires before video compression. Let’s assume a 90-minute movie encoded in the current Blu-ray 1080p format of 8-bit/4:2:0, and two versions of UHD using 10-bit/4:2:2 and 10-bit/4:4:4. The results are in Table 1. As expected, there is a huge difference between the current Blu-ray spec and that of UHD Blu-ray. The former needs “only” 0.4 terabytes of storage, whereas the two versions of UHD need 2.7 and 4.0 terabytes.

The story becomes more interesting when we compare these numbers to the amount of storage available in each specification. Let’s use the maximum of 50 gigabytes for the current Blu-ray and 100 gigabytes for UHD. For Blu-ray, we keep about 12% of the data and throw away 88%. For the two versions of UHD, we may keep only 2 to 4% of the total and must throw away a whopping 96 to 98% of the original source bits! And this assumes there is no audio. Houston, we (may) have a problem!
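The following Python sketch reproduces the Table 1 arithmetic and the keep/discard percentages. The frame rate is not stated in the text; 24 fps is an assumption here (it is the rate that reproduces the 0.4, 2.7 and 4.0 terabyte figures), as are decimal units (1 TB = 10^12 bytes).

```python
SECONDS = 90 * 60  # 90-minute movie
FPS = 24           # assumed film frame rate

FORMATS = {
    # name: (width, height, average bits per pixel, disc capacity in bytes)
    "Blu-ray 1080p 8-bit 4:2:0": (1920, 1080, 12, 50e9),
    "UHD 10-bit 4:2:2": (3840, 2160, 20, 100e9),
    "UHD 10-bit 4:4:4": (3840, 2160, 30, 100e9),
}


def raw_bytes(width, height, bpp, seconds=SECONDS, fps=FPS):
    """Uncompressed video size in bytes (no audio, no container overhead)."""
    return width * height * bpp * fps * seconds / 8


for name, (w, h, bpp, capacity) in FORMATS.items():
    size = raw_bytes(w, h, bpp)
    kept = capacity / size
    print(f"{name}: {size / 1e12:.1f} TB raw, keep {kept:.1%}, discard {1 - kept:.1%}")
```

This yields roughly 12.4% kept for Blu-ray and about 3.7% and 2.5% for the two UHD variants.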

OK, you can sit back in your chair again. The facts of life, as they say, are the facts of life. We have quadrupled the number of pixels alone, and then topped that with higher bit depth and more color samples. The simple math is brutal: pixel data grows far faster than the physical capacity of the disc, which increases only modestly (2X over Blu-ray).

Now that I have scared you, let’s talk about a new ally on our side: the next-generation video compression standard called H.265, or HEVC (High Efficiency Video Coding). As the name implies, it is the follow-up to the highly successful H.264 video codec used in the current Blu-ray Disc, also known as MPEG-4 AVC. As with MPEG-4 AVC, HEVC is a joint standardization effort between two major organizations: ITU-T and MPEG.

The project goal for HEVC was to double the efficiency of MPEG-4 AVC, a tall order given how good MPEG-4 AVC already is. A call for proposals was made and a large number of entries were received. Getting your patents into such a critical component of the video distribution chain is the modern-day gold rush, so every company in the world tries to get its bits in there. The result is a huge clash of technologies and company/organization politics. Fortunately, we could not have had better generals to manage it than Jens-Rainer Ohm (chair of Communications Engineering at RWTH Aachen University) and Gary Sullivan, whom I had the privilege of having on my team while I was at Microsoft. If anyone could do it, these two could, given that they had already accomplished the same feat by co-chairing the development of MPEG-4 AVC.

So did they get there? And how do we measure whether they did? Video codec performance can be evaluated either objectively, by computing a metric called PSNR (Peak Signal-to-Noise Ratio), or subjectively, using blind visual tests with human viewers. A computer algorithm determines PSNR, so the process is fast and repeatable. The drawback is that PSNR does not always correlate well with human perception of video fidelity; otherwise it is all we would ever use, since it is a lot cheaper than human trials.
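For reference, PSNR is 10·log10(MAX² / MSE), where MAX is the largest code value (255 at 8 bits) and MSE is the mean squared error between reference and decoded samples. Below is a minimal Python sketch of that formula over a flat list of samples; real codec evaluations typically compute it per frame (often per component) and average, which this sketch does not attempt.

```python
import math


def psnr(reference, test, bit_depth=8):
    """Peak Signal-to-Noise Ratio in dB between two equal-length sample lists."""
    if not reference or len(reference) != len(test):
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals
    peak = (1 << bit_depth) - 1
    return 10 * math.log10(peak ** 2 / mse)


ref = [100, 120, 140, 160]
noisy = [101, 119, 141, 159]  # every sample off by one code value
print(round(psnr(ref, noisy), 2))  # 48.13
```

Note how a fixed error in code values scores higher at 10 bits, since the peak value grows from 255 to 1023.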

In the case of HEVC, PSNR measurements seem to paint a more pessimistic picture of its fidelity than subjective evaluations do. This is evident in one of the first papers comparing the fidelity of HEVC against other codecs such as MPEG-4 AVC.[2] Across a range of clips, HEVC demonstrated a 35% bit rate reduction for the same PSNR relative to MPEG-4 AVC. Subjective tests, however, demonstrated a 49% reduction, essentially the target of 50%.

Figure 4 shows an example from that paper using the PSNR metric. Bit rate savings are around 55% at lower encoding rates, dropping to about 40% at higher rates/better perceptual quality. The asymptotic shape of the codec curves on the left is typical of both audio and video: once you approach the fidelity of the source through higher bit budgets, further increases in rate yield smaller and smaller improvements.

A corollary is that the performance difference between codecs narrows, as none of them has to work hard anymore. If I give you an hour to walk one mile, you could do it as well as an Olympic marathon runner. If I gave you just 5 minutes, the men would be separated from the boys. The same is true for video codecs: the best only shine when the bit budget becomes small. Less efficient codecs will then show a lot more compression artifacts, such as blocking (“pixelation”) and ringing (halos around sharp edges).

Back to our benchmark: subjective (human) performance evaluation for the same clip is shown in Figure 5 using MOS, or Mean Opinion Score. This time the graph represents the difference in score between HEVC and MPEG-4 AVC rather than absolute values. We see bit rate reductions ranging from nearly 60% at lower bit rates to about 50%. This would be representative of what you would see when streaming 1080p content online. It doesn’t give us direct data for UHD encoding, as we are talking about bit rates higher by a factor of 10 to 80 and, of course, far more pixels.

For UHD performance, we can look at tests by Percheron et al. in Figure 6 (right).[3] The testing went up to nearly 30 megabits/sec, with HEVC roughly maintaining a 2:1 efficiency advantage at similar PSNR compared to MPEG-4 AVC. Looked at differently, that is about 2 dB of picture quality improvement at the same bit rate (a significant difference, considering that the highest score in the test was 42 dB).

Yet another set of tests was performed by Bordes and Sunna [4], this time using content shot with the high-fidelity Sony F65 camera (with true 4:4:4 UHD capture). Test clips were generated from live recordings at 3840x2160@50 fps, using 8- and 10-bit 4:2:0 encoding. See Figure 7 for the characteristics of each.

Here is their conclusion:

“This contribution reportedly shows that considering 3840x2160 (QFHD) material, HEVC (HM10.0) Main and Main 10 profiles coding outperforms H.264/AVC (JM18.4) equivalent profiles coding in objective measure (BD-rate) of around 25 % for All Intra, 45 % for Random Access, 45% for Low Delay B on average.”

Our main interest is the “Random Access” mode, where a 45% efficiency gain was achieved. That is very close to HEVC's 50% target improvement over MPEG-4 AVC.
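The BD-rate in the quote is the Bjøntegaard delta rate: for each codec, fit log bit rate as a cubic function of PSNR, integrate both fits over the PSNR range where the curves overlap, and convert the average log difference into a percentage. Below is a minimal, stdlib-only Python sketch of that calculation. The rate/PSNR points are hypothetical, and reference tools may differ in fitting details.

```python
import math


def _cubic_fit(xs, ys):
    """Least-squares cubic y = c0 + c1*x + c2*x^2 + c3*x^3 via the normal equations."""
    a = [[sum(x ** (i + j) for x in xs) for j in range(4)] for i in range(4)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(4)]
    for col in range(4):  # Gaussian elimination with partial pivoting
        piv = max(range(col, 4), key=lambda r: abs(a[r][col]))
        a[col], a[piv], b[col], b[piv] = a[piv], a[col], b[piv], b[col]
        for r in range(col + 1, 4):
            f = a[r][col] / a[col][col]
            for c in range(col, 4):
                a[r][c] -= f * a[col][c]
            b[r] -= f * b[col]
    coef = [0.0] * 4
    for i in range(3, -1, -1):  # back substitution
        coef[i] = (b[i] - sum(a[i][j] * coef[j] for j in range(i + 1, 4))) / a[i][i]
    return coef


def _integral(coef, lo, hi):
    """Definite integral of the fitted cubic from lo to hi."""
    def antideriv(x):
        return sum(c * x ** (k + 1) / (k + 1) for k, c in enumerate(coef))
    return antideriv(hi) - antideriv(lo)


def bd_rate(anchor, test):
    """Bjontegaard delta rate (%) of `test` vs `anchor`.

    Each argument is a list of at least four (bitrate, psnr_db) points.
    A negative result means `test` needs that much less rate for equal PSNR.
    """
    lo = max(min(p for _, p in anchor), min(p for _, p in test))
    hi = min(max(p for _, p in anchor), max(p for _, p in test))
    if hi <= lo:
        raise ValueError("PSNR ranges do not overlap")
    mid = (lo + hi) / 2  # center PSNR to keep the normal equations well conditioned

    def avg_log_rate(points):
        coef = _cubic_fit([p - mid for _, p in points],
                          [math.log10(r) for r, _ in points])
        return _integral(coef, lo - mid, hi - mid) / (hi - lo)

    diff = avg_log_rate(test) - avg_log_rate(anchor)
    return (10 ** diff - 1) * 100


# Hypothetical curves: the "new" codec hits each PSNR at exactly half the rate.
avc = [(5000, 34.0), (10000, 37.0), (20000, 40.0), (40000, 42.5)]
hevc = [(r / 2, p) for r, p in avc]
print(round(bd_rate(avc, hevc), 1))  # -50.0
```

A codec that needs half the bits at every quality level scores a BD-rate of -50%, which is the HEVC design target.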

Digging further into the results, 10-bit content encoding shows an efficiency gain of 47% for luminance versus 23% and 31% for the color difference components. The smaller improvement for color is to be expected given the use of 4:2:0 color encoding: we have much less color resolution and hence less to gain relative to MPEG-4 AVC. Had the test been at 4:2:2, the efficiency improvements for color would likely have risen too, (hopefully?) matching that of luminance.

We could go on, but suffice it to say that, directionally, we can assume a 2:1 efficiency advantage for the HEVC video codec over MPEG-4 AVC. But we are not done yet. There is more help in the form of a higher peak data rate, as explained in the next section.

Peak Data Rate

All the benchmark data so far has used what is called Constant Bit Rate (CBR) encoding. This is the common profile for online streaming and broadcast digital video. Video on Blu-ray (and DVD before it) is encoded using Variable Bit Rate (VBR). Instead of keeping the bit rate constant and letting the quality fluctuate, as with CBR, VBR keeps the quality (more or less) constant and lets the bit rate change to track the difficulty of the scene. As such, while a Blu-ray clip may average, say, 22 megabits/sec, its peaks can hit the 40 megabits/sec allowed in the non-3-D version of the spec.
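A toy Python model of the VBR idea, under loudly simplified assumptions: each scene gets a rate proportional to a hypothetical "difficulty" score (standing in for constant quality), clipped at the 40 megabits/sec peak quoted above. Real encoders use multi-pass rate control and buffer models that this sketch does not capture.

```python
PEAK_MBPS = 40.0  # Blu-ray (non-3-D) peak video rate quoted in the text


def vbr_rates(difficulty, mbps_per_unit, peak=PEAK_MBPS):
    """Toy VBR: give each segment a rate proportional to its difficulty, which
    keeps quality roughly constant, but never exceed the format's peak rate."""
    return [min(peak, d * mbps_per_unit) for d in difficulty]


# Hypothetical per-scene difficulty scores (1.0 = an average scene).
scenes = [0.5, 0.8, 1.0, 1.2, 2.5, 0.6, 1.4]
vbr = vbr_rates(scenes, mbps_per_unit=20.0)
average = sum(vbr) / len(vbr)
print(f"average {average:.1f} Mbps, peak {max(vbr):.1f} Mbps")
```

The average lands near 21 megabits/sec, yet the hardest scene "wants" 50 and is clipped to the 40 ceiling, which is exactly where a higher peak rate helps.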

UHD Blu-ray ups this to 100 megabits/sec, more than double the peak rate of Blu-ray. This is a great addition and a much-needed one. Combined with the effectiveness of the HEVC video codec, and with some hand-waving, we now have 4X headroom over Blu-ray and MPEG-4 AVC (a conservative 2X for peak data rate and 2X for codec efficiency).

Is this enough if you turn on all the lights, with full 3840x2160 at 60 fps, 4:4:4 and 10-bit encoding? The simple answer is that transparency may not be achieved for all content. The ratio of source pixel data to allowed storage is still too lopsided.
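The lopsidedness can be put into numbers with a short Python sketch. It assumes the Blu-ray baseline is a 24 fps film in 8-bit 4:2:0 (12 bits per pixel) and uses the hand-waved 4X headroom from above; it compares raw data rates only.

```python
def raw_bits_per_second(width, height, bits_per_pixel, fps):
    """Uncompressed video data rate."""
    return width * height * bits_per_pixel * fps


bluray = raw_bits_per_second(1920, 1080, 12, 24)      # 8-bit 4:2:0, 24 fps film (assumed)
uhd_same_fps = raw_bits_per_second(3840, 2160, 30, 24)  # 10-bit 4:4:4 at 24 fps
uhd_max = raw_bits_per_second(3840, 2160, 30, 60)     # 10-bit 4:4:4 at 60 fps

headroom = 2 * 2  # hand-waved: 2X peak rate x 2X HEVC efficiency
growth_same_fps = uhd_same_fps / bluray
growth = uhd_max / bluray
print(f"raw growth {growth_same_fps:.0f}x at 24 fps, {growth:.0f}x at 60 fps; "
      f"headroom {headroom}x; shortfall {growth / headroom:.2f}x")
```

Even at film frame rates, raw data grows 10X against roughly 4X of headroom; at 60 fps the gap widens to 25X.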

Let me cheer you up by mentioning that online streaming of UHD content runs at around 15 megabits/sec from the likes of Netflix. Yes, that is nearly one third the data rate of Blu-ray at 1080p! And they are trying to push four times the pixels? Right… Every demo of 4K streaming I have seen has been underwhelming. UHD Blu-ray should easily outperform such streaming content, and by a good mile.
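One way to see the squeeze is compressed bits available per pixel per frame. This Python sketch assumes 24 fps for both (the frame rate cancels out in the ratio) and compares a roughly 15 megabits/sec UHD stream with the 40 megabits/sec Blu-ray video peak; the function name is illustrative.

```python
def bits_per_pixel_per_frame(rate_mbps, width, height, fps=24):
    """Average compressed bits available per pixel per frame."""
    return rate_mbps * 1e6 / (width * height * fps)


bd = bits_per_pixel_per_frame(40, 1920, 1080)      # Blu-ray 1080p at peak video rate
stream = bits_per_pixel_per_frame(15, 3840, 2160)  # ~15 Mbps UHD stream
print(f"Blu-ray: {bd:.3f} bits/pixel, UHD stream: {stream:.3f} bits/pixel, "
      f"ratio {bd / stream:.1f}x")
```

The stream gets over 10X fewer bits per pixel; even crediting HEVC with a 2X advantage, that still leaves roughly a 5X deficit.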

Color Gamut and Fidelity

Before I get into the specifics of this topic, let me explain what a “gamut” is. In its simplest form, the gamut is the range of colors that the digital video numbers are allowed to represent: an artificial limit placed on the colors that the digital video samples could otherwise describe.

The mapping of colors to and from digital data happens at the extremes of the chain: video production and display. The colorist/talent adjusts the tone of the image until they like what they see on their production display. The output of that process is just a set of numbers stored in a digital file. By themselves, they have no “color.” Like computer data, they are just bits and bytes. They gain meaning, and snap into real images, only when the display uses the same representation of colors that the production monitor used. If the two displays/chains match, we see the same colors that were seen in production. Reality is not so simple, of course, but the theory is.

It might come as a shock, but the gamut of today’s HDTV/Blu-ray harkens back to the introduction of analog color TV some 60 years ago! Due to a constant desire to remain backward compatible with the installed base of displays when introducing new formats, we have never dared to make the color gamut much different from that ancient standard.

In specific terms, Blu-ray conforms to ITU-R Recommendation BT.709, usually abbreviated to “Rec 709” (pronounced “wreck 709”). Compared with the CIE color chart in Figure 9, which represents the full range of colors that we can see, it is clear that a lot has been left behind. The reduced gamut takes away from the realism of the reproduced image, moving us away from the goal of “being there.”

In an ideal world, we would define our gamut to be the entire CIE chart, plus a bit more for good measure. That way, we would be assured of transmitting everything we could see if we were standing where the camera was. We have such a thing in audio today, with our ability to fully capture the audio spectrum we can hear. Not so with video. Why? Business and, to some extent, technology reasons. The display hardware industry likes standards it can build to in volume and at economical prices, given how price-sensitive consumers are when it comes to televisions. So big corners were cut in the gamut, and that has been the norm for literally decades.

So if we can’t (or won’t be allowed to) have the full gamut of what we can see, how about the gamut of what we want to capture in nature? What would that look like? Dr. Pointer, working for Kodak in 1980, performed exactly this exercise by measuring the colors of 4089 real-world samples. That data is summarized and overlaid on top of the CIE chart in Figure 8.[6][7][8] Even though the surveyed gamut is considerably smaller than the CIE chart, our current Rec 709 gamut used in HDTV and Blu-ray is woefully inadequate to cover it.

I am happy to report that we are going to make significant progress in this area. BDA has selected the ITU Rec 2020 color gamut for UHD Blu-ray. Figure 9 compares this new gamut with that of Rec 709. The biggest expansion comes in the direction of greens, where we were severely deficient. If you are a photographer, you likely know the Adobe RGB gamut, which also expands in this direction. Rec 2020, though, goes even past Adobe RGB in the greens, and also expands toward the reds and a bit further into the blues. It even exceeds the P3 gamut used for digital cinema! Happiness all around.
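The gamut comparison can be sketched numerically in Python using the published CIE 1931 xy chromaticities of each standard's red, green and blue primaries. Treat the area ratios as a rough guide: the xy chart is not perceptually uniform, so a bigger triangle is not proportionally "more visible color." The helper names are illustrative.

```python
# CIE 1931 xy chromaticities of the red, green and blue primaries.
GAMUTS = {
    "Rec 709": [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
    "Adobe RGB": [(0.640, 0.330), (0.210, 0.710), (0.150, 0.060)],
    "DCI-P3": [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)],
    "Rec 2020": [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
}


def area(tri):
    """Triangle area in the xy plane (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = tri
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2


def contains(tri, point):
    """True if the xy point lies inside (or on the edge of) the gamut triangle."""
    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])
    r, g, b = tri
    d = (cross(r, g, point), cross(g, b, point), cross(b, r, point))
    return not (any(v < 0 for v in d) and any(v > 0 for v in d))


for name, tri in GAMUTS.items():
    print(f"{name}: {area(tri) / area(GAMUTS['Rec 709']):.2f}x the xy area of Rec 709")

rec2020_green = GAMUTS["Rec 2020"][1]
print({name: contains(tri, rec2020_green) for name, tri in GAMUTS.items()})
```

Rec 2020 covers roughly 1.9 times the xy area of Rec 709, and its green primary lies outside Rec 709, Adobe RGB and DCI-P3 alike.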