Gentlemen, with all due respect, few of us here know what the hell you are talking about. This is a very esoteric conversation. At the very least, can you please dumb it down for the rest of us?

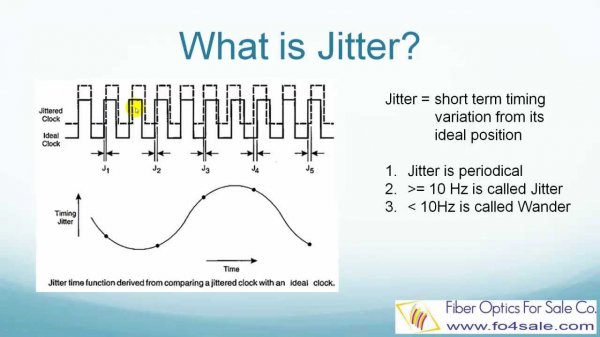

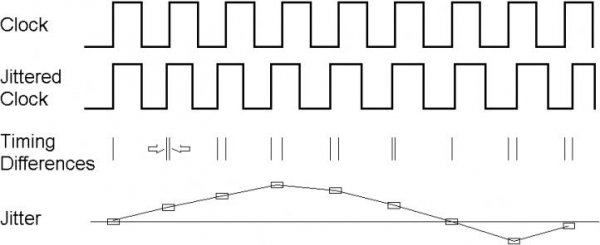

Here is an explanation. To convert digital audio to analog sound, you need three ingredients: 1) PCM audio samples, 2) a reference voltage, 3) a clock signal. #1 comes from the recording and you have no control over it. #2 can actually create jitter-like noise and distortion but is not the subject of this discussion. The topic here is a narrow aspect of #3, namely, what happens if the clock signal has random variations at very low frequencies. And when I say very low frequencies, I mean below a few tens of hertz.
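To see why the clock matters at all, here is a minimal sketch, assuming a full-scale sine and a simple model in which each sample is taken at a slightly wrong instant (independent Gaussian timing errors; the function names are hypothetical, for illustration only). Because the slope of a sine of frequency f never exceeds 2πf, a timing error Δt can move a sample by at most 2πf·Δt relative to full scale.

```python
import math
import random

def sample_errors(freq_hz, fs_hz, n, jitter_rms_s, seed=1):
    """Sample a full-scale sine with a jittery clock.

    Returns (timing_error, amplitude_error) pairs, where amplitude_error is
    the jittered sample minus the ideal sample. Jitter is modelled here as
    independent Gaussian timing errors (an illustrative assumption).
    """
    rng = random.Random(seed)
    out = []
    for k in range(n):
        t = k / fs_hz
        e = rng.gauss(0.0, jitter_rms_s)
        ideal = math.sin(2 * math.pi * freq_hz * t)
        actual = math.sin(2 * math.pi * freq_hz * (t + e))
        out.append((e, actual - ideal))
    return out

def worst_case_error(freq_hz, timing_error_s):
    """Upper bound on the amplitude error, relative to full scale.

    The slope of sin(2*pi*f*t) never exceeds 2*pi*f, so shifting a sample
    by dt changes it by at most 2*pi*f*|dt|.
    """
    return 2 * math.pi * freq_hz * abs(timing_error_s)

# A deliberately huge 1 microsecond RMS jitter on a 1 kHz tone stays within the bound.
pairs = sample_errors(1_000, 48_000, 1_000, 1e-6)
assert all(abs(err) <= worst_case_error(1_000, e) + 1e-12 for e, err in pairs)

# Worst-case sample error for 0.5 ps on a 10 kHz tone, in dB relative to full scale.
print(round(20 * math.log10(worst_case_error(10_000, 0.5e-12)), 2))  # prints -150.06
```

The bound is deliberately pessimistic (it assumes every sample lands on the steepest part of the waveform), which makes it a convenient upper limit rather than a prediction of what a DAC actually produces.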

Mivera/Mike started this argument by saying there are high-precision clock sources, designed for radar and similar applications, that have lower amounts of random jitter at low frequencies. As you can imagine, in a radar application, where you are measuring timing and operating at very high frequencies, such clock sources are important. The manufacturer is not touting these clocks for audio, but as is often the case in the DIY audio world, people chase parts with better specs without regard to whether they bring any advantage for audio.

So measurements of said oscillators are put forward, and you are expected to take a leap: that what goes into the DAC is what comes out of it, and therefore you had better own the best oscillator there is. At some level, i.e. engineering excellence, this is fine, as long as measurements show that the output of the DAC meaningfully changes. No such measurements have been put forward. Worse yet, the claim has been made that the differences don't show up in the best audio measurement tools we have. This is complete hogwash, and no evidence of any such inability has been put forward.

Another such claim has been that such random, low-frequency jitter noise is readily audible. Both John and Mike say they have heard it, yet neither has provided details of any such experiments. They then cite design engineer Bruno, who says such noise is easily audible (both in an AES paper and elsewhere). Alas, he too fails to provide any evidence whatsoever that such audibility tests were conducted, if they were conducted at all. In his case, he says the problem manifests itself in such things as smearing of the stereo image. To a lay person that kind of makes sense: timing errors must mean errors in stereo imaging.

Unfortunately, lots of crimes are committed there. Specifically, they ignore the fact that such listening tests have been performed and that the threshold for hearing random jitter is way, way higher than the jitter of any DAC, regardless of price! More importantly, years of research into how we hear explain the listening test results on the basis of both the threshold of hearing and masking. Please see the second link I provided in my first post.

Now, it is easy to dismiss all of this as technical gobbledygook and continue to cling to the notion of timing errors causing audible problems. For that, I actually have thousands of audiophiles to testify otherwise, a vast number of them from this forum! Yes, if you are into analog sound, you already have ample evidence that all of this is nonsense. Analog formats have horrible timebase accuracy compared to digital. The reason we don't consider the outcome anything remotely horrible is psychoacoustics. Here is a post I just wrote on this topic:

http://www.audiosciencereview.com/forum/index.php?threads/close-in-jitter.1621/page-9#post-40863

Let me see if I can copy and paste it here:

====

Here is one other way to intuitively evaluate the audibility of such random, low-frequency jitter if you are into analog sound. Let's look at the measurement of a Linn turntable:

http://www.stereophile.com/content/...power-supply-measurements#bA0gIcPIt6uEPkxt.97

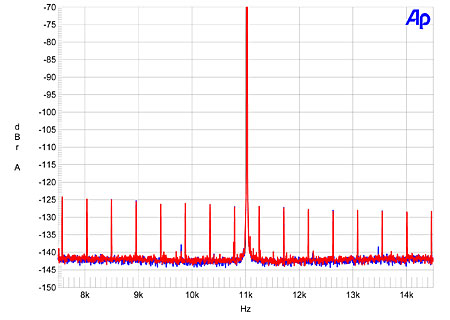

Here an LP with a 1 kHz tone was used as the source signal for the measurement. Again, in an idealized situation we would have a single, super-sharp spike at 1 kHz and nothing else.

We clearly do not have that here. There are "shoulders" or skirts around our main 1 kHz tone, and they indicate random speed fluctuations or, in digital lingo, "jitter." We know they are random because if they were not, they would show up as spikes, as I have indicated (deterministic/periodic jitter).
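The difference between skirts and spikes can be reproduced numerically. The sketch below illustrates the principle under stated assumptions and does not model the Linn measurement: a 1 kHz tone sampled at an assumed 48 kHz over 0.1 s (10 Hz DFT bins), once with periodic jitter (a 1 µs peak sinusoidal timing error at 50 Hz) and once with random jitter of 1 µs RMS whose energy sits mostly below 20 Hz, generated by a hypothetical one-pole low-pass filter on Gaussian noise. The DFT is evaluated directly, bin by bin, so only the standard library is needed.

```python
import cmath
import math
import random

FS = 48_000      # sample rate in Hz (assumed for the sketch)
N = 4_800        # 0.1 s of samples, giving 10 Hz DFT bins
F0 = 1_000.0     # test tone in Hz, as in the LP measurement (bin 100)

def tone(errors):
    """Sample sin(2*pi*F0*t) at the jittered instants t = n/FS + errors[n]."""
    return [math.sin(2 * math.pi * F0 * (n / FS + e)) for n, e in enumerate(errors)]

def bin_db(x, k):
    """Level of DFT bin k in dB relative to a full-scale, bin-centred sine."""
    acc = sum(v * cmath.exp(-2j * math.pi * k * n / len(x)) for n, v in enumerate(x))
    return 20 * math.log10(max(2 * abs(acc) / len(x), 1e-15))

def periodic_jitter(peak_s, fm_hz):
    """Deterministic jitter: a sinusoidal timing error at fm_hz."""
    return [peak_s * math.sin(2 * math.pi * fm_hz * n / FS) for n in range(N)]

def random_lowfreq_jitter(rms_s, corner_hz=20.0, seed=7):
    """Random jitter concentrated below corner_hz: Gaussian noise through a
    one-pole low-pass filter (a hypothetical stand-in for close-in noise)."""
    rng = random.Random(seed)
    a = math.exp(-2 * math.pi * corner_hz / FS)
    state, out = 0.0, []
    for i in range(N + 5_000):          # the first 5000 samples let the filter settle
        state = a * state + (1 - a) * rng.gauss(0.0, 1.0)
        if i >= 5_000:
            out.append(state)
    scale = rms_s / math.sqrt(sum(v * v for v in out) / N)
    return [v * scale for v in out]     # rescaled to the requested RMS

def mean_db(x, bins):
    """Average power over several bins, in dB (smooths random variation)."""
    p = sum(10 ** (bin_db(x, k) / 10) for k in bins) / len(bins)
    return 10 * math.log10(p)

spiky = tone(periodic_jitter(1e-6, 50.0))    # 1 us peak at 50 Hz
skirt = tone(random_lowfreq_jitter(1e-6))    # 1 us RMS, mostly below 20 Hz

# Periodic jitter: discrete sidebands at F0 +/- 50 Hz (bins 95 and 105), nothing between.
print(round(bin_db(spiky, 105), 1), bin_db(spiky, 103) < -100)   # prints -50.1 True

# Random jitter: a continuous skirt, compared close to the tone and 400+ Hz away.
near = mean_db(skirt, [97, 98, 99, 101, 102, 103])
far = mean_db(skirt, range(140, 146))
print(f"near-in skirt {near:.1f} dB, 400-450 Hz away {far:.1f} dB")
```

The periodic case puts its energy into two discrete lines with empty bins in between, while the random case should spread it into a continuous skirt whose level falls with distance from the tone. Averaging several bins is a design choice: a single bin of a random process fluctuates a lot from run to run.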

Focusing back on the random, close-in jitter that is the topic of this thread, we see massive amounts of it here. The main 1 kHz tone is broadened even at a level of just -10 dB! We have large amounts of it by the time we get to -50 dB.

The jitter measurements we have been showing use a 10 kHz source signal, not the 1 kHz used above. The higher the frequency, the more pronounced the jitter: for a given timing error, the sidebands rise by 20 dB for every tenfold increase in signal frequency. It is a simple matter to compensate, though. To get jitter sidebands at -50 dB, we need a timing error of 2 microseconds at 1 kHz! This is 2,000,000 picoseconds!!! A far cry from the 0.5 picoseconds advocated by Mike.
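The compensation can be written out as arithmetic. This is a minimal sketch, assuming the close-in random jitter at a given offset is treated like sinusoidal jitter (a common simplification) and that the 2 microsecond figure is peak-to-peak, which is the convention under which the -50 dB at 1 kHz number works out. Each sideband then sits at 20·log10(π·f·J/2) relative to the tone, where J is the peak-to-peak timing error; the function names are hypothetical.

```python
import math

def sideband_db(jitter_pp_s, freq_hz):
    """Level of each jitter sideband, in dB relative to the tone.

    Assumes sinusoidal jitter with peak-to-peak timing error jitter_pp_s and a
    small modulation index, where each sideband is beta/2 and
    beta = 2*pi*f*(jitter_pp_s/2) is the peak phase deviation in radians.
    """
    beta = math.pi * freq_hz * jitter_pp_s
    return 20 * math.log10(beta / 2)

def jitter_pp_for_level(level_db, freq_hz):
    """Inverse of sideband_db: the peak-to-peak timing error giving level_db."""
    return 2 * 10 ** (level_db / 20) / (math.pi * freq_hz)

lp_jitter = jitter_pp_for_level(-50, 1_000)
print(f"{lp_jitter * 1e6:.2f} us peak-to-peak")               # prints 2.01 us peak-to-peak
print(f"{sideband_db(lp_jitter, 10_000):.1f} dB at 10 kHz")   # prints -30.0 dB at 10 kHz
# 0.5 ps on a 10 kHz tone, if read as peak-to-peak (an assumption):
print(f"{sideband_db(0.5e-12, 10_000):.1f} dB")               # prints -162.1 dB
```

The same timing error that gives -50 dB sidebands on a 1 kHz tone gives sidebands 20 dB higher on a 10 kHz tone, which is the correction needed to compare the LP measurement with 10 kHz jitter plots.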

As we all know, there are tons of advocates of LP playback. None complain of their stereo image being smeared, even though they suffer from random jitter millions of times higher than what is being advocated.

Why is that the case? For the reasons we have mentioned before: threshold of hearing and masking. Without these two psychoacoustic effects, no one would be able to enjoy analog formats. They have horrendous timing errors, yet with good content they are delightful to listen to, even more so for their advocates, who consider them better than digital.

The levels of analog jitter can be so high that we can simply analyze them in the time domain, where the actual level of the sound can noticeably change. More on this in another post.

=====

So please don't listen to these campaigns. These characters abuse objective audio science to promote myths by creating fear and doubt. They give audio science as a whole a bad name. In my book, it is classic "measurebating" to show an oscillator's clock noise spectrum instead of showing us what comes out of our DACs.