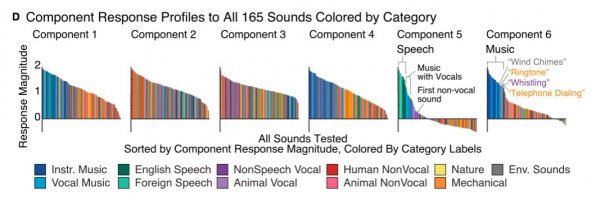

Indeed. It is dubious to rely on a traditional or 'classical' definition of music. Some ambient music, e.g. music built from slowly modulating electronic drones, has barely anything that qualifies as rhythm on a 'normal' time scale. You can also take speech from a radio and layer imitative or modulating speech over it as a musical process, as happens in some avant-garde music. In that case, rhythm becomes a very broad or opaque concept.

Music in its most general idea is organization of sounds in time. Nothing more, nothing less.

I respectfully beg to differ. Music in its most general idea is an organisation of sounds within rhythm, pitch and time. Not quite the same thing.

If language and music are not shared processes, and are in fact wholly distinct and autonomous functions within the brain, is it any wonder that when we use one to describe the other we fall short?