I don't think a discussion of architecture needs that kind of proof point, Tim. My Widescreen Review article on jitter, which just came out, uses an analogy. If you take a 1080p display and sit far enough away, it will look the same as a 720p display. I don't think anyone would then argue that we should ignore the difference in pixel count between the two displays (about 2.25:1). Nor can we say with certainty that the difference does not matter.

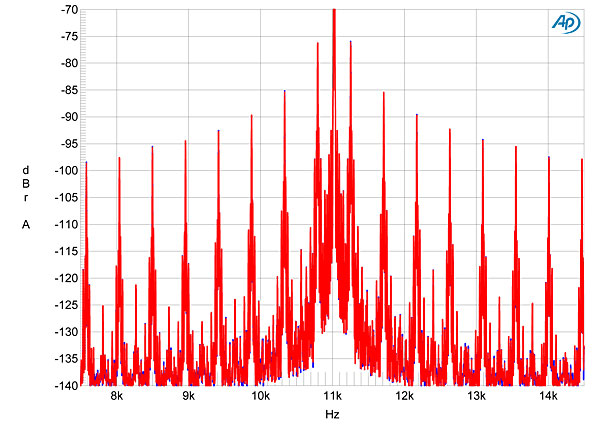

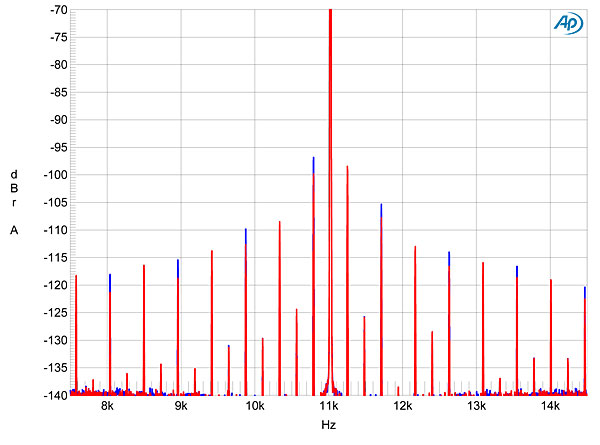

As for the sound of jitter, it has no sound of its own. It is a data-dependent distortion. Whatever sound it creates is a combination of the jitter and the source signal, which makes it highly non-linear and challenging to spot because its character varies so widely. Further, in digital systems jitter can cause aliasing: the system is required to stay within a fixed bandwidth, and jitter distortion products can violate that requirement. For an eye-opening demonstration of this, go and listen to the clips in this thread: http://www.whatsbestforum.com/showthread.php?3808-The-sound-of-Jitter. There, we have a source frequency > 20 kHz and a jitter frequency > 20 kHz. The jitter level is at the very low levels that occur in current equipment. I am pretty sure you will hear jitter there as an audible tone, even though none of the contributing frequencies were audible!
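To make the point about inaudible inputs producing an audible tone concrete, here is a rough sketch in Python. It is not how the clips in that thread were made. The specific numbers (21 kHz source, 22 kHz sinusoidal jitter, 1 ns peak jitter, 48 kHz sampling) and the function names are my assumptions for illustration only. It treats jitter as a small timing error on each sample, predicts the first-order sidebands at source ± jitter frequency (folded back into the band by sampling), and then checks the prediction against a simulated signal.

```python
import math

def jitter_sidebands(f_src, f_jit, jitter_peak_s, fs):
    """Predict first-order sidebands of a sine sampled with sinusoidal clock jitter.

    Returns [(frequency_hz, level_db_relative_to_the_tone), ...].
    Uses the small-angle approximation: sideband amplitude ~ beta / 2.
    """
    beta = 2 * math.pi * f_src * jitter_peak_s  # phase-modulation index (radians)
    level_db = 20 * math.log10(beta / 2)

    def fold(f):
        # Fold a frequency back into 0..fs/2, as sampling would.
        f = abs(f) % fs
        return min(f, fs - f)

    return [(fold(f_src - f_jit), level_db), (fold(f_src + f_jit), level_db)]

def bin_level_db(samples, f, fs):
    """Level of one frequency in the samples, in dB relative to a full-scale sine."""
    n = len(samples)
    re = sum(s * math.cos(2 * math.pi * f * k / fs) for k, s in enumerate(samples))
    im = sum(s * math.sin(2 * math.pi * f * k / fs) for k, s in enumerate(samples))
    return 20 * math.log10(2 * math.hypot(re, im) / n)

# Assumed illustrative values, not taken from the linked thread.
FS = 48_000          # sample rate, Hz
F_SRC = 21_000       # source tone, Hz (above 20 kHz)
F_JIT = 22_000       # jitter frequency, Hz (above 20 kHz)
JITTER_PEAK = 1e-9   # peak timing error, seconds
N = 4_800            # 0.1 s of samples, so every tone lands on an exact bin

# Each sample is taken at its nominal time plus a sinusoidal timing error.
samples = [
    math.sin(2 * math.pi * F_SRC * (k / FS + JITTER_PEAK * math.sin(2 * math.pi * F_JIT * k / FS)))
    for k in range(N)
]

predicted = jitter_sidebands(F_SRC, F_JIT, JITTER_PEAK, FS)
measured_1k = bin_level_db(samples, 1_000, FS)

for freq, level in predicted:
    print(f"predicted sideband at {freq:.0f} Hz, {level:.1f} dB relative to the tone")
print(f"measured level at 1000 Hz: {measured_1k:.1f} dB")
```

With these assumed numbers the difference product lands at 1 kHz and the sum product folds down to 5 kHz, both squarely in the audible band even though the source and the jitter are each above 20 kHz. Change the source and the sidebands move with it, which is the data dependence in action.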

This is why, when people ask me what jitter sounds like, I tell them that question has no answer. As far as what it sounds like, jitter is one of the least quantifiable things there is.

Going back to what started this thread: because audio is so broken, with no way to measure fidelity to the music as heard in production, we have no easy way of pointing out the contribution it is making. Hence your comment.
