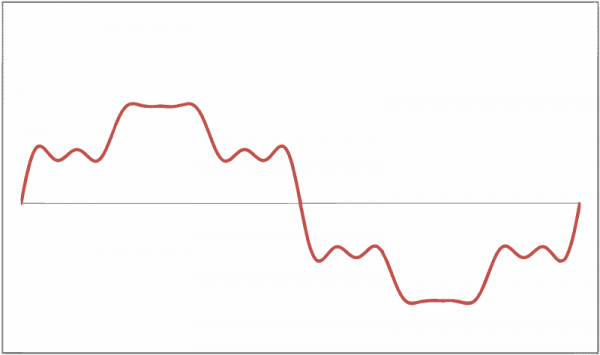

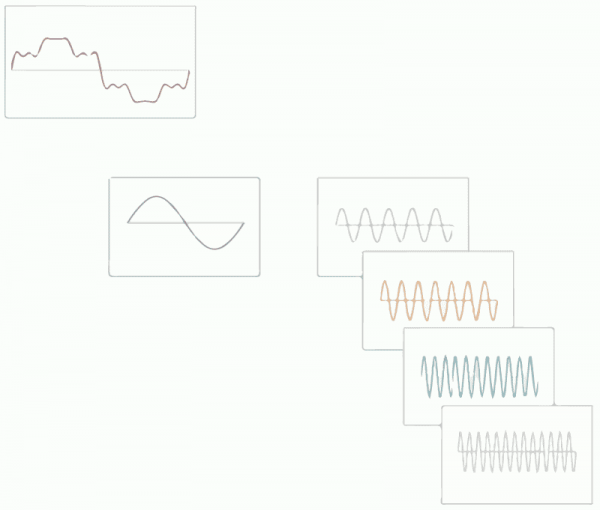

Thought experiment: a perfect, zero-distortion bass transducer is fed a signal that combines 20 Hz and 40 Hz sine waves, at a level where the driver's sensitivity gives a measured output of 90 dB, with the 40 Hz component 20 dB below the 20 Hz one. What do you think the listener can hear? And how does that differ from a real-world transducer that, fed a pure 20 Hz input, generates 10% 2nd harmonic distortion (i.e. 20 dB down)?
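For anyone who wants to check the arithmetic, here is a rough Python sketch of the two cases. It only compares the waveforms and says nothing about what the ear does with them. The function names and the 1 kHz sample rate are made up for illustration, and it assumes the distortion harmonic is in phase with the fundamental, which a real driver will not guarantee.

```python
import math

def db_to_ratio(db):
    """Convert a level difference in dB to an amplitude ratio."""
    return 10 ** (db / 20)

def ratio_to_db(ratio):
    """Convert an amplitude ratio to a level difference in dB."""
    return 20 * math.log10(ratio)

def two_tone(t, f0=20.0, rel_db=-20.0):
    """Ideal driver: 20 Hz plus a 40 Hz tone that is rel_db below it."""
    return (math.sin(2 * math.pi * f0 * t)
            + db_to_ratio(rel_db) * math.sin(2 * math.pi * 2 * f0 * t))

def distorted_tone(t, f0=20.0, hd2=0.10):
    """Real driver: pure 20 Hz in, 10% 2nd harmonic added on the way out.

    Assumption: the harmonic is in phase with the fundamental.
    """
    return (math.sin(2 * math.pi * f0 * t)
            + hd2 * math.sin(2 * math.pi * 2 * f0 * t))

SAMPLE_RATE = 1000  # samples per second, arbitrary choice for this sketch
samples = [n / SAMPLE_RATE for n in range(SAMPLE_RATE)]  # one second of time points

# Largest sample-by-sample difference between the two cases.
max_diff = max(abs(two_tone(t) - distorted_tone(t)) for t in samples)

print("10% expressed in dB:", ratio_to_db(0.10))
print("largest waveform difference:", max_diff)
```

Under those assumptions 10% works out to 20 dB down, and the two waveforms match to within floating-point rounding. If the harmonic's phase differs, the waveform shape changes but the level of each component does not.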
