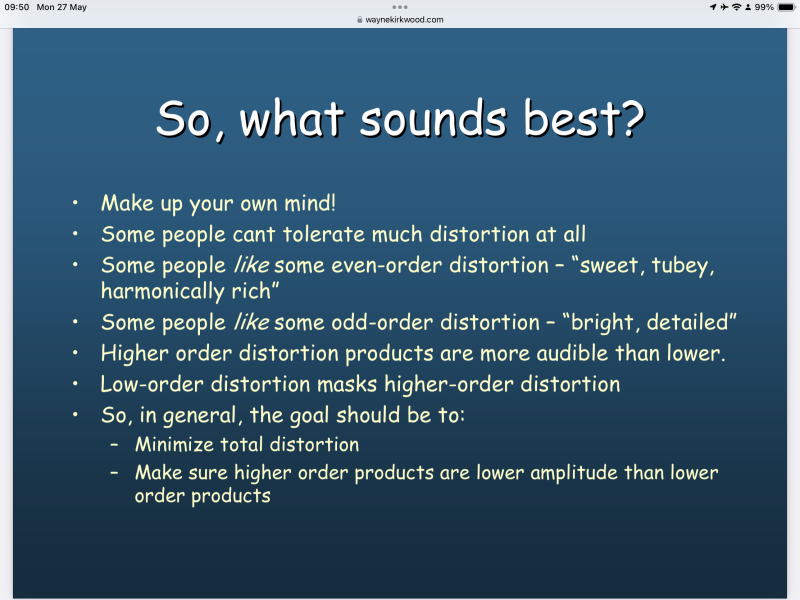

Even now, 20 years later, Pete Millett’s presentation is an easy and accessible way for many to learn about audio electronic distortions:

The Sound of Distortion

Pete’s presentation will help others for decades to come. I will add that everything in audio has a transfer function and just like Pete, having control over those transfer functions allows for predictable outcomes.

I particularly like this slide from his presentation…

Why, oh why, does vinyl continue to blow away digital?

- Thread starter godofwealth

What sounds best may be an individual choice, but which sound is most accurate is not; that slide states this as well.

I don’t disagree. However, this thread is asking why vinyl still sounds better to the OP, not which format is more accurate. It does feel like we are teasing out some possibilities…

Hi Carlos,

The OP started this thread with a disparaging comment about the sound of digital “why do I feel all digital sucks”, adding that despite the distortion, he prefers the sound of analogue.

Since that original post, the debate seems to me to be which (analogue sans digital step in processing vs that which has a digital processing step) sounds more real. In looking over several of your contributions, you seem to be arguing over which (analogue sans digital vs analogue from digital) is more “accurate”.

I am admittedly ignorant of the technology behind the very best digital, and have no idea of how bits and bytes are more “accurate” than a D2D recording of voice, let alone more “real” sounding (if you are even claiming that as I do not believe you used that term in your arguments).

Could you briefly explain to this luddite how an analogue signal that is converted into computer language and then converted back into an analogue signal will be more “accurate” than an analogue signal being directly cut to lacquer? How is that measured?

Also, do you feel that including a digital process in recording music makes it sound more “real” to listeners than analogue only, or do you have no opinion on that?

That's not quite a fair comparison...

A fair comparison would be cutting into lacquer versus digitizing, i.e. the "encoding" of the analogue waveform.

The next fair comparison would be reading from the lacquer versus reconstructing the analogue from digital, i.e. the "decoding" of the analogue waveform.

The "how is that [comparison] measured?" question is valid for both.

I still like the distortions inherent in analog so much, that I like listening to vinyl much more than digital. Especially if the vinyl version is the ONLY analog step in the recording process.

Come at me. I ain’t afraid.

Mcsnare, Could you be a bit more specific about what you mean by “like”? You prefer listening to vinyl over digital. How do they sound different to you and does one sound more convincing or real to you? If so how and why do you think that is?

I answered this back in post #373:

Post in thread 'Why, oh why, does vinyl continue to blow away digital?'

https://www.whatsbestforum.com/thre...ntinue-to-blow-away-digital.38800/post-972141

Dave is a Mastering Engineer and not a Recording Engineer. A better question would be which commercial release sounds most like your mastering.

FYI - so much happens between a live feed and a commercial release in the recording and production process that the two are far removed from each other, even after the recording engineer hands it off to the mixing engineer, who then hands it off to the mastering engineer.

I am now. The first 20 years of my career were as a recording engineer, mixer, and producer.

Does all of the art involved to get that predictable outcome result in a listening experience that is more or less like that of the original event?

In general, Mastering engineers are working at the behest of the producers and artists to “polish” what is sent to them and to get it ready for commercial release. I see that Dave is online so he can state for “himself” what his frame of mind and objectives are when he is approaching a project.

Typically the mastering engineers are not present during the recordings.

The absolute accuracy is not really open for discussion. A signal is preserved orders of magnitude better going through a digital process than an analogue one. Consider the following:

Digital:

sound waves -> voltage at mic membrane -> preamps and manipulation -> high resolution ADC -> bit perfect storage -> high resolution DAC -> voltage at preamp input to feed your system.

Analog:

sound waves -> voltage at mic membrane -> preamps and manipulation -> to storage:

a) tape: voltage induces magnetic encoding in a magnetizable support. Timing is encoded by motor motion pulling the tape through the place where encoding takes place (the head)

b) direct to metal vinyl: voltage induces magnetic fluctuations that move a hard needle across a metal or lacquer support. Timing information is again entrusted to a motor speed. Encoding is performed by either active or passive elements that apply an EQ curve to the signal (usually RIAA)

c) tape to vinyl: combine the two processes above and add the tape read

-> storage in material media directly -> to read

a) tape: feed the tape again, run the motor and read the magnetic fluctuations on the tape to induce a voltage at the read head -> perform any corrections for the curves used via passive or active electronics

b) vinyl: run the motor and use a stylus to create a voltage by shaking a magnet or coil, amplify that by orders of magnitude (40 to 80 dB?), re-apply equalization to undo the one applied in recording, using passive or active components -> voltage at preamp input to feed your system.
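Two steps in the vinyl playback chain above are easy to put numbers on: the phono-stage gain and the RIAA de-emphasis. The sketch below is an illustration only, not something from the original post. It converts the 40 to 80 dB range into voltage ratios and evaluates the standard RIAA playback curve from its three time constants (3180 µs, 318 µs, 75 µs), normalised to 1 kHz. The function names are hypothetical.

```python
import math

# Standard RIAA playback time constants, in seconds.
T1, T2, T3 = 3180e-6, 318e-6, 75e-6


def db_to_voltage_ratio(db):
    """Convert a gain in decibels to a voltage ratio."""
    return 10 ** (db / 20)


def riaa_playback_magnitude(freq_hz):
    """Unnormalised magnitude of the RIAA playback (de-emphasis) response."""
    w = 2 * math.pi * freq_hz
    numerator = math.hypot(1.0, w * T2)
    denominator = math.hypot(1.0, w * T1) * math.hypot(1.0, w * T3)
    return numerator / denominator


def riaa_playback_db(freq_hz, ref_hz=1000.0):
    """Playback response in dB relative to the level at ref_hz."""
    ratio = riaa_playback_magnitude(freq_hz) / riaa_playback_magnitude(ref_hz)
    return 20 * math.log10(ratio)


if __name__ == "__main__":
    for gain_db in (40, 60, 80):
        print(f"{gain_db} dB gain = x{db_to_voltage_ratio(gain_db):,.0f} in voltage")
    for f in (20, 100, 1000, 10000, 20000):
        print(f"{f:>6} Hz: {riaa_playback_db(f):+6.2f} dB")
```

Relative to 1 kHz, playback boosts the bass by roughly 19 dB at 20 Hz and cuts the treble by roughly 20 dB at 20 kHz, so the phono stage has to apply a swing of almost 40 dB on top of a gain of 100x to 10,000x.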

It should be clear that the digital step is far more accurate: there are no moving parts, very few parts at all, and they are all quite quantifiable, trackable and easy to account for.

Every part of the analog process is difficult and adds errors: the signal goes through an incredible number of magnetic reconstructions, speed relies on motors all the way, everything has inertia (stylus, platters, bearings,...). There is no such thing as a 'pure' analog recording any more than a pure digital recording. The signal is tortured all the way from the mic to the final medium, and then tortured back. It is rebuilt so many times on the other side of a transformer, equalized in lossy processes and then back again to be reproduced, that it is absolutely comparable to a (haphazard) digitization process, just in analog media. There is nothing magical about it.

ADC is effectively transparent. DAC can be made effectively transparent as well. This is trivial to measure and quantify; don't get confused by people who say otherwise. Digital processes run the world and their resolution is not something we should trivialize with anecdote. 24 bits gives you just short of 17 million discrete intervals to categorize something.
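To put the 24-bit figure in context, here is a small sketch (an illustration, not part of the original post). It counts the quantization levels, applies the textbook 6.02·N + 1.76 dB rule for the signal-to-quantization-noise ratio of a full-scale sine, and checks that rule by actually rounding a sine wave to N bits. It models an ideal quantizer only; the 997 Hz tone, the 48 kHz rate and the function names are arbitrary assumptions.

```python
import math


def quantization_levels(bits):
    """Number of discrete levels available at a given bit depth."""
    return 2 ** bits


def theoretical_sqnr_db(bits):
    """Textbook SQNR of an ideal quantizer driven by a full-scale sine."""
    return 6.02 * bits + 1.76


def measured_sqnr_db(bits, freq_hz=997.0, sample_rate=48000, n_samples=48000):
    """Round a full-scale sine to `bits` bits and measure the resulting SQNR."""
    step = 2.0 / (2 ** bits)  # full scale spans -1.0 .. +1.0
    signal_power = 0.0
    noise_power = 0.0
    for n in range(n_samples):
        x = math.sin(2 * math.pi * freq_hz * n / sample_rate)
        q = round(x / step) * step  # ideal rounding quantizer, no dither
        signal_power += x * x
        noise_power += (q - x) ** 2
    return 10 * math.log10(signal_power / noise_power)


if __name__ == "__main__":
    for bits in (16, 24):
        print(
            f"{bits} bits: {quantization_levels(bits):,} levels, "
            f"theory {theoretical_sqnr_db(bits):.1f} dB, "
            f"measured {measured_sqnr_db(bits):.1f} dB"
        )
```

For 24 bits this gives 16,777,216 levels and a theoretical figure of about 146 dB. That is the ceiling set by the number format itself; the analog stages around a real converter set the practical limit.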

Just a bit of fun: how do those 24 bits compare to the potential of vinyl? The smallest groove is about 0.04 mm, so half that divided by the 2^24 levels of a 24-bit word is ~1.2e-12. That's the information size, in meters, you'd need to reproduce 24 bits on a record. So do we get it? A small molecule is about ~1e-9 meters. We're nearly three orders of magnitude off, in favor of a simple 24-bit recording. It is, at minimum, 100x more resolving than analog. This is the reason why people digitize tape to DSD and 'it sounds the same'. The tape signal literally fits within the digital signal, with headroom to spare, and that is only possible with the ubiquitous high quality ADCs and DACs we have.
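The back-of-envelope estimate above can be checked in a few lines. The inputs are the post's own figures (a 0.04 mm groove, half of it available for the excursion, and ~1e-9 m for a small molecule); the constant and function names are hypothetical and only there for illustration.

```python
GROOVE_WIDTH_M = 0.04e-3   # smallest groove width quoted in the post, in meters
MOLECULE_SIZE_M = 1e-9     # "small molecule" size quoted in the post, in meters
BITS = 24


def step_size_m(groove_width_m=GROOVE_WIDTH_M, bits=BITS):
    """Physical step needed to fit 2**bits levels into half the groove width."""
    return (groove_width_m / 2) / (2 ** bits)


def shortfall_ratio(molecule_size_m=MOLECULE_SIZE_M):
    """How many times larger the molecule is than the required step."""
    return molecule_size_m / step_size_m()


if __name__ == "__main__":
    print(f"required step: {step_size_m():.3e} m")
    print(f"molecule / step: {shortfall_ratio():.0f}x")
```

With these inputs the required step comes out near 1.2e-12 m, several hundred times smaller than the assumed molecule size.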

So, digital is more accurate, in absolute terms.

Now here comes the kicker, after all this blabber: does it matter? Are we even asking the right questions? Is absolute accuracy relevant or is there something else?

It is more relevant if we are considering just 'information'. But what about 'music', and more generally, sound?

Remember this all starts at the microphone and ends in our ears (assuming acoustic music of course). And that's important: they are the gatekeepers for all of this. A mic is a deeply flawed device: quite frequency selective, saturates quickly, directional, has internal resonances and so on, and those are always there, independent of the downstream being digital or analog. Our ears are laden with eons of evolutionary pressure to be also very selective, typically at the expense of linearity in most processes. There are a number of psychoacoustic cues as to why we prefer a given type of euphonic presentation over another, as to why a given type of noise renders details more present and trackable, and so on. Some of these things are natively (by coincidence) present in analog chains, and that seems to be a reason why they score high in preference, even when trying to discount the cultural and ritualistic effects innate to using the medium. So it could be that analog is more accurate to the music under certain conditions.

As a user of both, I generally don't share that opinion. I can get digital to sound analogue but not the other way around, and that tells me something. I certainly entertain these ideas and they inform my design choices. But I'm not sure we are debating a meritorious problem, at least in its current typical format. There is nothing inherent about either of the two processes that elevates it above the other, in relative terms. There is too much music recorded natively in each of the processes to disregard, and I have to agree with Mike: above a certainly diffuse but nonetheless real threshold of quality, the differences are difficult to pin down, highly release dependent and quickly left at the door, and you just enjoy the outcome.

I am trying to better understand your past comments about accuracy. I asked Dave a different question. You explained well the different people who do the art and use their tools to achieve predictable outcomes. You do the same with your tool in your system. Is the purpose of the artistic steps to editorialize and create an outcome or is it to be faithful or accurate to the original music event? It is called a recording. Perhaps we should call it an interpretation. What does accuracy really matter if those involved are creating some predictable outcome?

Which of the DDD commercial releases that you've mastered would you say sound like the live feed?

At the mastering stage, I have no idea what it sounded like to the people in the recording “studio.” I use that term loosely because these days very few releases have spent any time in what most folks here would consider a studio. For quite a while now, most recordings have been done in private (or commercial) home studios of every conceivable quality level.

When I was exclusively a recording engineer I hated the sound of early (and mid!) digital so I worked in analog as much as I could. We were in the minority back then. Most artists and engineers loved digital for all its many advantages - even if it subtly sounded like edgy cardboard.

But I also CLEARLY heard the loss of even the first playback of tape compared to the console feed. Only digital of the last 10-15 years has gotten to the point of refining out the nastiness and dryness for me to use it - BUT even early digital sounded more like the feed, in a few important ways, than tape did. Sales of pro analog gear such as consoles and tape decks effectively ended over 20 years ago, so digital pretty much had to improve.

Today, using tape is a creative decision that some like to do when possible.

To Rexp’s question, I don’t think anything I’ve worked on has stayed in its earliest, freshly recorded state to the very end, but there were a few jazz things where the release sounded pretty close to, if not the same as, what we heard as it went down.

I've done a lot of mixing where we mixed to tape and digital, and everyone preferred the digital as it captured the mix better.

Understood. Triangulation is easier for me.

It’s also an analytical way of thinking, as an engineer does. I agree with you on positive, tangible evaluation.

I never listen to suspend disbelief. More or less real is not on my wish list. I simply connect with the music better when it has the distortions inherent in the cutting and playback process of vinyl.

It is my understanding that the developer of HQPLAYER developed dynamic filters for the Finnish military to listen for Russian submarines. The special filters and techniques required for that type of military detection and surveillance have an application in extracting low level detail from recordings.

I spent 20 years as a submariner. I did all the officer duties including Chief Engineer, Executive Officer and Commanding Officer. All I can say about the above claim is BS.

I don’t know for sure how much of the claim is true, but what I can tell you with certainty is that users of military detection and surveillance systems are very different from the designers and developers of these systems. I worked for Lockheed Martin designing space and military electronics. Which defense contractor did you work for and what type of submarine electronics did you design and develop?

Great post.

It seems to me, the quality of the final product that we have available to listen to, whether in an analog or digital form, is far more dependent on all the myriad production decisions, and the skills of those involved, from recording to mastering and beyond, than the format the product is delivered in or the gear we use to play it back on.

Everything we spend so much time kvetching over, particularly with regard to the so-called digital/analog debate, is just the icing on the cake.

You are right. Contractors, designers and developers of military systems have little knowledge about how to use them in the operational environment. They know only a little about operational or tactical usage because certain things are kept classified. That’s normal because their function is to design the product to specification. Anything beyond that is over their heads; it’s the procuring military’s job. Actually, they’re not too different from developers working in other industries in this respect. And when it comes to detecting submarines with a filter similar to HQPlayer’s… Well, good luck with that.

If it were true that those filters play an important role for the Finnish Navy in detecting Russian submarines, would it be announced publicly? Would they let it be shared here? Maybe he did something for the Navy, but catching submarines with the help of a filter is a very long shot.
