Yeah, I'm not feeling the love...

Tim

if I had some rainbows and unicorns i'd throw them your way too.....

(...)

Thanks, but they wouldn't have been much help once you implied that I am dishonest and have some kind of hidden agenda. As I've told you before, I don't have an agenda. I have nothing to sell, and I don't really care that some disagree with me. I do have a point of view, however, and I express it, just like the rest of the active members of WBF. The problem, I think, is that my POV often aligns with the available data on the subject and I'm not afraid to point that out. Seems to annoy some people. But we're talking about people now, not audio, so I'll leave it at that.

My current sig is much more indicative of my point of view, thanks.

Tim

Thanks for that post Mike. It is the spirit that we like to see in WBF. I hope it is acceptable to Tim. It was very apropos for a thread that starts with "Introspection."

Tim,

I was trying to be clever and funny and had no mean-spirited intentions. if I crossed the line into something personal please accept my apologies. I respect your approach to things and consider you an articulate intellectual who goes his own way. no doubt a few years back I misinterpreted your approach as some sort of 'anti-me' perspective. but that was then.

have a nice weekend.

Tim,

IMHO your signature is a permanent, ambiguous and unfriendly accusation against high-end people. Just because you do not want to consider the facts as reported in good faith and analyze them does not imply they do not exist.

Take any music encoder: AAC, MP3, WMA, etc. They all work in the frequency domain. They take your music with its arbitrary waveform, decompose it into the individual frequencies that make it up (using DCT), perform data reduction, and then convert it all back to the time domain in the decoder/player. What comes out of that system is clearly music, which demonstrates that decomposition into individual frequencies works as the theory proves it does.
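For anyone who wants to try that round trip, here is a minimal sketch, assuming a plain orthonormal DCT on one short frame. Real codecs use an overlapped MDCT with windowing and a psychoacoustic model, none of which is modeled here. The function names, the sample frame and the uniform quantizer standing in for the data-reduction step are all made up for illustration.

```python
import math

def dct(frame):
    """Orthonormal DCT-II: time-domain samples -> cosine coefficients."""
    n = len(frame)
    coeffs = []
    for k in range(n):
        total = sum(x * math.cos(math.pi * (i + 0.5) * k / n)
                    for i, x in enumerate(frame))
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        coeffs.append(scale * total)
    return coeffs

def idct(coeffs):
    """Inverse of dct(): cosine coefficients -> time-domain samples."""
    n = len(coeffs)
    frame = []
    for i in range(n):
        total = 0.0
        for k, c in enumerate(coeffs):
            scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
            total += scale * c * math.cos(math.pi * (i + 0.5) * k / n)
        frame.append(total)
    return frame

def reduce_data(coeffs, step):
    """Hypothetical stand-in for data reduction: coarse uniform quantization."""
    return [round(c / step) * step for c in coeffs]

def codec(frame, step=None):
    """Transform, optionally reduce data, transform back."""
    coeffs = dct(frame)
    if step is not None:
        coeffs = reduce_data(coeffs, step)
    return idct(coeffs)

def max_error(a, b):
    """Largest absolute sample difference between two frames."""
    return max(abs(x - y) for x, y in zip(a, b))

# An arbitrary, non-repeating frame (made-up sample values).
frame = [0.0, 0.9, -0.3, 0.4, 0.1, -0.8, 0.2, 0.05]

lossless_error = max_error(frame, codec(frame))         # data reduction skipped
lossy_error = max_error(frame, codec(frame, step=0.25))  # data reduction applied

print("error without data reduction:", lossless_error)
print("error with data reduction:   ", lossy_error)
```

With the data-reduction step skipped, the only difference from the input is floating-point rounding. With it applied, the error becomes measurable. Whether that error is audible is a separate question that this sketch says nothing about.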

Phelonious Ponk said:You didn't actually answer the question, but I'll take that as a no.

Phelonious Ponk said:If you're right, I wonder how audio engineers and designers develop/improve/QC the equipment they build and sell. They must listen to every chip, every tube, every driver. These sub components are, after all, consistent or not based on measurements and chosen on specs. These builders must listen to every component before selling it, and throw those that don't sound like the reference component in the dumpster. Given all of that, high-end is a heck of a bargain.

Fitzcaraldo215 said:Well, I do not see that you have proven anything, in spite of your caveats. Yes, of course, all acoustic instruments, and even most electronic ones, have their own complex spectrum of harmonics on top of the fundamental. So, comparing pure, electronically generated tones containing no harmonics with an actual instrument containing many is weak as a starting point, though you try to explain it. Why would you even start your argument with that obvious spectral mismatch?

But, sheer complexity of instrument and musical harmonic spectra, in and of itself, does not invalidate Fourier, though you claim, somehow, it does. Sure, it makes the Fourier analysis more complex in the process of trying to get down to the sine/cosine wave analytical components of the waveform, but if I have a choice between believing Fourier and believing you, hmmm. Fourier has lasted for a long time and you have not won the Nobel Prize, not yet, at least.

Phelonious Ponk said:...whether or not (I) think thorough sine wave measurement is indicative of the performance of equipment when playing music...

Don Hills said:… as will any combination of sine waves, as you illustrated.

But the complexity of "real" music compared with sine waves is irrelevant to the point, that "the performance of the system when playing music" can be judged by measuring how accurately the "amplitude-over-time" relationship of the output of the system matches the input. You can accurately measure that with sine waves. (I'll grant you'll need more than one.) The goal is "a straight wire with gain". Of course, the devil is in the details of achieving that goal.
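A rough sketch of that kind of measurement, under loud assumptions: the 48 kHz sample rate, the three test frequencies, the gain of 2 and the small cubic term standing in for a "real" amplifier are all hypothetical values chosen for illustration, not a model of any actual component. It feeds a multi-tone signal through two pretend systems and reports how far each output departs from "input times gain".

```python
import math

SAMPLE_RATE_HZ = 48000  # assumed sample rate for this sketch

def multitone(freqs_hz, n_samples):
    """Equal-weight sum of sine waves, scaled to stay within [-1, 1]."""
    return [
        sum(math.sin(2 * math.pi * f * i / SAMPLE_RATE_HZ) for f in freqs_hz)
        / len(freqs_hz)
        for i in range(n_samples)
    ]

def straight_wire(signal, gain):
    """Ideal system: output is exactly the input times the gain."""
    return [gain * s for s in signal]

def slightly_nonlinear(signal, gain, k3):
    """Hypothetical non-ideal system: gain plus a small cubic distortion term."""
    return [gain * (s - k3 * s ** 3) for s in signal]

def error_db(inp, out, gain):
    """Energy of (output - gain * input) relative to the ideal output, in dB."""
    err = sum((o - gain * i) ** 2 for i, o in zip(inp, out))
    ref = sum((gain * i) ** 2 for i in inp)
    return -math.inf if err == 0 else 10 * math.log10(err / ref)

test_signal = multitone([1000.0, 1500.0, 7000.0], 4800)

ideal_db = error_db(test_signal, straight_wire(test_signal, 2.0), 2.0)
real_db = error_db(test_signal, slightly_nonlinear(test_signal, 2.0, 0.001), 2.0)

print("straight wire with gain:", ideal_db, "dB")
print("slightly nonlinear:     ", real_db, "dB")
```

The ideal case has no error at all, so its figure is minus infinity. The nonlinear case gives a finite negative number, and the argument in this thread is about how low that number has to be before nobody can hear it.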

Groucho said:It is a perennial dilemma in discussions of this sort: state that a system is linear and open oneself up to the 'gotcha' that a real world distortion level of -10000000dB means it is not linear hence the argument is invalidated. Or to pre-empt the point with a qualification such as "to all intents and purposes", "not literally" etc. in which case one invokes the 'gotcha' oneself.

Groucho said:A real world amplifier does, indeed, distort the signal (as does the very air between speakers and ears) and to a higher level when driving a difficult load. It is a question of being sensible about what constitutes audible distortion, and also doing something about it. I do know that active speakers greatly reduce the load on the amplifier, so my system is active. Is yours? If not, why not?

Amirm said:I hear you but it seems that a bunch of us are arguing with 853guy, which seems a bit unfair. Let's take it a bit easy guys.

Phelonious Ponk said:I’m still trying to ascertain 853guy's position. When I do, then maybe I'll argue with him. But I'll be gentle.

Maybe we don’t see the world the same way. I consider “fidelity/accuracy/truth to the recording” to be neither ideologically robust nor conceptually possible, and for some of the reasons I’ve mentioned in this thread. I consider sine waves to be incredibly useful as tools for understanding electro-mechanical phenomena, but not to be wholly equivalent with the musical waveforms of a time-based art form, which is problematic in light of the fact that there is no perfectly precise measurement of time. I consider calling something “linear” when it is not to be a form of exaggeration, and intellectually dishonest. I consider a reductionist methodology for choosing components to contain little utility value apropos a mechanism designed primarily to elicit emotions in the listener. Most of all, I consider progress to be non-linear in almost all spheres of life. That is: For every breakthrough and improvement we make in one domain, very often there is a tradeoff in another.

Let me encourage you - again - to ask someone else, other than me, who actually designs and implements concepts regarding audio components (many of whom frequent this very site), rather than persist with an assumption that they “must” engage in a process that appears to offend your sensibilities.

I don't need to ask; I know if they are competent engineers they measure. And I know they listen. Listening doesn't "offend my sensibilities," it is necessary as well.

I’m not asking anyone to believe anything. I have little interest in “proving” something, and zero interest in belief.

If I'm not mistaken, the thread is titled “Introspection and hyperbole control”. Stating a system is linear when it cannot be, or that a component could have a “real world distortion level of -10000000dB” seems a little like hyperbole to me. However, it’s fully possible I may have a different perspective than others on what is hyperbole and what is not.

I don't think there is anyone here who doesn't understand that no system can be perfectly linear, which is why I think everyone here understands how that term is used when discussing audio.

Tim

Not only that, we lose a whole bunch and gain some new ones, too. Win!

Nine Inch Nails “Satellite” Audiophile Master 24/48 WAV

View attachment 22195

Nine Inch Nails “Satellite” Audiophile Master 16/44.1 192 Kbps MP3

View attachment 22196

amirm said:This is all unrelated to the point I was making. Every lossy audio codec has three stages:

amirm said:You can delete #2 and what you get back is what you put in. This demonstrates that individual frequencies do make up your music which was the discussion at hand. Not what lossy compression does to audio (i.e. #2)….

…Anyway, the theory of decomposition of music into frequency domain and a collection of individual frequencies cannot be doubted. You just used it yourself in that spectrogram.

amirm said:Take any music encoder, AAC, MP3, WMA, etc… What comes out of that system is clearly music which demonstrates that decomposition into individual frequencies works as theory proves it does.

amirm said:So if you are going to complain about loss of fidelity in lossy codecs, you need to show the results of a listening test. Unfortunately the vast majority of the population, including audiophiles, fail such tests. Which is as it should be because the lossy codec "knows" what it can take out that should not be audible. Therefore the odds are against most people hearing such massive data reduction (as much as 90% thrown away).
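As a sanity check on the "as much as 90%" figure, here is the arithmetic for the two formats named earlier in the thread, a 24/48 WAV and a 192 kbps MP3, plus 16/44.1 PCM for comparison. It assumes two-channel (stereo) audio and uncompressed PCM for the source, neither of which is stated in the thread. The function names are made up for illustration.

```python
def pcm_kbps(bit_depth, sample_rate_hz, channels=2):
    """Bitrate of uncompressed PCM in kbps (stereo assumed by default)."""
    return bit_depth * sample_rate_hz * channels / 1000.0

def fraction_discarded(source_kbps, coded_kbps):
    """Share of the source bitrate that the coded stream no longer carries."""
    return 1.0 - coded_kbps / source_kbps

MP3_KBPS = 192.0

master_kbps = pcm_kbps(24, 48000)  # the 24/48 WAV
cd_kbps = pcm_kbps(16, 44100)      # 16/44.1 PCM

for label, source in (("24/48 PCM", master_kbps), ("16/44.1 PCM", cd_kbps)):
    pct = 100.0 * fraction_discarded(source, MP3_KBPS)
    print(f"{label}: {source:.1f} kbps -> {MP3_KBPS:.0f} kbps, {pct:.1f}% discarded")
```

Under those assumptions, the reduction is about 91.7% relative to 24/48 PCM and about 86.4% relative to 16/44.1 PCM.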

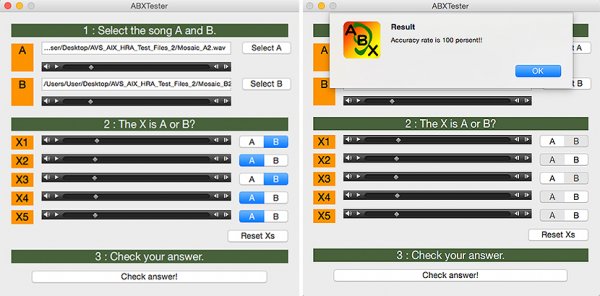

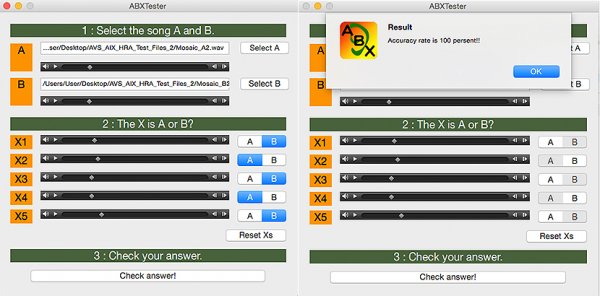

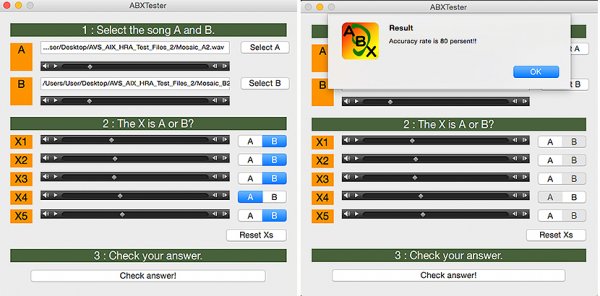

If you doubt what I just said, try to see if you can pass these tests: http://www.whatsbestforum.com/showth...l=1#post279463

Not trying to nitpick. Just trying to better understand where you are coming from. But, also to disagree on that one paragraph in particular.

I am not sure why you reject “fidelity/accuracy/truth to the recording” as lacking ideological robustness. Yes, I agree there are practical problems in knowing exactly what sounds were recorded relative to the live source, starting with the mics. That may be only approximate and subject to skillful choices by recording engineers. But, I think the concept of faithfulness and fidelity to the source is still a good one at every single intermediate step in the recording and reproduction chain, even if we cannot perfectly achieve it in practical terms. Nothing is perfect, but what are the ideals we strive for? And, if fidelity to the input source, step by step for each component, is not the ideal, I am not clear from your posts what the replacement ideal should be or why that would be more robust.

Yes, there is no perfectly precise measure of time. There is no perfectly precise measurement of anything. But, when do the imperfections in precision become undetectable by humans? Surely, human perception is not infinite in its powers of resolution. In audio, there is a threshold of audibility, which can be scientifically arrived at through experimentation. That science, of course, is debated, peer reviewed, experimentally duplicated and verified, so it evolves over time, leading to more accurate and more precise determinations. Eventually, audio equipment becomes close to or beneath the threshold of audibility so that it is "good enough". I am not saying we are there yet in all instances, even with state of the art equipment. But, we have gotten much closer in my lifetime, even noticeably to me within the last decade, as far as I can tell from measurements I see and from my own subjective impressions.

We can further debate whether audio recording and reproduction is "designed primarily to elicit emotions in the listener". Which emotions specifically at what point in a recording and how do we know whether the system is accurately delivering them, if that is indeed its true design objective?

Of course, I disagree. Audio recording and reproduction systems are designed to capture and transmit sound as accurately as possible, ideally to get out of the way of the music, speech or other sounds. The emotion is conveyed by the music or artistic content at a higher psychic level than the sound that carries that content to our lower level sensory inputs, our ears.

The sound is merely the highway carrying the vehicles containing the artistic message and emotions. The perception of the sound is also more consistent, though not identical, from person to person, whereas the perception of the artistry and emotional responses vary all over the place, even in the same person on repeated listening. Many totally external factors affect our moods, emotions and receptivity to the artistic message. And, those responses to the art and the emotions they trigger do not necessarily need first rate sound transmission in order to be appreciated and enjoyed. Though, I grant you, the best and most involving experiences of the art and emotion of music are achieved with the best sound. I would not be here if I did not believe that.

Let me explain the argument again: all lossy codecs rely on representation of single tone sine waves in each frame of audio. The bit stream that they transmit consists of the coefficients for those sine waves (really cosine but that is not important here). That they sound completely like music with all of its complexities proves that you can indeed represent music in either the time domain with its complex visual shape, or in the frequency domain with an orderly set of frequencies.

Actually Amir, it was you who introduced lossy codecs into the discussion, not me (previous to your post, no one mentioned lossy codecs), unrelated to the point I was making, which was to simply suggest that perhaps steady-state sine waves, defined as they are by curves that are smooth and repetitive in oscillation, are not wholly equivalent to musical waveforms, which are anything but smooth and repetitive.

INTROSPECTION AND HYPERBOLE CONTROL VIOLATOR OF THE MONTH: (...)

I could go on and on, but you probably understand my disappointment by now.

... The discussion at hand was to compare the symmetrical nature of sine waves with the asymmetrical nature of a musical waveform. Furthermore, it was not to suggest that a musical waveform could not be deconstructed into a collection of individual frequencies, but that music, even when analysed as either a waveform or as a spectrogram, is more than just its constituent frequencies, given that music is always amplitude and pitch over time and constantly modulating. ...

Dear Francisco, It is indeed interesting that we have starkly different reactions to the style of his review.
