> Amir,
>
> You present data on the FR of just four speakers.

I posted that because the data is public and easily referenced. The actual research has a far larger base. From Dr. Olive's AES paper:

A Multiple Regression Model for Predicting Loudspeaker Preference Using Objective Measurements: Part II - Development of the Model

"A new model is presented that accurately predicts listener preference ratings of loudspeakers based on anechoic measurements. The model was tested using 70 different loudspeakers evaluated in 19 different listening tests. Its performance was compared to 2 models based on in-room measurements with 1/3-octave and 1/20-octave resolution, and 2 models based on sound power measurements, including the Consumers Union (CU) model, tested in Part One. The correlations between predicted and measured preference ratings were: 1.0 (our model), 0.91 (in-room, 1/20th-octave), 0.87 (sound power model), 0.75 (in-room, 1/3-octave), and -0.22 (CU model). Models based on sound power are less accurate because they ignore the qualities of the perceptually important direct and early reflected sounds. The premise of the CU model is that the sound power response of the loudspeaker should be flat, which we show is negatively correlated with preference rating. It is also based on 1/3-octave measurements that are shown to produce less accurate predictions of sound quality."
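The correlation figures in that abstract measure how closely each model's predicted ratings track the listeners' actual ratings: near 1.0 means the model ranks speakers the way listeners did, near 0 means it tells you little, and a negative value means it tends to rank them backwards. Here is a minimal sketch of how such a number is computed, assuming the figures are ordinary Pearson correlation coefficients (the abstract does not name the statistic). The ratings below are invented for illustration and are not data from Olive's tests.

```python
import math

def pearson_r(predicted, measured):
    """Pearson correlation coefficient between two equal-length sequences.

    Assumption: this is the statistic behind the quoted correlations;
    the abstract itself does not say so.
    """
    if len(predicted) != len(measured) or len(predicted) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    n = len(predicted)
    mean_p = sum(predicted) / n
    mean_m = sum(measured) / n
    # Covariance and variances (the 1/n factors cancel, so they are omitted).
    cov = sum((p - mean_p) * (m - mean_m) for p, m in zip(predicted, measured))
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    var_m = sum((m - mean_m) ** 2 for m in measured)
    return cov / math.sqrt(var_p * var_m)

# Hypothetical ratings for five speakers, invented for illustration only.
measured = [6.2, 5.1, 4.0, 3.3, 2.5]  # mean listener preference rating
model_a = [6.0, 5.3, 3.9, 3.4, 2.6]   # a model that tracks listeners closely
model_b = [4.0, 5.5, 3.0, 5.0, 4.2]   # a model that barely tracks them

print(round(pearson_r(model_a, measured), 3))
print(round(pearson_r(model_b, measured), 3))
```

Note that a high correlation says the model orders the speakers the way the listening panel did on average; it does not mean every individual listener agreed.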

> There are many known factors that can define the sound quality of a speaker: distortion, dynamic compression, box resonances, delayed resonances.

Those are certainly factors, and I mentioned some of them earlier (e.g., dynamic range and bass extension). Can we agree that the 70 speakers tested varied widely in these metrics? Yet, with some small exceptions, those differences could not trump deficiencies in a speaker's frequency response.

To be sure, Harman as a company pays huge attention to these other factors too. It is just that they start with the right fundamentals: make the total response that reaches the listener (the sum of the direct sound and some of the reflected energy) smooth (not necessarily flat!). Get that right and then focus on the other issues you mention.

> Some manufacturers consider these parameters more important than FR.

They do, but then we are at the mercy of a "gray-haired" designer who thinks he is right. Why not put forward some data showing that if you took 10 of us together, we would prefer that design priority over Harman's findings? Why not publish an AES or ASA paper that says Harman is wrong?

> What can prove to a non-expert in speaker design that just the FR and dispersion are the two critical factors for my audiophile happiness?

If large-scale listening test data, combined with measurements that correlate with it, doesn't do that, then I think we are saying we would rather flip a coin to decide who is right. Who would buy an amp that has a 3 dB dip from 2 kHz to 3 kHz over one that doesn't? And who couldn't hear that dip if we took a speaker with flat response and subjected it to the same thing?
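To put the 3 dB figure in perspective: a 3 dB cut leaves about 71% of the original sound pressure (and about half the acoustic power) across that band. The sketch below applies such a dip to an idealized flat response. The frequency points and the brick-wall band edges are simplifying assumptions for illustration; a real dip would have sloped skirts.

```python
def db_to_amplitude(db):
    """Convert a level change in dB to a linear amplitude (pressure/voltage) ratio."""
    return 10 ** (db / 20)

def apply_band_dip(freqs_hz, levels_db, lo_hz, hi_hz, dip_db):
    """Return a copy of levels_db with dip_db subtracted wherever lo_hz <= f <= hi_hz.

    Assumption: brick-wall band edges, chosen only to keep the illustration simple.
    """
    return [lvl - dip_db if lo_hz <= f <= hi_hz else lvl
            for f, lvl in zip(freqs_hz, levels_db)]

# Hypothetical measurement points on an idealized flat (0 dB) response.
freqs = [500, 1000, 2000, 2500, 3000, 4000, 8000]
flat = [0.0] * len(freqs)

dipped = apply_band_dip(freqs, flat, 2000, 3000, 3.0)
print(dipped)                          # the 2 kHz to 3 kHz points now sit 3 dB down
print(round(db_to_amplitude(-3.0), 3))  # linear pressure ratio inside the dip
```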

To be sure, there is absolutely room for additional factors that affect speaker performance. There is a reason Harman builds a range of products using completely different technologies. But they all share a principle of goodness. Of note, this change did not come easily to Harman. With different divisions all thinking they had the answer (think JBL and Revel), getting everyone to agree was hard. But they eventually came around.

It is also true that Dr. Toole perhaps pushes the importance of this idea a bit too far. I think that is necessary to get the point across because there is so much disbelief out there. I have argued over the validity of their double-blind tests with some of the biggest champions of double-blind testing, such as Ethan and Arny. Both seemed to think you don't need double-blind tests of speakers because the differences are too large! Once we are past that, though, and have let go of our assumptions, it is OK to deviate somewhat from this thinking. Surely the person at Harman sweating over the new tweeter thinks beyond just meeting the frequency response goal.

> Did you hear about the Frog and the Scientist?
>
> Unless we have all the details, we can never be sure of the conclusions.

The question then becomes the one I put to Myles: do we give up because we are not sure? At work, tons of speakers come through our shop for evaluation. Is it an accident that they can't outperform the Revels when we put them side by side? My team is always ecstatic about these new brands, but as soon as we A/B them, it becomes clear that if you use proper research, you get a better product. Again, there are ways to beat Revel speakers. If neither cost nor size is an object, you can do things you can't do in a diminutive Revel speaker. But imagine how much better that other speaker would be if its designer had also attended to the other factors that matter.

> As you participated in the tests and have access to privileged Harman information, perhaps things are clearer to you. For example, I still have doubts even about the interpretation of your first bar graph: given that N people participated in the tests, does it imply that X/N preferred type x? This seems to me like scientific market research, not in-depth audio science research.

If you are asking whether 100% of the people agreed that one speaker was the best, no. As the data above shows, there is high correlation but not absolute agreement. Whether that is because people are sometimes poor judges of quality, or because other factors are in play, is hard to say. What is not hard to say is that those factors do not in any way trump the research results presented. If you deviate from them, you had better have a darn good reason and research to back your alternative approach. A glossy brochure and impressive-looking speakers don't do it.

> BTW, I have the same doubts about most of the scientific data presented by many other manufacturers; Harman is more exposed to my criticism because they use it openly in their marketing.

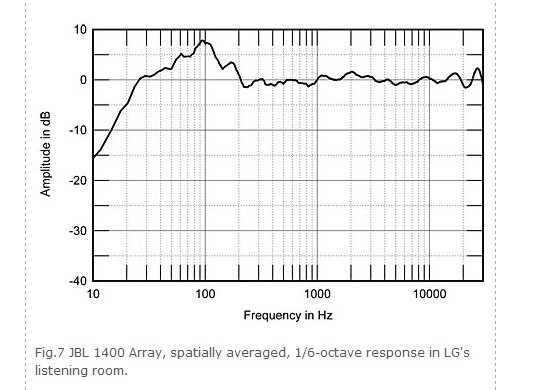

There is no marketing here. If anything, Harman's research could be used to hurt their business badly. Dr. Toole, in his classes and books, talks about $500 speakers that follow this scheme. Yet the company makes $25K speakers in the Revel line and speakers costing up to $65K in JBL Synthesis. What separates these are some of the factors you mention. The JBL has dynamics that will literally bring your ceiling down if you are not careful. And the lack of distortion in the Salon 2 is remarkable. Sure, there is some implicit marketing here. I won't deny that. But I think one would be ignoring very good data to hang one's hat on this notion.

> Things are also not easy because there is a large difference between science and technology, and marketing mixes them up most of the time.

And what is the mix with the high-end companies that Myles listed? I would say the percentage of marketing is off the charts there. They use technical buzzwords, to be sure, but that is where it ends. Ultimately, they show no objective data that proves the efficacy of their designs. Surely, if the goal is better results with their speakers, they would show some comparative listening tests that prove the point. But they show none.

Let me finish by saying that I am not trying to sell you on Harman as a company or on the products it manufactures, just on the notion that we are not lost in the woods, trying to interpret every manufacturer's claims independently. We do have a measure of goodness here, so let's use it to evaluate products and see whether, in our own listening, they correlate. I have done this independently of Harman's research. I have gone with speakers that had the right buzzwords and sounded convincing as a better approach to speaker design. Then I had my nose bashed in when I could not convince customers that they were better. In our case, we had them side by side behind the curtain in our main theater, and with the flip of a switch, we could go from the JBL to the other brand. And the other brand lost, despite being more expensive.