Sanders Sound Systems - electrostatic

- Thread starter kach22i


Another great post.

But could you explain how you proved that "No human can hear ... " and what exactly you mean by a "properly produced digital PCM recording"? CD? 24-bit/192 kHz? Apologies if you explained it in another topic and I missed it. But stated exactly as written, without justification, it seems to distort the line of argument you were presenting so well.

Disclaimer 2 - I am a subscriber of the Tape Project

Disclaimer 3 - I read all of TELDC and even built a small panel in the 90's! Great fun.

You ask two excellent questions. First, you asked exactly what I mean by a "properly produced" digital PCM recording and wanted more details. The main issue with making digital (or analog) recordings from analog sources is the recording level. You must optimize the recording level so that the S/N (signal-to-noise ratio) is maximized.

The "signal" is the music in the form of an electrical waveform. You want the signal to be much louder than the noise so that you have a silent background.

You can only make the signal so loud before you reach the limit of the medium to record it without distortion. This upper limit is called "saturation." It is similar to "clipping" an amplifier.

When you reach saturation on analog magnetic tape, you get a "soft", rather gradual increase in distortion. It is possible to record a little above the saturation level before you start to hear obvious distortion. In other words, analog is somewhat forgiving when you reach saturation and the maximum recording level.

But if you reach saturation in a digital system, you immediately get massive amounts of "hard" distortion. You are actually running out of bits to record on, and the result is a harsh, crackling sound that ruins the recording. So when recording on digital media, you must be extremely careful never to exceed the maximum recording level.
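To make the soft-versus-hard contrast concrete, here is a minimal sketch. It assumes a `tanh` curve as a crude stand-in for tape saturation (real tape behaviour is more complicated) and models the digital limit as a hard clip at full scale (1.0). The function names are illustrative only.

```python
import math

FULL_SCALE = 1.0  # digital full scale: the largest sample value the format can store

def digital_clip(x: float) -> float:
    """Hard clipping: perfectly linear right up to full scale, then flat."""
    return max(-FULL_SCALE, min(FULL_SCALE, x))

def tape_like_saturation(x: float) -> float:
    """Soft saturation, crudely modelled with tanh: the curve bends gradually."""
    return math.tanh(x)

# Sweep the input level from well below to well above full scale.
for level in (0.1, 0.5, 0.9, 1.0, 1.2, 1.5, 2.0):
    print(f"in={level:3.1f}  digital={digital_clip(level):.3f}  tape-like={tape_like_saturation(level):.3f}")
```

The hard-clipped output stops dead at 1.000, so the tops of a waveform are sliced flat, which is where the harsh distortion comes from. The `tanh` curve starts bending well before full scale but never produces a sharp corner.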

All recording media have an inherent noise floor. The noise floor is much lower in a digital system than in an analog one. But if you record at too low a level in either format, the music will be buried in the noise. Imagine what it would sound like if the noise (mainly hiss) were as loud as the average music level. This would be totally unacceptable.

The S/N is the maximum recording level compared to the noise floor. In a top-quality analog magnetic tape system, the S/N is about 72 dB. In an LP, it is about 40 dB. In a 16-bit PCM digital system, it is about 92 dB. In a 24-bit digital system, it is around 140 dB, although the analog circuits that are required on either side of the digital converters will limit that to around 120 dB at best.
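For reference, the textbook S/N of an ideal N-bit quantizer driven by a full-scale sine wave is 6.02 × N + 1.76 dB, which gives about 98 dB for 16 bits and 146 dB for 24 bits. Real-world figures such as those quoted above typically come in somewhat lower. The sketch below (function names are hypothetical) checks the formula by quantizing a test tone and measuring the rounding error directly; it assumes plain rounding with no dither.

```python
import math

TONE = (math.sqrt(5) - 1) / 20   # ~0.0618 cycles/sample; irrational, so samples never repeat

def ideal_snr_db(bits: int) -> float:
    """Textbook S/N of an ideal N-bit quantizer driven by a full-scale sine wave."""
    return 6.02 * bits + 1.76

def measured_snr_db(bits: int, n: int = 50_000) -> float:
    """Quantize a full-scale sine by rounding and measure the resulting S/N."""
    step = 2.0 / 2 ** bits                   # size of one quantization step over a -1..+1 range
    signal_power = noise_power = 0.0
    for i in range(n):
        x = math.sin(2 * math.pi * TONE * i)
        q = round(x / step) * step           # ideal rounding quantizer (no dither, no clipping)
        signal_power += x * x
        noise_power += (q - x) ** 2
    return 10 * math.log10(signal_power / noise_power)

for bits in (12, 16, 20, 24):
    print(f"{bits}-bit: ideal {ideal_snr_db(bits):.1f} dB, measured {measured_snr_db(bits):.1f} dB")
```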

The dynamic range of a symphony orchestra is approximately 80 dB. Since you can't cram 80 dB inside the 72 dB limit of an analog recorder, you can see that it is impossible to have a truly quiet background on an analog recording.

By comparison, the 80 dB dynamic range of a symphony orchestra will easily fit inside the 92 dB S/N of a 16-bit digital PCM recording, which will place the noise 12 dB below the quietest sound of a symphony orchestra. This is quiet enough to give a subjectively silent background.

But 92 dB is just barely enough. You have to optimize the recording level to center the dynamic range of the music accurately in the S/N "window", so that you have a silent background without running into hard distortion on musical peaks.

Because the margin of error is quite small in a 16-bit system, most modern digital mastering recorders use 20 or 24 bits. This gives the operator a lot more leeway with the recording levels. It also makes post-mastering processing easier and less critical. Once all the mixdowns are made, the final product can then be accurately placed on a 16-bit CD without problems.
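The "window" arithmetic above can be written out as a small sketch. The S/N and orchestra figures are the ones given in this post; the `headroom_db` parameter (a safety gap an operator might leave below the maximum level) is an added assumption, included to show why 16 bits leaves little margin for error.

```python
def noise_margin_db(system_snr_db: float, music_range_db: float, headroom_db: float = 0.0) -> float:
    """How far the noise floor sits below the quietest passage.

    system_snr_db  -- maximum recording level minus noise floor of the medium
    music_range_db -- loudest minus quietest passage of the performance
    headroom_db    -- hypothetical safety gap left below the maximum level
    A negative result means the quietest music is buried in the noise.
    """
    return system_snr_db - music_range_db - headroom_db

ORCHESTRA_DB = 80  # approximate dynamic range of a symphony orchestra (figure from the post)

for name, snr in (("analog tape", 72), ("16-bit PCM", 92), ("24-bit via analog stages", 120)):
    for headroom in (0, 6):
        margin = noise_margin_db(snr, ORCHESTRA_DB, headroom)
        print(f"{name:26s} headroom={headroom} dB  margin={margin:+} dB")
```

With 6 dB of headroom, the 16-bit margin halves to 6 dB, while the 24-bit chain still has 34 dB to spare.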

It is also worth noting that virtually all commercial recordings have had their dynamic range compressed. That means that they have been modified so that the quiet sections are made louder and the loud sections are made quieter. Few commercial recordings today have a dynamic range of even 30 dB. This is one of the reasons that modern recordings lack realistic sound.
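A minimal sketch of the idea, using the textbook static compressor curve (threshold, ratio, makeup gain) and ignoring the attack and release timing that real mastering compressors add. The settings below are hypothetical, chosen only to show how an 80 dB range shrinks.

```python
def compress_db(level_db: float, threshold_db: float = -40.0,
                ratio: float = 4.0, makeup_db: float = 0.0) -> float:
    """Static compressor curve: above the threshold, output rises only 1/ratio as fast.

    makeup_db is applied afterwards to bring the peaks back up, which is what
    makes the quiet passages louder relative to the original.
    """
    if level_db > threshold_db:
        level_db = threshold_db + (level_db - threshold_db) / ratio
    return level_db + makeup_db

quiet, loud = -80.0, 0.0   # dBFS levels of the quietest and loudest passages (hypothetical)
for makeup in (0.0, 30.0):
    q, l = compress_db(quiet, makeup_db=makeup), compress_db(loud, makeup_db=makeup)
    print(f"makeup {makeup:4.1f} dB: quiet {q:6.1f} dBFS, loud {l:6.1f} dBFS, range {l - q:.1f} dB")
```

With these settings the range drops from 80 dB to 50 dB; with 30 dB of makeup gain the peaks are back at 0 dBFS and the quietest passage has been raised from -80 to -50 dBFS.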

The main reason that compression is used is to make recordings more listenable in the relatively noisy environment of an automobile, where most music is listened to today. If the full dynamic range were used, quiet passages of music could not be heard over the noise, and loud passages would make it impossible to have a conversation with a passenger or (god forbid) on your cell phone.

This compression dramatically reduces the S/N demands on the medium. This makes it possible to use fewer bits and still have an acceptable S/N. This is one of the techniques used by the MP3 format, which is designed to greatly reduce the amount of data that must be handled.

In short, if your digital recording only involves making copies of music on albums, the S/N will be non-critical and you can easily get the correct recording levels. But if you are recording live musicians, you will have to be extremely careful to get the loudest sections of the music as close to saturation as you can, without exceeding it.
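One way to put a number on "as close to saturation as you can" is to measure the peak of a test take in dBFS (decibels relative to digital full scale) and work out how much gain would bring it to a chosen target. The sketch below assumes a -1 dBFS target as a safety margin, since a live performance can peak higher than the rehearsal; the sample values and function names are hypothetical.

```python
import math

def peak_dbfs(samples) -> float:
    """Peak level in dB relative to digital full scale (1.0 = 0 dBFS)."""
    peak = max(abs(s) for s in samples)
    return float("-inf") if peak == 0 else 20 * math.log10(peak)

def gain_to_target_db(samples, target_dbfs: float = -1.0) -> float:
    """Gain change (dB) that would put the loudest sample at target_dbfs."""
    return target_dbfs - peak_dbfs(samples)

rehearsal = [0.02, -0.31, 0.35, -0.12]        # hypothetical samples from a test take
print(f"peak {peak_dbfs(rehearsal):.1f} dBFS, raise level by {gain_to_target_db(rehearsal):.1f} dB")
```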

Recording levels only apply when you are copying analog information to a digital format. If you are copying a digital source digitally, there are no recording levels involved. The copying process only transfers numbers in the form of bits. This is an "all or none" process, so there is nothing you can or need to do about recording levels.
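Because a digital copy is just numbers, you can verify it bit for bit by comparing a cryptographic hash of the audio data. This sketch uses in-memory byte buffers as hypothetical stand-ins for a track's PCM data; with real files you would hash the audio payload, since container metadata such as tags may legitimately differ.

```python
import hashlib

def fingerprint(data: bytes) -> str:
    """SHA-256 digest of raw audio bytes: identical bits give identical digests."""
    return hashlib.sha256(data).hexdigest()

original = bytes(range(256)) * 1000     # hypothetical stand-in for a track's PCM data
copy = bytes(original)                  # a digital copy: the same bits
damaged = bytearray(original)
damaged[500] ^= 1                       # flip a single bit

print(fingerprint(original) == fingerprint(copy))            # True
print(fingerprint(original) == fingerprint(bytes(damaged)))  # False
```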

Now let's turn to a discussion of which type of digital medium you should use. You currently have several options. These fall into two broad categories, which are PCM and MP3. There is also SACD, but since it has been abandoned by the industry and there are no consumer-grade SACD recorders available, I will not discuss it here.

Linear PCM (Pulse Code Modulation) is what is used on a CD. This is currently the highest quality type of digital recording available.

PCM is available in a variety of formats, which are defined by their number of bits and sampling rates. The lower the number of bits and the lower the sampling rate, the less data must be stored. So you will get longer playing times with fewer bits and lower sampling rates.
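The storage cost of each PCM format is simply bits × sampling rate × channels. This sketch assumes stereo, raw PCM with no file-container overhead, and megabytes of 10^6 bytes.

```python
FORMATS = {            # name: (bits per sample, sampling rate in Hz); stereo assumed
    "12/32":   (12, 32_000),
    "16/44.1": (16, 44_100),
    "16/48":   (16, 48_000),
    "24/96":   (24, 96_000),
    "24/192":  (24, 192_000),
}

def pcm_bitrate(bits: int, rate_hz: int, channels: int = 2) -> int:
    """Raw PCM data rate in bits per second."""
    return bits * rate_hz * channels

def megabytes_per_hour(bits: int, rate_hz: int, channels: int = 2) -> float:
    """Storage needed for one hour of raw PCM, in megabytes (10**6 bytes)."""
    return pcm_bitrate(bits, rate_hz, channels) * 3600 / 8 / 1_000_000

cd = pcm_bitrate(*FORMATS["16/44.1"])
for name, (bits, rate) in FORMATS.items():
    bps = pcm_bitrate(bits, rate)
    print(f"{name:8s} {bps / 1000:7.1f} kbit/s  {megabytes_per_hour(bits, rate):7.1f} MB/hour  {bps / cd:4.2f} x CD")
```

On these assumptions 12/32 needs about 54% of the CD's data rate (roughly half), while 24/96 needs about 3.3 times as much and 24/192 about 6.5 times as much.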

As I discussed in a previous post, the number of bits defines the S/N (a bit is worth approximately 6 dB of S/N), and the sampling rate defines the highest frequency that can be recorded. The sampling rate must be twice the highest frequency, plus a little more to handle the required anti-aliasing filter.
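The "twice the highest frequency" rule is the Nyquist limit: a sampling rate can represent frequencies only up to half its value, and anything above that folds back (aliases) into the audio band. This is why the anti-aliasing filter is needed; at 44.1 KHz, the gap between 20 KHz and the 22.05 KHz limit is the room that filter works in. The function names below are illustrative.

```python
import math

def nyquist_hz(rate_hz: float) -> float:
    """Highest frequency a given sampling rate can represent (half the rate)."""
    return rate_hz / 2

def alias_hz(freq_hz: int, rate_hz: int) -> int:
    """Frequency at which a tone appears after sampling (folding about rate/2)."""
    f = freq_hz % rate_hz
    return rate_hz - f if f > rate_hz / 2 else f

RATE = 44_100
print(nyquist_hz(RATE))          # 22050.0
print(alias_hz(30_000, RATE))    # 14100

# Without an anti-aliasing filter, a 30 kHz tone yields the same samples as a 14.1 kHz tone.
hi = [math.cos(2 * math.pi * 30_000 * n / RATE) for n in range(1000)]
lo = [math.cos(2 * math.pi * 14_100 * n / RATE) for n in range(1000)]
print(max(abs(a - b) for a, b in zip(hi, lo)) < 1e-9)   # True
```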

The most common PCM format is the CD, which uses a linear 16/44.1 (16-bit/44.1 KHz sampling) format. It will produce absolutely linear frequency response from 20 Hz to 20 KHz, a S/N of 92 dB, and virtually unmeasurable distortion, which is good enough to produce subjectively perfect recordings.

For less critical applications such as recording voice, FM radio, and yes, LPs, you can use the non-linear 12/32 PCM specification. This will record up to 15 KHz (which is the limit of FM and LP high-frequency response) and can produce a S/N of 70 dB. Since FM and LPs have a S/N of around 40 dB, a 70 dB recording system will be sufficient to avoid adding any noise to the recording.

Therefore 12/32 PCM is good enough that most listeners cannot tell any difference between it and the source when copying commercial source material. But you can hear a difference when recording live music with it.

Actually, the S/N of 12/32 is subjectively improved by using noise shaping to move the critical midrange noise frequencies up into the supersonic region. This reduces the apparent noise that a listener hears, so that the S/N effectively becomes around 80 dB.

I use the word "subjectively" because test instruments will measure the total noise without regard to its frequency. So instruments will not be tricked by noise shaping and will accurately report the noise that is present. But noise shaping remains a useful technique for improving the human listening experience.
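The simplest textbook form of noise shaping is first-order error feedback: each sample's rounding error is subtracted from the next sample before it is rounded, which pushes the noise toward high frequencies. Commercial schemes use more elaborate, psychoacoustically weighted filters, so treat this only as an illustration. The random "music" signal and the moving-average low-pass (standing in for the band where the ear is most sensitive) are assumptions. The result matches the point above: total measured noise actually goes up, while noise in the low band goes down.

```python
import math
import random

def requantize(signal, bits, noise_shaping=False):
    """Round each sample to a coarser grid, optionally with first-order error feedback.

    With feedback, the previous rounding error is subtracted before rounding, so the
    total error becomes e[n] - e[n-1]: a high-pass (rising) noise spectrum.
    """
    step = 2.0 / 2 ** bits
    out, prev_err = [], 0.0
    for x in signal:
        v = x - prev_err if noise_shaping else x
        y = round(v / step) * step
        if noise_shaping:
            prev_err = y - v
        out.append(y)
    return out

def mean_power(seq):
    return sum(s * s for s in seq) / len(seq)

def moving_average(seq, width):
    """Crude low-pass filter, standing in for the ear's most sensitive band."""
    return [sum(seq[i:i + width]) / width for i in range(len(seq) - width + 1)]

rng = random.Random(1)                                      # fixed seed: repeatable run
music = [rng.uniform(-0.5, 0.5) for _ in range(20_000)]     # hypothetical stand-in signal

for shaped in (False, True):
    noise = [y - x for x, y in zip(music, requantize(music, 8, noise_shaping=shaped))]
    total = 10 * math.log10(mean_power(noise))
    low = 10 * math.log10(mean_power(moving_average(noise, 16)))
    print(f"shaping={shaped!s:5}  total noise {total:6.1f} dB  low-band noise {low:6.1f} dB")
```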

Many professional PCM recorders also give you the option of using noise shaping with the higher-resolution formats. For example, some recorders offer noise shaping that can subjectively improve the S/N of 16-bit recording beyond its natural 92 dB limit.

Yet another PCM format is 16/48. This increases the high-frequency limit to 22 KHz from the CD's 20 KHz. "Super Bit Mapping" (noise shaping) is often used with this format to increase the S/N to around 100 dB. It is commonly used for live recording.

Note that this format is incompatible with CDs. So you are better off using 16/44.1 so that you can copy your music digitally to CD-R if you wish.

You can convert 48 KHz sampling to 44.1, but there is some slight loss of quality when you do. So I recommend that you use 44.1 KHz sampling for compatibility reasons.
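Part of why 48 to 44.1 conversion is not trivial is the ratio: 44,100/48,000 reduces to 147/160, so output samples almost never line up with input samples and new values must be computed in between. The sketch below uses naive linear interpolation purely to illustrate that; it is an assumption for demonstration, not how good converters work (they use band-limited, polyphase filtering), and it is one simple way to see how quality can be lost.

```python
from fractions import Fraction

def conversion_ratio(src_hz: int, dst_hz: int) -> Fraction:
    """Exact rational resampling ratio (output samples per input sample)."""
    return Fraction(dst_hz, src_hz)

def resample_linear(samples, src_hz: int, dst_hz: int):
    """Naive linear-interpolation rate converter (illustration only, no anti-alias filter)."""
    n_out = len(samples) * dst_hz // src_hz
    out = []
    for k in range(n_out):
        pos = k * src_hz / dst_hz            # where output sample k falls on the input grid
        i = int(pos)
        frac = pos - i
        nxt = samples[i + 1] if i + 1 < len(samples) else samples[i]
        out.append(samples[i] * (1 - frac) + nxt * frac)
    return out

print(conversion_ratio(48_000, 44_100))                    # 147/160
print(len(resample_linear([0.0] * 48_000, 48_000, 44_100)))  # 44100
```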

The next PCM format is 24/96. This increases the bits so that the digital S/N is 140 dB, which is way beyond what humans can hear or that analog can match. It is overkill to say the least.

The 96 KHz sampling rate is also overkill as the high frequency limit is increased to 40 KHz, which is twice as high as any human can hear. Some audiophiles believe that frequencies above 20 KHz affect the sound they hear in the range of human hearing. There is no scientific evidence to support their beliefs, but even if they know something that science doesn't, the fact remains that no music microphone will record effectively above 20 KHz.

By using 96 KHz sampling, you are wasting fully half the bandwidth of the system and recording nothing but supersonic noise. What is the purpose of that? There is simply nothing to be gained by using such a high sampling rate and recording at this rate requires the storage of much more data.
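The "wasted bandwidth" can be estimated by measuring how much of the theoretical band (0 to half the sampling rate) lies above 20 KHz. This sketch uses the theoretical limit rather than the slightly lower usable limit after filtering, so it gives a little over half for 96 KHz.

```python
AUDIBLE_LIMIT_HZ = 20_000  # upper limit of human hearing, as used in the post

def ultrasonic_share(rate_hz: float) -> float:
    """Fraction of the recordable band (0 to rate/2) that lies above 20 kHz."""
    nyquist = rate_hz / 2
    return max(0.0, (nyquist - AUDIBLE_LIMIT_HZ) / nyquist)

for rate in (44_100, 48_000, 96_000, 192_000):
    print(f"{rate / 1000:5.1f} kHz: {ultrasonic_share(rate):.0%} of the band is above 20 kHz")
```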

Then there is the 24/192 PCM format. This increases the high frequency limit to 80 KHz. This is even more wasteful of data and just makes it possible to record even more supersonic noise. It makes no sense at all.

So which PCM format should you use? The CD standard of 16/44.1 will make subjectively perfect recordings where you cannot hear any difference between the source and the recording. That is all that is necessary and will require the minimum amount of data storage in the PCM format.

You can also use the 12/32 format for making copies of analog recordings since even its specifications are far better than analog. This will allow you to cut the amount of data storage in half and double your playing time.

If data storage costs are a concern, then you can seriously consider the 12/32 PCM format. However, you can save far more data space while maintaining higher quality sound by using one of the MP3 formats, which I will discuss later in this article.

The 24/96 PCM specification is becoming more popular. I think this is a marketing ploy rather than offering any real improvement in the sound quality. After all, once you have reached the point where you can't hear any difference between the source and the recording, you have perfection. How do you improve on perfection? You really can't. 24/96 requires vastly more data storage than 16/44.1 and although data storage is relatively cheap nowadays, it certainly isn't free.

The cost of data storage and transmission times brings us to the most popular digital format: MP3. MP3 uses complex computer algorithms to reduce the amount of data required. The algorithms are intricate and there isn't room to discuss them fully in this post. But there are three general things that they do to reduce data storage requirements.

First, they compress the dynamic range. Since commercial recordings are all compressed anyway, an MP3 can reduce its own dynamic range by 2 to 4 times without any effect on the recorded sound at all.

The second technique is masking. To explain this technique, imagine a symphony orchestra where all the instruments are playing as loudly as possible.

Now eliminate one violin that is playing quietly amid all this loud sound from the rest of the orchestra. Will you miss the violin? No, you won't, simply because all the loud sound "masks" the sound of the violin so you can't hear it.

MP3 uses this technique to avoid recording some of the low-level material during loud passages. Masking can also be used effectively to "hide" background noise. So masking can improve the S/N.

The third technique is reduction of the high-frequency response. By limiting the recording bandwidth to 15 KHz or less, a lot of data can be saved. Since most of us can't hear above 15 KHz, and analog sources are limited to 15 KHz, this generally does not degrade the subjective quality of the sound.

These and other techniques allow MP3 to record relatively high-quality sound while dramatically reducing the data storage requirements. The use of MP3 is why iPods can store thousands of songs and why you can listen to music over the internet.

There are many levels of MP3 recording as defined by its sampling rate. MP3's sampling rate does not define its high-frequency response as it does in PCM. Its sampling rate defines the number of samples that are processed per second and greatly affects the sound quality. Basically, this boils down to the fact that higher sampling improves the sound at the expense of higher data storage requirements.

MP3 sampling rates of 64 KHz and below compromise the quality of the sound. You usually can hear a difference between the source and the recording. The type of music and its inherent amount of compression has a big influence on whether you can hear the difference. But I do not consider 64 KHz MP3 recordings to be "high fidelity."

However, when you get to 128 KHz and higher sampling, it becomes extremely difficult to hear any difference between the source and the recording. In my tests with groups of "golden ear" audiophiles, most could not tell whether the source or the recording was playing when using 128 KHz MP3.

This is highly dependent on the source material. Typical "pop" recordings with lots of percussive sounds sounded perfect. The most critical material was quiet orchestral works. A sustained piano note was the most taxing test, and most listeners could hear a slight difference at 128 KHz sampling.

Some of the better online music sources like pandora.com give you the option of selecting MP3 at 192 KHz. This sounds just as good as CD, and no listener could hear any difference between it and the source in my tests.

So what format should you use? For audiophile use, I would suggest 16/44.1 PCM because this is the one used for CDs. You can record on any digital equipment (I suggest a flash recorder) that you can use for your own listening. But you can also easily make CD-Rs for sharing with friends or using in your car. The quality will be flawless, so there is really no need to go to 24/96.

But MP3 has big advantages with data storage and future compatibility. You can expect to record about 10 times more material on a given amount of data storage than you can with the CD's 16/44.1 PCM format. CDs are likely to be phased out over the next few years, and virtually all music will be online and in MP3 format.

The great thing about MP3 is that you can use your recordings on an iPod or similar MP3 player. This makes it even better than CD for use in your car, or if you use such players during activities or while traveling on airplanes, etc. Since these are compromised listening environments with lots of background noise and relatively poor speakers, there is really nothing to be lost by using MP3 in such situations.

So although I don't consider MP3 to be quite as good as linear PCM, as long as the MP3 sampling rate is 128 KHz or better, you will not actually hear any degradation of the sound unless you specialize in quiet classical music. In that case, you can move up to 192 KHz sampling.

Flash recorders and computers have built-in MP3 processors. So you can record in either linear PCM (usually limited to 16/44.1 and 16/48) or MP3 at the sampling rate you prefer (usually up to 256 KHz). You won't have this flexibility when using CD-R, although some hard disk recorders offer MP3 processing.

In summary, when I say a "properly produced" digital recording, I am referring to getting the recording levels right using a suitable, high-quality digital format. If the recording levels are too low, you will hear background noise. If they are too high, you will hear massive amounts of distortion. But if they are just right, you will hear nothing but the music. The recording will then be indistinguishable from the source, and you will have a subjectively perfect recording.

This means that you can record your LPs to a good digital medium and the playback from the digital medium will sound absolutely identical to the LP. That is why I say that no human can hear any difference between them.

Your second request to explain how I can prove that "No human can hear . . . " gets to the heart of audio, which is subjective testing techniques. This is a highly contentious issue that will require laying a careful foundation, explaining valid testing techniques in great detail, and providing logical proof at every step. Therefore, discussing testing will be a long article that I will have to attack over the next few days.
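The post does not say which test method it will describe. As one common assumption, a double-blind ABX test is scored by asking how likely a listener's score would be from pure guessing (a 50% chance per trial). A minimal sketch of that binomial calculation, with a hypothetical function name:

```python
from math import comb

def abx_p_value(correct: int, trials: int) -> float:
    """Probability of scoring at least `correct` out of `trials` by pure guessing (p = 0.5)."""
    return sum(comb(trials, k) for k in range(correct, trials + 1)) / 2 ** trials

# Example: 12 correct identifications out of 16 trials
print(round(abx_p_value(12, 16), 4))
```

A small value (12 of 16 gives about 0.038) suggests the listener really heard a difference. A large value only shows that this listener, on this material, did not demonstrate one.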

So stay in touch. I promise you a fascinating read soon . . .

-Roger

Roger, your explanations are wonderful. They paint a very nice end-to-end picture of the topic at hand. So I hope you take this as a small criticism. Your explanations at the extremes are somewhat inaccurate. For example, the number stated for compressed audio is the data rate, not the sampling rate. The sampling rate of MP3 is 44.1 Khz at data rates >= 128 Kbps and doesn't change. The number mentioned is the number of bits per second of audio. Uncompressed CD audio is 1.4 Mbit/sec, so 128 Kbps is an 11 times lower data rate while maintaining the same sampling rate. Note that I said sampling rate, not frequency response. Standard MP3 codecs roll off the high frequencies above 16 Khz at all but the highest data rates in order to keep distortion under control, so looking at the sampling rate alone can be misleading. Here is a quick test someone ran: http://www.lincomatic.com/mp3/mp3quality.html
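The distinction between data rate and sampling rate can be checked with simple arithmetic: uncompressed CD audio runs at 16 bits × 44,100 samples/s × 2 channels = 1,411,200 bits/s, and an MP3's "128 Kbps" is its coded data rate, while its sampling rate stays at 44.1 kHz. The function name is illustrative.

```python
CD_BITRATE = 16 * 44_100 * 2   # bits/s: 16 bits x 44,100 samples/s x 2 channels = 1,411,200

def compression_ratio(mp3_kbps: float) -> float:
    """How many times smaller the coded stream is than uncompressed CD audio."""
    return CD_BITRATE / (mp3_kbps * 1000)

for kbps in (64, 128, 192, 320):
    print(f"{kbps:3d} kbit/s -> {compression_ratio(kbps):5.1f} : 1  (sampling rate still 44.1 kHz)")
```

At 128 kbit/s the ratio is about 11:1, in line with both the figure above and the "about 10 times more material" estimate earlier in the thread.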


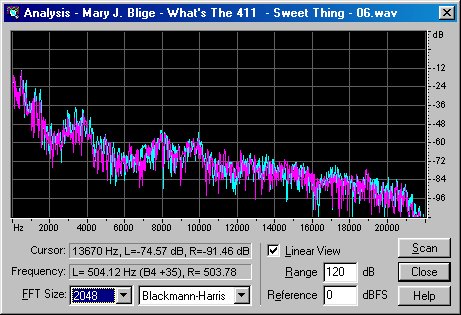

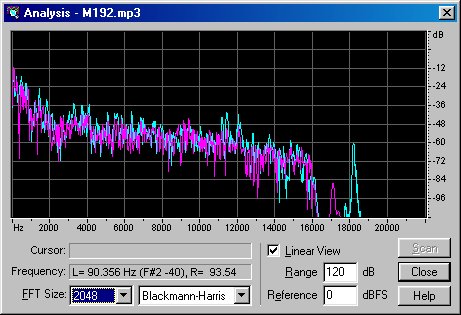

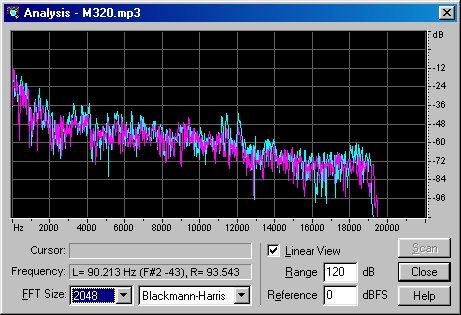

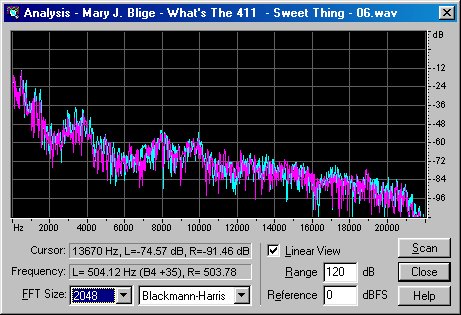

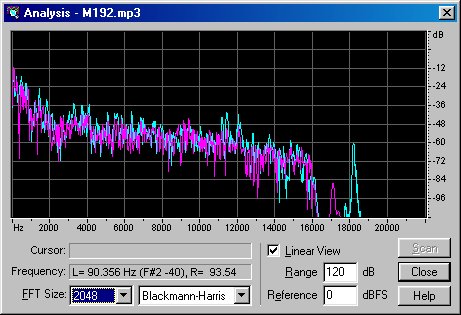

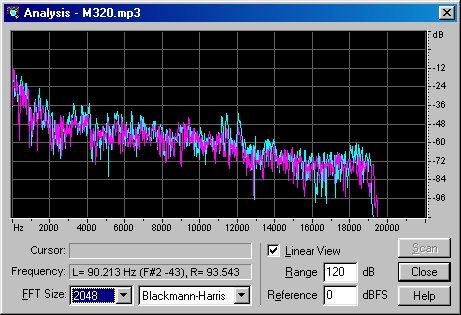

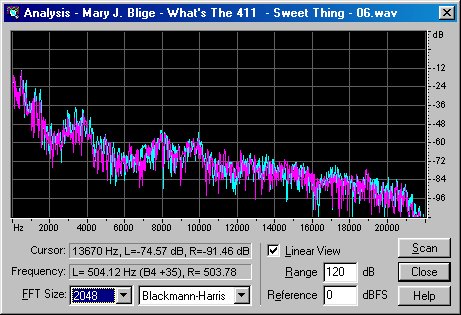

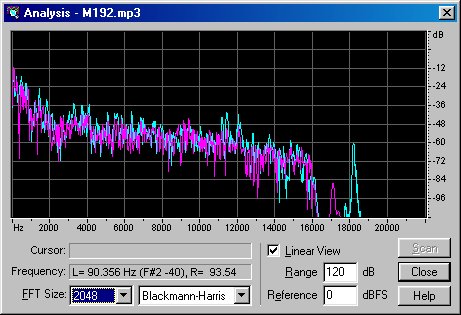

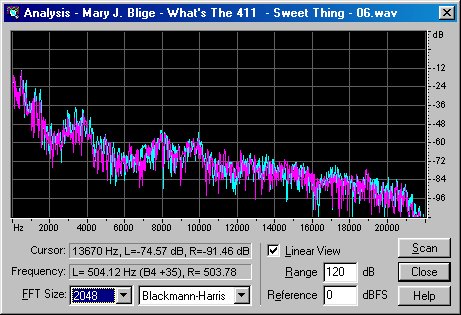

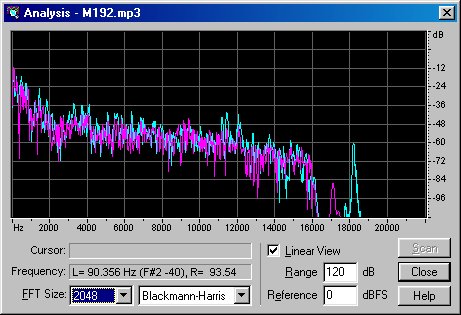

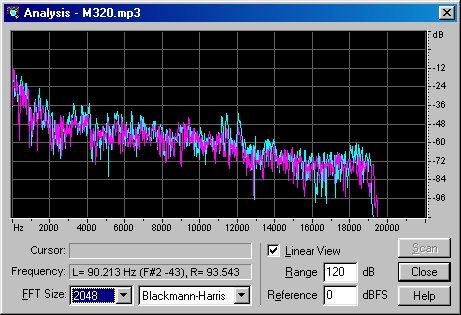

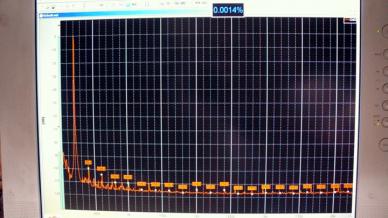

CD:

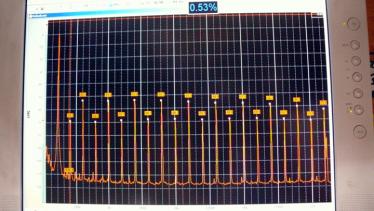

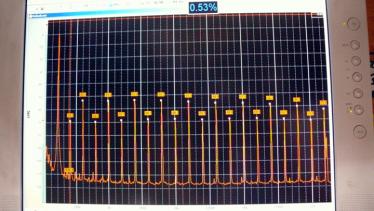

192 Kbps MP3:

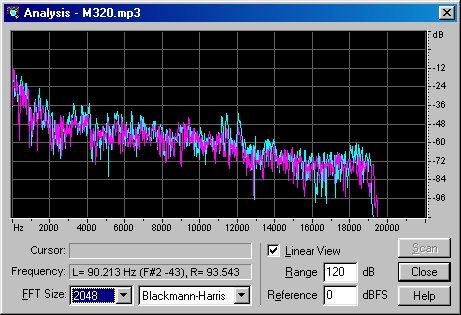

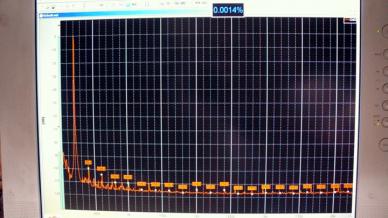

And even 320 Kbps:

MP3 was designed for backward compatibility with MPEG Layer 2 and to be implementable in really low-end hardware. As such, even putting aside the above filtering, it is not able to achieve transparency at any data rate, your anecdotal observations notwithstanding. To be sure, at 384 Kbps it can sound very good, but it still sounds different to a good number of audiophiles. AAC or WMA Pro, on the other hand, can achieve extremely good quality at those rates, perhaps fooling a substantial majority of listeners. Still, some people can tell the difference from uncompressed.


To answer this, let me tell you a story about the best recording of Respighi's "Pines of Rome" that I have ever heard. It was recorded in 1959 by Fritz Reiner and the Chicago Symphony by RCA "Red Seal."

The LP had superb dynamics, essentially full frequency range (30 Hz to 15 KHz), great "hall sound", and a high degree of realism. It was pure joy and very exciting to listen to, despite all the obvious faults that were epidemic in LPs of that era (surface noise, distortion, wow and flutter, poor S/N, and general instability).

When CDs became available years later, I couldn't wait for RCA to re-release that recording on CD so that I could eliminate all the faults heard on the LP. RCA finally did so in the late 80's, and I rushed to bring the CD home and play it.

Boy, was I disappointed. The CD had essentially no dynamic range, no bass, many of the instruments could barely be heard, and in general it sounded like I was listening through a telephone!

I was furious. I knew that digital recordings could (and should) be superb, since I was making them myself and knew this to be true. So I was determined to find out what was going on at RCA to ruin this recording.

After enduring considerable hassles finding my way through the telephone maze at RCA, I finally got to those responsible for releasing the recording. After hearing my complaint, they explained what had happened this way:

The original master tape recording was NOT made in 2-channel stereo. It was made using a 16-track recorder and multiple microphones, in stereo, on each orchestral section (violins had 2 mics, trumpets had 2 mics, etc.). They also placed mics out in the concert hall to record the hall sound.

They then mixed down the 16 track tape to get a 2-channel stereo recording that could be pressed to produce LPs. The recording engineer who did this work obviously really knew his stuff and did a great job of getting the right balance between the various orchestra sections, blending in the hall sound, and maintaining nearly full dynamic range and frequency response (particularly in the bass).

Although this was a mixdown, he kept it reasonably simple, and did not use compression, equalization, or artificial reverb. The performance was superb and his mix showed it off extremely well.

Twenty-five years later, when RCA wanted to re-release the performance on CD, they did not have the mixdown used for the LP. So they had a different engineer do another mix of the original 16-track tape for the CD. He totally butchered the job.

No matter how good the recording medium, if you put garbage in, you get garbage out. So the awful sound on the CD version of this recording was due to a horrible mix done by an incompetent sound engineer who had probably never been to a live symphony orchestra concert.

Roger:

I'm going to have to take exception to some of your statements about the Pines of Rome. I've collected, studied and been at RCA when they pulled out these original master tapes including the Pines of Rome from the tape vaults. I've also done interviews with the engineers who were there assisting Layton and Mohr for the recordings including Jack Pfeiffer, Anthony Salvatore and Max Wilcox.

I think your source is totally confused about the 16 tracks. It was 1959, and I don't think they had that capability. I think your source was confusing the number of mikes with the number of tracks. Other than the very early experimental stereo recordings like Zarathustra, these recordings weren't what we'd call purist, minimally miked recordings. Most RCAs of the day, as opposed to Mercury, used 7-9 mikes for their recordings (of course, Mercury stuck by their 3-mike setup). I saw the master tape of POR and it was a three-track tape, believe me. There's no way they would have mixed from 16 to 3 tracks.

Then let's go to the actual story of the release of Reiner's Pines of Rome, one of the most powerful pieces of music out there! (Maazel and Wilkinson don't do a bad job either on Decca.) There were many pressings of the Pines released. The only one that truly represents the tape was the 1S pressing. Yes, one can hear some tape saturation and distortion on peaks on this tape. But RCA quickly pulled this LP off the shelves because the public was complaining that their turntables (tts) of the day couldn't track the record (as opposed to Mercury and George Piros, who were known for their ability to put a dynamic cut down on the lacquer). Their tonearms literally jumped out of the grooves. So RCA went back to the studio, obviously cut four more lacquers before they could get it right (as evidenced by the missing 2-4S stampers), and released a 5S stamper. The 5S could play on the tts of the day, but RCA had compressed the dynamic range of the recording considerably. RCA then went through another 5 stampers, releasing a 10S that was markedly inferior to even the 5S and, if I remember correctly, cut at a much, much lower level (but I'd have to go back and compare them to confirm). So essentially every stamper after 10S was not even close to what was originally put down on the first 1S stamper. Now the Chesky for some reason was also compressed; I haven't heard the Classic Records reissue, but I wasn't a fan of their masterings. (Somewhere in storage I have the records of how many pressings were cut from the original POR and a few other RCA releases that Chesky did, as well as a pic or two of their mike setup.)

And the RCA 0.5 series? HP was being kind when he said they were 1/2 of what they should be.

Are the "still some people though" anecdotal as well?


No. We performed extensive blind listening tests at Microsoft while my group was developing the WMA and WMA Pro codecs, and some people, including present company, could tell the difference. The fraction was generally small though (1% to 5%).

Note that Roger did point out one key thing: content type matters. Some content can be nearly transparent even at 128 Kbps (with good codecs, not MP3). Other content sounds different at much higher data rates. In general, anything with transients is very difficult to encode. Roger gave one example: piano. A more common example is guitar strings. Even things like vocals or audience clapping in live concerts can be very difficult to encode. Put another way, if you have flexibility in what content you use, you can make any case you like about compressed music.

All of this said, we were quite successful in fooling audiophiles. Many could be put in the same bucket as the general public in their inability to hear compression artifacts. Their ears simply don't know what to listen for. So in that sense Roger is right.

Thanks, good info.

Amir, have you been keeping up to date with advancements in MP3 encoding since you left MS?

As a codec, MP3 is cast in concrete and cannot change. So in that sense, yes.

But I suspect you probably mean the myriad encoders out there. If so, I am aware of some of that work, including encoders that relax the frequency response (the spec doesn't mandate that at all).

MP3 uses some outdated techniques, such as a fixed window size and poorly done entropy coders, which limit its performance regardless of how hard one tries to do better with the encoder logic.

That said, I don't live and breathe MP3 anymore (I had to at Microsoft for competitive reasons). Just about every device in the world today supports more advanced codecs, whether AAC or WMA. Because of that, I don't see a reason for anyone to focus on it as a useful target. Unless, of course, you are the 100th signal processing grad student who thinks he can take the open source code and make it better without knowing everything he needs to know to do so.

Roger:

I'm going to have to take exception to some of your statements about the Pines of Rome. I've collected and studied these recordings, and I was at RCA when they pulled the original master tapes, including the Pines of Rome, out of the tape vaults. I've also interviewed the engineers who assisted Layton and Mohr on the recordings, including Jack Pfeiffer, Anthony Salvatore, and Max Wilcox.

I think your source is totally confused about the 16-track. It was 1959, and I don't think they had that capability. I think your source was confusing the number of mikes with the number of tracks. Other than the very early experimental stereo recordings like Zarathustra, these recordings weren't what we'd call purist, minimally miked recordings. Most RCAs, unlike the Mercurys of the day, used 7-9 mikes. (Of course, Mercury stuck with its three-mike setup.) I saw the master tape of POR, and it was a three-track tape, believe me. There's no way they would have mixed from 16 tracks down to 3.

Then let's go to the actual story of the release of Reiner's Pines of Rome, one of the most powerful pieces of music out there! (Maazel and Wilkinson don't do a bad job either on Decca.) There were many pressings of the Pines released. The only one that truly represents the tape was the 1S pressing. Yes, one can hear some tape saturation and distortion on peaks on this tape. But RCA quickly pulled this LP off the shelves because the public was complaining that the turntables of the day couldn't track the record (as opposed to Mercury and George Piros, who were known for their ability to put a dynamic cut down on the lacquer). Their tonearms literally jumped out of the grooves. So RCA went back to the studio, cut four more lacquers before they got it right (hence the missing 2S through 4S stampers), and released a 5S stamper. The 5S could play on the turntables of the day, but RCA had compressed the dynamic range of the recording considerably. RCA then went through another five stampers before releasing a 10S that was markedly inferior to even the 5S and, if I remember correctly, cut at a much, much lower level (but I'd have to go back and compare them to confirm). So essentially every stamper after the 10S was not even close to what was originally put down on the first 1S stamper. The Chesky, for some reason, was also compressed; I haven't heard the Classic Records reissue, but I wasn't a fan of their masterings. (Somewhere in storage I have records of how many pressings were cut from the original POR and a few other RCA releases that Chesky also did, as well as a picture or two of their mike setup.)

And the RCA 0.5 series? HP was being kind when he said they were 1/2 of what they should be.

Hi Myles,

Thanks for the fascinating information. No doubt your sources are more accurate than mine, as I only had a telephone call with which to work. And understandably, the representative at RCA to whom I spoke was not totally familiar with all the technical details from decades earlier, particularly when nobody who was involved in the work was available.

However, whether the master tapes were 3-track or 16-track doesn't change the fact that a mixdown was required to produce a 2-channel stereo recording. My source was certain that the mixdowns used for the LP pressings were not used on the CD. Therefore, the truly awful sound on the CD continues to be explained by the poor judgment of the "engineer" doing the mixdown rather than by any inherent flaws in the digital recording process.

Best,

-Roger

Roger, disagree or agree, you always present things in a common-sense manner that translates into real-world information the audiophile can act on. It appears you were discussing stereo imaging, or left-to-right balance. Could you consider how we would go about measuring front-to-back imaging and the spatial relationships between musical instruments and voices? This is often described as air around the instruments or voices.

gregadd

Hi Greg,

There is no way to directly measure front-to-back imaging information from speakers. That's because our brains process the information from the speakers into an image, and no machine can do that.

However, we can get some pretty good clues as to how a speaker will image by carefully evaluating its phase behavior. This should be done both with impulse measurements and by carefully examining its design.

Phase differences are the timing cues we hear in the sound. For example, at a concert we hear that the violins are closer to us than the brass because their sound arrives at our ears sooner than that of the brass. A speaker needs to be able to duplicate this.

To do so, the speaker must produce a uniform, coherent wave front that is physically vertical, with all frequencies launched by the drivers at the same time. Those frequencies then need to arrive at our ears without interference. This is nearly impossible for a speaker to do, for many reasons.

First, "cone and dome" speakers do not have planar surfaces. Just where is the wave formed on a cone or dome? Obviously, there is an average surface, but it is not well defined like it is on a truly planar speaker surface.

Keep in mind that the wavelength of a 10 kHz tone is only about 1.35 inches (34 mm). So a path difference of just two-thirds of an inch results in a phase error of fully 180 degrees. Physical accuracy of the moving surface is therefore quite important.
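Assuming a speed of sound of 343 m/s (air at about 20 °C), the wavelength and the phase error produced by a given path difference can be computed directly. This is a minimal sketch; the function names are illustrative, not from any library.

```python
# Assumed speed of sound in air at roughly 20 degrees C.
SPEED_OF_SOUND_M_S = 343.0
METERS_PER_INCH = 0.0254


def wavelength_inches(freq_hz: float) -> float:
    """Wavelength = speed of sound / frequency, expressed in inches."""
    return SPEED_OF_SOUND_M_S / freq_hz / METERS_PER_INCH


def phase_error_degrees(path_difference_inches: float, freq_hz: float) -> float:
    """Phase shift caused by an extra acoustic path length at one frequency."""
    return 360.0 * path_difference_inches / wavelength_inches(freq_hz)


if __name__ == "__main__":
    print(f"Wavelength at 10 kHz: {wavelength_inches(10_000):.2f} in")
    for offset in (0.25, 0.5, 0.675):
        err = phase_error_degrees(offset, 10_000)
        print(f"{offset:.3f} in path difference -> {err:.0f} degrees at 10 kHz")
```

Because the error scales with frequency, an offset that is harmless in the bass becomes severe in the top octaves.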

Secondly, the drivers in many speakers are wired out of phase. Many manufacturers do this deliberately because of a problem inherent in the 2nd-order Butterworth crossovers most of them use: with both drivers wired in phase, their outputs cancel at the crossover point and leave a deep notch. Reversing one driver fills the notch, although it leaves a 3 dB peak in its place. In other words, the phase is sacrificed to improve the frequency response.

Fortunately, some of the better speaker manufacturers have recognized this, and they use steeper slopes and Linkwitz-Riley filters, which do not have the summing problems of 2nd-order Butterworth filters. They can then wire their drivers in phase. Despite this, I often see inverted phase on some drivers in even very expensive speakers, showing that those drivers are still wired out of phase.
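Whether a 2nd-order Butterworth crossover sums to a notch or a peak depends on driver polarity, and a 4th-order Linkwitz-Riley crossover behaves differently again. The sketch below checks this numerically by evaluating ideal transfer functions with the crossover normalized to 1 rad/s. It assumes flat, perfectly time-aligned drivers, so it models only the electrical filters, not real drivers.

```python
import math

SQRT2 = math.sqrt(2.0)


def bw2_low(s: complex) -> complex:
    """2nd-order Butterworth low-pass, crossover normalized to 1 rad/s."""
    return 1.0 / (s * s + SQRT2 * s + 1.0)


def bw2_high(s: complex) -> complex:
    """2nd-order Butterworth high-pass at the same normalized crossover."""
    return (s * s) / (s * s + SQRT2 * s + 1.0)


def lr4_low(s: complex) -> complex:
    """4th-order Linkwitz-Riley low-pass: two cascaded 2nd-order Butterworths."""
    return bw2_low(s) ** 2


def lr4_high(s: complex) -> complex:
    """4th-order Linkwitz-Riley high-pass."""
    return bw2_high(s) ** 2


def summed_response_db(low, high, invert_high: bool, w: float = 1.0) -> float:
    """Magnitude (dB) of woofer + tweeter sum at normalized frequency w."""
    s = complex(0.0, w)
    polarity = -1.0 if invert_high else 1.0
    total = low(s) + polarity * high(s)
    # Floor the magnitude so an exact null gives a large negative number, not log(0).
    return 20.0 * math.log10(max(abs(total), 1e-20))


if __name__ == "__main__":
    for name, lo, hi in (("Butterworth 2nd", bw2_low, bw2_high),
                         ("Linkwitz-Riley 4th", lr4_low, lr4_high)):
        for inv in (False, True):
            db = summed_response_db(lo, hi, inv)
            label = "deep null" if db < -100 else f"{db:+.1f} dB"
            print(f"{name}, tweeter {'inverted' if inv else 'in phase'}: {label}")
```

In this idealized model the in-phase 4th-order Linkwitz-Riley pair sums to 0 dB at every frequency (an all-pass response), which is why drivers using it can stay in phase.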

Thirdly, the drivers must be "time-aligned." This means that the drivers must be positioned so that their wave fronts are aligned and the sound from all of them arrives at your ears simultaneously. This can be quite difficult to do from a cosmetic standpoint, and it complicates construction a great deal. So usually only expensive speakers are time-aligned.

Today, it is possible to time-align the drivers using digital delay. The signal to the earlier-arriving driver is delayed until the sound from the later-arriving driver reaches your listening position. Digital crossovers have this feature. This makes it possible to build a speaker with high-quality cosmetics and still achieve excellent time alignment.
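As a minimal sketch of the delay arithmetic, assuming a speed of sound of 343 m/s and a crossover running at a hypothetical 48 kHz sample rate, the delay for the nearer driver is the path-length difference divided by the speed of sound. The distances in the example are made up for illustration.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: air at about 20 degrees C


def alignment_delay(near_m: float, far_m: float, sample_rate_hz: int = 48_000):
    """Delay to add to the nearer driver so both wave fronts arrive together.

    Returns (delay in milliseconds, delay rounded to whole samples)."""
    if near_m > far_m:
        raise ValueError("near_m must not exceed far_m")
    delay_s = (far_m - near_m) / SPEED_OF_SOUND_M_S
    return delay_s * 1000.0, round(delay_s * sample_rate_hz)


if __name__ == "__main__":
    # Hypothetical driver-to-ear distances.
    ms, samples = alignment_delay(near_m=2.80, far_m=2.95)
    print(f"Delay the nearer driver by {ms:.3f} ms ({samples} samples at 48 kHz)")
```

Rounding to whole samples leaves a residual of up to half a sample (about 10 µs at 48 kHz); a crossover that supports fractional delays can do better.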

Fourthly, multiple drivers can only be time-aligned for a specific vertical position in front of them. For example, if a speaker is designed with a woofer at the bottom and a tweeter at the top, the drivers can only be aligned at one point, usually where your ears are when you are sitting. If you are standing, you will be farther from the woofer and closer to the tweeter, and they will no longer be properly aligned. So it is best if the drivers are very close together.
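The effect of standing up can be estimated with simple geometry. This sketch assumes both drivers sit in one vertical plane with the listener directly in front, a delay set for the seated ear height, and a speed of sound of 343 m/s; all heights and distances are hypothetical.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed


def arrival_gap_ms(ear_h, woofer_h, tweeter_h, distance_m):
    """Woofer arrival time minus tweeter arrival time, in ms, for a listener
    distance_m in front of the baffle with ears at height ear_h (meters)."""
    d_woofer = math.hypot(distance_m, ear_h - woofer_h)
    d_tweeter = math.hypot(distance_m, ear_h - tweeter_h)
    return (d_woofer - d_tweeter) / SPEED_OF_SOUND_M_S * 1000.0


def standing_error_ms(seated_ear, standing_ear, woofer_h, tweeter_h, distance_m):
    """Residual misalignment when standing, if the delay was set for the seated ear."""
    correction = arrival_gap_ms(seated_ear, woofer_h, tweeter_h, distance_m)
    return arrival_gap_ms(standing_ear, woofer_h, tweeter_h, distance_m) - correction


if __name__ == "__main__":
    for woofer_h in (0.3, 0.85):  # hypothetical woofer heights; tweeter at 1.0 m
        err = standing_error_ms(1.0, 1.7, woofer_h, 1.0, 3.0)
        print(f"woofer at {woofer_h} m: {err:.3f} ms residual error when standing")
```

Moving the woofer closer to the tweeter shrinks the residual error, consistent with the advice to keep drivers close together.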

Fifthly, shallow crossover slopes cause a lot of overlap between drivers. Since the drivers cannot be perfectly aligned, there will be some phase errors in the crossover region, where both drivers are trying to reproduce the same information. Very steep crossover slopes greatly reduce the overlap and therefore the phase anomalies.

Finally, there is the issue of dispersion. Speakers that disperse sound widely cause most of the sound you hear to be reflected off various surfaces in the room rather than coming directly from the speakers. The reflections off the room surfaces travel much longer distances than the direct sound from the speaker.

To add insult to injury, there are hundreds or thousands of reflections from the room. These reflections all have different path lengths, so their phases will differ. The result is that your ears will hear tremendously confused phasing from wide-dispersion speakers, even if the speakers themselves have perfect phasing, which is highly unlikely.
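The extra path lengths of the earliest reflections can be estimated with the image-source method: mirror the speaker in each room surface and measure the distance from the mirror image to the listener. This sketch assumes a rectangular room with flat surfaces and an omnidirectional source (it ignores directivity entirely), and computes only the six first-order reflections; higher orders multiply quickly. The room size and positions are hypothetical.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed


def first_order_reflections(room, source, listener):
    """Image-source method for a shoebox room with dimensions room=(Lx, Ly, Lz).

    Returns (surface, extra path in m, extra delay in ms) for each of the six
    surfaces, relative to the direct sound, sorted by delay."""
    direct = math.dist(source, listener)
    surfaces = (("left wall", "right wall"), ("front wall", "back wall"),
                ("floor", "ceiling"))
    results = []
    for axis, (low_name, high_name) in enumerate(surfaces):
        for name, plane in ((low_name, 0.0), (high_name, room[axis])):
            image = list(source)
            image[axis] = 2.0 * plane - source[axis]  # mirror the source in the surface
            extra = math.dist(image, listener) - direct
            results.append((name, extra, extra / SPEED_OF_SOUND_M_S * 1000.0))
    return sorted(results, key=lambda r: r[2])


if __name__ == "__main__":
    # Hypothetical 5 m x 7 m x 2.7 m room; coordinates in meters.
    for name, extra_m, extra_ms in first_order_reflections(
            room=(5.0, 7.0, 2.7), source=(1.2, 1.0, 1.0), listener=(2.5, 4.0, 1.0)):
        print(f"{name:>10}: +{extra_m:.2f} m, arrives {extra_ms:.2f} ms after the direct sound")
```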

As a result, most speakers have perfectly awful front-to-back imaging. Listeners often claim that the imaging from such-and-such a speaker is great, but that is only because they have never heard a speaker with truly accurate phasing.

I try to avoid hyping my speaker designs on the forum. But in this case, understanding my design choices will help explain the information I am trying to convey. So allow me to point out that I build my electrostatic speakers with a perfectly flat, one-piece, planar diaphragm that reproduces all frequencies from 172 Hz up through 32 kHz over its entire surface without crossovers. Therefore, it produces a perfectly true wave front across the entire audio bandwidth where phase matters (bass excluded).

Even though the phasing is perfect from this type of speaker design, I go to great lengths to make sure that the phase information from the woofer is accurate as well, even though bass phase is largely irrelevant to the imaging.

To do so, I mount the woofer high, as close to the electrostatic panel as possible. It is then driven by a digital crossover with the appropriate time delay to achieve exact time alignment.

You can even connect a microphone to the crossover and place it exactly at the height of your ears. The crossover will then automatically send test tones to the various drivers on both channels, measure the distance to each driver, and measure the time each driver's signal takes to reach the microphone. It then calculates the correct delay to apply to the earlier-arriving driver (the ESL) so that sound from both the ESL and the woofer arrives at your ears at precisely the same time.
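The crossover's actual measurement method isn't described here. One common way such an auto-setup routine could find each driver's arrival time is to play a known test signal and cross-correlate it with the microphone capture. The sketch below simulates that in pure Python; the noise-burst test signal, the simulated captures, and the sample positions are all hypothetical, and it ignores each driver's limited bandwidth.

```python
import random


def make_test_burst(n: int, seed: int = 0) -> list:
    """Hypothetical test signal: n samples of Gaussian white noise."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


def simulate_capture(burst, delay, length, gain=0.5, noise=0.05, seed=1):
    """Simulated microphone capture: a delayed, attenuated copy plus noise."""
    rng = random.Random(seed)
    out = [rng.gauss(0.0, noise) for _ in range(length)]
    for i, x in enumerate(burst):
        if i + delay < length:
            out[i + delay] += gain * x
    return out


def estimate_delay_samples(burst, capture, max_lag):
    """Lag (in samples) that maximizes the cross-correlation of burst and capture."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        score = sum(x * capture[i + lag]
                    for i, x in enumerate(burst) if i + lag < len(capture))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


if __name__ == "__main__":
    fs = 48_000
    burst = make_test_burst(256)
    esl = simulate_capture(burst, delay=400, length=1200, seed=1)
    woofer = simulate_capture(burst, delay=421, length=1200, seed=2)
    t_esl = estimate_delay_samples(burst, esl, max_lag=600)
    t_woofer = estimate_delay_samples(burst, woofer, max_lag=600)
    extra = t_woofer - t_esl
    print(f"Delay the ESL by {extra} samples ({extra / fs * 1000:.3f} ms)")
```

A real implementation would typically use an FFT-based correlation and a swept-sine or band-limited test signal suited to each driver; the brute-force loop here just keeps the idea visible.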

The crossover uses extremely steep 8th-order slopes (48 dB/octave), so there is very little overlap between the drivers. Also, since the overlap and phase error occur at a very low frequency (172 Hz), no phase error is produced in the critical midrange region.
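To put the 48 dB/octave figure in perspective, here is a sketch comparing how quickly the woofer's output falls above a 172 Hz crossover with a 4th-order and an 8th-order filter. It assumes Linkwitz-Riley alignments, which the text does not specify for this crossover.

```python
import math


def lr_stopband_db(ratio: float, order: int) -> float:
    """Response (dB) of an even-order Linkwitz-Riley section `ratio` times the
    crossover frequency into its stopband (above fc for the low-pass, below fc
    for the high-pass). An order-2n LR filter is two cascaded order-n
    Butterworths, so |H| = 1 / (1 + ratio**(2n))."""
    return -20.0 * math.log10(1.0 + ratio ** order)


if __name__ == "__main__":
    fc = 172.0  # crossover frequency from the text
    for octaves in (0.0, 0.5, 1.0, 2.0):
        ratio = 2.0 ** octaves
        print(f"{fc * ratio:6.0f} Hz: woofer at "
              f"{lr_stopband_db(ratio, 4):6.1f} dB (4th order), "
              f"{lr_stopband_db(ratio, 8):6.1f} dB (8th order)")
```

Both alignments are 6 dB down at the crossover itself; the difference is how fast the overlap disappears, roughly 48 dB versus 25 dB one octave away.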

The speaker is highly directional, so room acoustics from the midrange up are virtually eliminated. Any delayed reflections that occur from the dipole radiation are so attenuated and delayed that they do not affect the phase information at the listening location.

The result is that my speakers produce truly accurate phase, and the results are amazing images with a three-dimensionality, depth, and holographic quality that you simply must hear to believe. The image floats out in the room between the speakers without appearing to be associated with the speakers at all. There is no "hole in the middle," no matter how widely you space the speakers. The image is highly realistic, and no conventional speaker comes close to this type of performance.

In summary, there is no way to measure imaging directly. But if you closely study the phase behavior of the speaker and the way it interacts with the room, you can get a pretty good idea of the imaging you can expect from it.

In closing, let me also point out that the image from the speaker can only be as good as that captured on the recording. Regrettably, most recordings being made today are not done in a fine acoustic environment using stereo microphone techniques. They are done with a single mic in a sterile, nearly anechoic environment, and then artificial reverb is added during the mixdown. Such recordings cannot reproduce realistic imaging, although they can convey a pleasing sense of spaciousness.

As I've said before, garbage in gets you garbage out. So don't expect realism from modern recordings. But if you ever get the chance to come to Denver, plan on coming to my facilities to hear some of my stereo master tapes of symphony orchestra and opera, made with just two microphones. You'll really feel like you are in the concert hall!

-Roger


Yes, you're spot on there, Roger. How one mixes that middle track in makes all the difference in the quality of the sound. Too little and there's a hole in the middle; a little more and the mids are recessed; too much and the sound is just intolerably congested.
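A minimal sketch of the kind of three-track fold-down being described, assuming the simplest approach: the center track is added equally and in phase to both left and right at an adjustable gain. How RCA's engineers actually mixed these masters isn't documented here, and the -3 dB default is only an illustrative starting point.

```python
def fold_down_three_track(left, center, right, center_gain_db=-3.0):
    """Mix a three-track (L, C, R) master down to two-channel stereo.

    The center track is added, in phase, to both outputs at center_gain_db."""
    g = 10.0 ** (center_gain_db / 20.0)  # dB -> linear amplitude
    out_left = [lft + g * ctr for lft, ctr in zip(left, center)]
    out_right = [rgt + g * ctr for rgt, ctr in zip(right, center)]
    return out_left, out_right


if __name__ == "__main__":
    # A center-only signal shows how much of the middle track reaches each side.
    for gain_db in (-9.0, -3.0, 0.0):
        out_l, out_r = fold_down_three_track([0.0], [1.0], [0.0], gain_db)
        print(f"center gain {gain_db:+.0f} dB -> L {out_l[0]:.3f}, R {out_r[0]:.3f}")
```

The single `center_gain_db` parameter is the knob the post is describing: set it too low and the center thins out, too high and the middle of the image crowds up.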

Knowing the tape source is extremely important. That's why I won't buy an LP until I know, first, that it was mastered from the tape and not from an awful digital copy like the ones the labels want to send out nowadays. Then I want to know what tape they used; I don't want it cut from a safety or later-generation tape. That's why I think the Japanese pressings are usually overrated: they're re-EQ'd and cut from later-generation tapes. As my friend U47 here said to me one day, he'd rather have a first-generation 7½ ips copy than a later 15 ips copy.

Cheers!

Roger, your explanations are wonderful. They paint a very nice end-to-end picture of the topic at hand. So I hope you take this as a small criticism: at the extremes, your explanations are somewhat inaccurate. For example, when we state a number for compressed audio, it is the data rate, not the sampling rate. The sampling rate of MP3 is 44.1 kHz at data rates of 128 kbps and above, and it doesn't change. The number mentioned is the number of bits per second of audio. Uncompressed CD audio is about 1.4 Mbit/s. 128 kbps is an 11 times lower data rate while maintaining the same sampling rate. Note that I said sampling rate, not frequency response. Standard MP3 encoders roll off the high frequencies above 16 kHz at all but the highest data rates in order to keep distortion under control, so looking at the sampling rate alone can be misleading. Here is a quick test someone ran: http://www.lincomatic.com/mp3/mp3quality.html

(Comparison images from the linked test, not reproduced here: CD, 192 kbps MP3, and 320 kbps MP3.)

MP3 was designed for backward compatibility with MPEG Layer 2 and to be implementable in really low-end hardware. As such, even putting aside the above filtering, it is not able to achieve transparency at any data rate, your anecdotal observations notwithstanding. To be sure, at 384 kbps it can sound very good, but it still sounds different to a good number of audiophiles. AAC or WMA Pro, on the other hand, can achieve extremely good quality at those rates, perhaps fooling a substantial majority of listeners. Still, some people can tell the difference from uncompressed audio.
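The data-rate arithmetic in this post is easy to reproduce: CD-quality PCM is 44,100 samples/s × 16 bits × 2 channels. A small sketch (the function name is illustrative) that compares it with common MP3 data rates, all of which keep the same 44.1 kHz sampling rate:

```python
def pcm_bit_rate(sample_rate_hz: int, bits_per_sample: int, channels: int) -> int:
    """Bit rate of uncompressed linear PCM, in bits per second."""
    return sample_rate_hz * bits_per_sample * channels


if __name__ == "__main__":
    cd = pcm_bit_rate(44_100, 16, 2)
    print(f"CD audio: {cd:,} bit/s")
    for kbps in (128, 192, 320):
        print(f"{kbps} kbps MP3 carries {cd / (kbps * 1000):.1f}x less data than CD")
```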

Hi Amir,

Thank you for the additional information. I'm sure your readers appreciate it, as do I.

As I pointed out in my essay, I made no attempt to do a comprehensive analysis of the MP3 format due to limits on my time and the amount of writing involved. After all, a thorough study of MP3 would require a small book. So your additional information is most appreciated.

I just wanted to give readers a little taste of how the MP3 algorithm operates. I then focused on the only thing that users can do to control the quality of MP3s, and that is the bit rate. I wanted them to understand that it was essential to use high bit rates to get high-quality sound from MP3 recordings.

My studies and tests of MP3 were not anecdotal. They were carefully designed, controlled, and implemented scientific studies using double-blind ABX testing and panels of listeners using electrostatic speakers, so that the most subtle details could be heard. My tests were similar to, but much more rigorous than, the blind tests done by your online source, and the results were very similar.
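Roger doesn't give his scoring method, but ABX results are commonly judged with a binomial test: how likely is it that a listener who hears no difference, and so guesses with probability 1/2 on each trial, would score at least this well? A sketch using only the standard library:

```python
from math import comb


def abx_p_value(correct: int, trials: int) -> float:
    """One-sided probability of at least `correct` right answers out of
    `trials` ABX trials by pure guessing (chance = 0.5 per trial)."""
    if not 0 <= correct <= trials:
        raise ValueError("need 0 <= correct <= trials")
    hits = sum(comb(trials, k) for k in range(correct, trials + 1))
    return hits / 2 ** trials


if __name__ == "__main__":
    for correct, trials in ((6, 8), (12, 16), (9, 16)):
        print(f"{correct}/{trials} correct: p = {abx_p_value(correct, trials):.3f}")
```

The same 75% hit rate is much stronger evidence over 16 trials (p ≈ 0.038) than over 8 (p ≈ 0.145), which is why more trials make a test more reliable. The common 0.05 threshold is a convention, not a law.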

Specifically, at bit rates above 128 kbps, it is really hard to hear any difference between MP3 and linear PCM. This means that for commercial recordings, which typically are compressed, heavily processed, and contain a lot of transient material, nobody can hear any difference between them and a CD. Again, I agree with your source that lower MP3 bit rates introduce audible changes in the sound, so I didn't consider lower bit rates to be "high fidelity," and I discouraged listeners from recording at those low rates.

The object of my discussion was to give guidelines for those who might want to copy their precious LPs to a digital format for ease of use and protection of their LP collections. LPs have rather poor frequency response (narrower than that of MP3), very limited S/N, and high noise (all worse than MP3). High-rate MP3 is therefore suitable for recording them, since it is capable of capturing all the information they have to offer. So MP3 is one option to consider, particularly if the user wants to listen to the music in cars or on mobile devices, where MP3 is the standard, or if he wants to share files over the web.

I continue to prefer linear PCM when I want the best possible recording medium, as it offers the best performance. But it certainly has serious disadvantages compared to MP3. It requires a lot more data storage, it is awkward to use in portable devices, sharing music on CDs is more difficult than sharing MP3 files, and CDs are rapidly becoming obsolete.

Whether we like it or not (I don't), MP3 appears to be the music medium of choice in the future, as virtually all music will be internet, mobile phone, and computer based. Bandwidth is a critical issue in all this, and there simply will be no place for the rather wasteful use of data storage demanded by linear PCM recording. So we might as well get used to using MP3.

I do most of my listening nowadays off the internet (www.pandora.com and others). All this music is only available in MP3 format, which can be obtained at 192 kbps if you subscribe (lower rates if you don't). I am consistently impressed that I hear no problems with the quality of the sound in this format, something I can't say for analog recordings.

The good news in all this (such as it is) is that high-bit-rate MP3 actually does an excellent job of reproducing music. It isn't quite as good as linear PCM, but it is a lot better than analog. So if we are going to be stuck with an inferior recording medium (relative to linear PCM), it is nice to know that it is good enough that few (if any) listeners can hear the difference between it and the source. And any perceived differences are subtle at worst. So we need not despair over this turn of events.

In any case, it is nice for LP lovers to know that they have at least two good digital media on which they can record their LPs. For absolutely flawless recording, they can use linear PCM at 16/44.1 or better. They can also use high-bit-rate MP3 (at least 128 kbps; higher is better), which will produce essentially flawless copies of their LPs. The choice really comes down to how much money they are willing to spend on data storage and how much convenience they want.
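To put rough numbers on the storage trade-off, here is a sketch estimating storage per hour of music at a constant bit rate. It ignores file-format overhead and metadata, uses decimal megabytes, and treats MP3 as constant bit rate.

```python
def megabytes_per_hour(bit_rate_bps: float) -> float:
    """Storage for one hour at a constant bit rate, in decimal megabytes."""
    return bit_rate_bps * 3600 / 8 / 1_000_000


if __name__ == "__main__":
    formats = {
        "linear PCM 16/44.1 stereo": 44_100 * 16 * 2,
        "MP3 320 kbps": 320_000,
        "MP3 192 kbps": 192_000,
        "MP3 128 kbps": 128_000,
    }
    for name, rate in formats.items():
        print(f"{name:>26}: {megabytes_per_hour(rate):6.1f} MB per hour")
```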

The best choice is to do both. I recorded my LP collection as linear PCM at 16/48 and then made MP3 copies of some of it for use on portable devices. In hindsight, I made a mistake in using 16/48; I should have used 16/44.1 so that I didn't have to convert the sampling rate when I wanted to make CD copies. Lesson learned.
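The reason 16/48 needs real sample-rate conversion to reach CD's 44.1 kHz is that the two rates are related by an awkward ratio. A sketch that finds the smallest integer upsample/downsample factors a rational (polyphase-style) resampler would use; it computes only the ratio and does not resample any audio:

```python
from fractions import Fraction


def resampling_factors(src_hz: int, dst_hz: int) -> tuple:
    """Smallest integers (up, down) with dst_hz == src_hz * up / down."""
    ratio = Fraction(dst_hz, src_hz)
    return ratio.numerator, ratio.denominator


if __name__ == "__main__":
    for src, dst in ((48_000, 44_100), (88_200, 44_100), (96_000, 44_100)):
        up, down = resampling_factors(src, dst)
        print(f"{src} Hz -> {dst} Hz: upsample by {up}, downsample by {down}")
```

Going from 88.2 kHz to 44.1 kHz is a simple 2:1 reduction after low-pass filtering, while 48 kHz to 44.1 kHz requires interpolating by 147 and decimating by 160, with filtering in between.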

It is always a good idea to have a backup copy. So for truly treasured LPs, more than one digital copy should be made. Since it is easy to copy the files once they have been recorded in real time from the original LP, this is no problem. Note that one of the copies should be kept elsewhere so that if your home should burn, you won't lose everything.

It would be great if you or others on the forum would take the time to provide more detailed technical information on MP3. It is a big topic about which very little is known by audiophiles. Since it appears to be the medium of the future, we should all learn all we can about it. Again, thanks for your input.

-Roger

Steve Williams

Site Founder, Site Co-Owner, Administrator


I would second that suggestion.

Hopefully, Amir will sign up for that one with his experience at Microsoft.

In the meantime, here's some of Roger's writing on testing:

TESTING PART ONE (Part 2 is in the next post)

Wouldn't you like to know for sure if that new, ten-thousand-dollar amplifier you want to buy is really better than your old one? Do different brands of tubes sound different from one another? Do multi-thousand-dollar interconnects really sound better than ordinary ones? Do high-power solid-state amps truly sound bad when playing quietly? Does negative feedback make an amp sound worse than one without feedback? Does the class of amplifier operation affect the sound? Do MOSFET amplifiers sound different from those using bipolar transistors? Do cables really sound better when connected in a particular direction?

These are just some of the questions audiophiles want answered. And they need to be answered with certainty before an audiophile drops tens of thousands of dollars on expensive audio equipment.

But there is something very strange about high-end audio. Although it is a highly scientific engineering exercise, most audiophiles base their purchase decisions almost entirely on subjective listening tests, anecdotal information, and testimonials from self-proclaimed "experts" instead of on engineering measurements. Therefore, it is hard to know for a fact which components really have high sound quality.

Subjective listening tests can be useful and accurate. But if not done well, their results can be confusing and invalid. Worse yet, poor testing makes it possible for unsuspecting music lovers to be deceived and fail to get the performance they are seeking.

Unfortunately, there are many unscrupulous manufacturers and dealers who take advantage of this situation by making false claims based on "voodoo science" to sell inferior, grossly overpriced, or even worthless products to uninformed and confused audiophiles. I find it amazing that this state of affairs exists for such expensive products.

Audiophiles need to know, and deserve to know, the truth about the performance of the audio components they are considering. Only then can they make intelligent and informed decisions.

This requires accurate test information, which is not readily available. This information can be obtained through objective measurements with instruments and through valid listening tests. Unscrupulous businessmen in the audio industry have somehow managed to convince audiophiles that measurements cannot be trusted. So most audiophiles use listening tests to compare two similar items to evaluate which sounds better.

But most listening tests produce conflicting and unreliable results, as shown by all the controversy one hears about the merits of various components. After all, quality testing would clearly and unquestionably reveal the superior product, so there should be no confusion or disputes about it.

For example, the ability of a digital camera to produce detailed images is intrinsically linked to the number of megapixels in its CCD. If one camera has more megapixels than the other, there simply is no question of which one can provide the more detailed image. Therefore, you don't find videophiles arguing over this.

The same is true of the technical aspects of audio equipment, like frequency response, noise, and distortion. But because the results of most subjective audiophile testing are so variable and uncertain, different listeners come to different conclusions about what they hear. As a result, there is very little agreement about the quality of the performance of audio equipment.

Why is this so? We all hear in a similar way, so what is going on with subjective listening tests that is so confusing?

The purpose of this paper is to investigate testing and answer that question. Actually, the answer is simple, but requires great elaboration of the details to explain the problem and what must be done to correct it.

So let's eliminate the suspense and immediately answer the question of why the typical audiophile listening test produces vague and conflicting results. The answer is that most listening tests contain multiple, uncontrolled variables. Therefore, there is no way to know what is causing the differences in sound that are heard. Allow me to explain this in detail.

This issue of controlling the variables in a test lies at the heart of all testing. Audiophiles need to understand this and control the variables so that they can do accurate listening tests that produce reliable results.

What is a "variable?" A variable is any factor that can affect the result of a test.

An "uncontrolled" variable is the one variable in a test that is allowed to vary because we are trying to evaluate its effect. It is absolutely essential that any and all other variables in a test be "controlled" so that they do not influence the results.

If there is more than one uncontrolled variable in a test, you will not be able to determine which variable caused the results you heard. Therefore, having multiple uncontrolled variables makes it impossible to draw any cause/effect conclusions from the test. Since most tests are trying to find cause/effect relationships, a test done with multiple uncontrolled variables can't answer the question, so it is simply worthless and invalid.

Let me make this very clear by giving an example of how a typical audiophile listening test is performed and then analyze it for uncontrolled variables. Let's assume that an audiophile is considering buying a new amplifier that costs $10,000 and wants to know if the new amplifier is really better than his current one and worth the large price that is being asked for it. His testing will go something like this:

He may listen to his old amp briefly before listening to the new one, or he may not even bother and just assume he can remember its sound from long experience. He will then turn off his system, unplug the cables from his old amp, put the new amp in place, hook up all the cables, turn everything back on, then listen to the new amp for a while. He will then make a judgment regarding which amp sounded better.

He will usually go one step further and draw some sort of cause/effect relationship as to the CAUSE of why one amp sounded better. Typical examples of such cause/effect relationships might be that one amp had feedback while the other didn't, one was Class A while the other was Class D, one had tubes while the other had transistors, one had the latest boutique capacitors or resistors, while the other one didn't, etc. For the remainder of this article, I will refer to this type of test as "open loop" testing.

Now, what would happen if I were to intervene in the above test and change the loudspeakers at the same time that the audiophile switched amplifiers? I think we would all agree that changing the loudspeakers would add another variable and that this would make it impossible to determine the cause of the difference in sound that would be heard. We simply would have no way of knowing if the different sound that we heard was caused by the speakers or the amplifier (or both), because there are two uncontrolled variables in the test. Therefore, the test would be invalid, and the results could not be used to determine which amplifier sounds better.

Now, all rational audiophiles understand this concept of only having one uncontrolled variable in a test. They readily agree that you can only test one thing at a time. They make a sincere attempt to follow this process by only testing one component at a time in their listening tests.

But they unknowingly break the "one variable" rule in their listening tests. Let's look carefully at the above amplifier test and analyze it for uncontrolled variables.

When asked, the audiophile will honestly claim that his test had only one uncontrolled variable, the amplifier. But he would be mistaken. His test actually had five uncontrolled variables. Any one of them, or several in combination, could have caused the differences in sound he heard. He needs to control all the variables except the amplifier under test. So what are the other uncontrolled variables?

1) LEVEL DIFFERENCES. If one amplifier plays louder than the other, it will sound better. Louder music sounds better. That is why we like to listen to our music loudly. Up to a certain limit, louder sounds better to us.

The gain and power of amplifiers vary. Therefore, for a specific volume control setting on the preamp used in the test, different amplifiers will play at slightly different loudness levels.

But the audiophile in the example probably didn't even attempt to set the preamp level at exactly the same level. He probably just turned up the level to where it sounded good. He made no attempt to match the levels at all because he was unaware that this was an uncontrolled variable.

Human hearing is extremely sensitive to loudness. Scientific tests show that we can accurately detect very tiny differences in loudness (as little as 1/4 dB). At the same time, we don't consciously recognize a difference in the level of music as a change in loudness until there are a couple of dB of difference. This is due to the transient and dynamic nature of music, which makes subtle level differences hard to recognize as such.

Therefore when music is just a little louder, we hear it as "better" rather than as "louder." It is essential that you recognize that two identical components can sound different simply by having one play a little louder than the other. The louder one will sound better to us.

This is a serious problem in listening tests. Consider the amplifier test above and for purposes of demonstration, let's assume that both amplifiers sound exactly the same, but that the new one will play a bit louder because it has slightly more gain. This means that the new amp will sound better than the old one in an open loop test even though the two actually sound identical.

Hearing this, the audiophile will then conclude that the new amp is better and will spend $10,000 to buy it. But in fact, the new amp doesn't intrinsically sound any better and it was the difference in loudness that caused the listener to perceive that it was better.

So the audiophile would have drawn a false conclusion about the amp. This erroneous conclusion cost him $10,000. I think you can see from this example that you absolutely, positively must not have more than one uncontrolled variable in your tests.
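One way to control this variable is to measure each amplifier's output voltage with the same test tone, load, and volume setting, and trim until they agree. A sketch of the arithmetic follows; the 0.1 dB matching tolerance is an assumed target chosen to sit well below the roughly 1/4 dB threshold mentioned above, and the voltage readings are hypothetical.

```python
import math


def level_difference_db(v_a: float, v_b: float) -> float:
    """Level difference in dB between two output voltages (20*log10 for voltage)."""
    return 20.0 * math.log10(v_a / v_b)


def is_level_matched(v_a: float, v_b: float, tolerance_db: float = 0.1) -> bool:
    """True if the two outputs agree within tolerance_db (assumed 0.1 dB)."""
    return abs(level_difference_db(v_a, v_b)) <= tolerance_db


def trim_db(v_a: float, v_b: float) -> float:
    """Gain change (dB) to apply to amplifier A so that it matches amplifier B."""
    return -level_difference_db(v_a, v_b)


if __name__ == "__main__":
    # Hypothetical readings taken with the same 1 kHz tone and preamp setting.
    old_amp, new_amp = 2.83, 2.90
    diff = level_difference_db(new_amp, old_amp)
    print(f"New amp is {diff:+.2f} dB louder; matched: {is_level_matched(new_amp, old_amp)}")
    print(f"Trim the new amp by {trim_db(new_amp, old_amp):+.2f} dB before listening")
```

In this example the new amp is only about 0.2 dB louder, too little to be heard as "louder" yet enough, by the argument above, to be heard as "better."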

2) TIME DELAY. Humans can only remember SUBTLE differences in sound for about two seconds. Oh sure, you can tell the difference between your mother's and your father's voices after many years. But those differences aren't subtle.

Most audiophiles are seeking differences like "air", "clarity", "imaging", "dynamics", "sweetness", "musicality", etc. that are elusive and rather hard to hear and define. They are not obvious. We cannot remember them for more than a few seconds. To be able to really hear subtle differences accurately and reliably requires that you be able to switch between components immediately.

Equally important, you should make many comparisons, as this will greatly improve the reliability of your testing. This is particularly important with music, because the type of music has a big influence on how sensitive you are to differences during testing. You really need to test with many types of music and make many comparisons.
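To put a number on why many comparisons matter, the standard binomial calculation below (not something from the original post) estimates how likely a given score would be if a listener were purely guessing. It assumes each comparison is independent and that a guess is right half the time; the scores are hypothetical.

```python
from math import comb


def p_value_by_guessing(correct: int, trials: int, p_guess: float = 0.5) -> float:
    """Probability of getting at least `correct` answers right out of
    `trials` by pure guessing (one-sided binomial tail)."""
    return sum(
        comb(trials, k) * p_guess**k * (1 - p_guess) ** (trials - k)
        for k in range(correct, trials + 1)
    )


# Same 80% hit rate, different numbers of comparisons (hypothetical scores)
for correct, trials in [(4, 5), (8, 10), (16, 20)]:
    print(f"{correct}/{trials} correct: p = {p_value_by_guessing(correct, trials):.4f}")
```

The same 80% hit rate is unconvincing over 5 comparisons (about a 19% chance by guessing) but quite convincing over 20 (well under 1%).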

Open loop testing only provides a single comparison, which is separated by a relatively long delay while components are changed. This makes it very difficult to determine with certainty if subtle differences in sound are truly present.

3) PSYCHOLOGICAL BIAS. Humans harbor biases. These prejudices influence what we hear. In other words, if you EXPECT one component to sound better than another -- it will.

It doesn't matter what causes your bias. The audiophile in the previous test had a bias towards the new amp, which is why he brought it home for testing. He expected it to sound better than his old amp.

That bias may have been because he expects tubes to sound better (or worse) than transistors, or because the amp had (or didn't have) feedback, was more expensive than his old amp, looked better, got a great review, or used a particular class of operation, etc. Bias is bias regardless of the cause and it will affect an audiophile's perception of performance. It must be eliminated from the test.

Don't think you are immune from the effects of bias. Even if you try hard to be fair and open-minded in a test, you simply can't will your biases away. You are human. You have biases. Accept that fact and design your testing to eliminate them.

4) CLIPPING. It doesn't matter what features an amplifier has -- if it is clipping, it is performing horribly and any potentially subtle improvements in sound due to a particular feature will be totally swamped by the massive distortion and general misbehavior of an amplifier when clipping. Therefore no test is valid if either amplifier is clipping.

If one amplifier in the above test was clipping, while the other wasn't, then of course the two will sound different from each other. But you are not supposed to be testing a clipping amp and comparing it to one that isn't clipping.

Most audiophiles simply don't recognize when their amps are clipping. This is because clipping usually occurs only on musical peaks, where it is very brief. Most listeners don't recognize transient clipping as clipping because the average levels last much longer than the peaks. Since the average levels aren't obviously distorted, listeners think the amp is performing within its design parameters -- even when it is not.

Peak clipping really messes up the performance of the amplifier as its power supply voltages and circuits take several milliseconds to recover from clipping. During that time, the amp is operating far outside its design parameters, has massive distortion, and it will not sound good, even though it doesn't sound grossly distorted to the listener.

Instead of distortion, the listener will describe a clipping amp as sounding "dull" or "lifeless" (due to compressed dynamics), "muddy" (due to high transient distortion and compressed dynamics), "congested", "harsh", "strained", etc. In other words, the listener will recognize that the amp doesn't sound good, but he won't recognize clipping as the cause. Instead, he will likely assume that the differences he hears are due to some minor feature (feedback, capacitors, type of tubes, bias level, class of operation, etc.) rather than simply a lack of power.

But his opinion would be just that -- an assumption that is totally unsupported and unproven by any valid evidence. Most likely his guess would not be the actual cause of the problem. Because different audiophiles will make different assumptions about the causes of the differences they hear, it is easy to see why there is so much confusion and inaccuracy about the performance of components when open loop testing is used.

It is easy to show that most speaker systems require about 500 watts to play musical peaks cleanly. Most audiophiles use amps of around 100 watts. Therefore audiophiles are comparing clipping amps most of the time. This variable must be eliminated if you want to compare amplifiers operating as their designers intended.
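The dB-to-watts arithmetic behind claims like this is easy to check: every 10 dB that the peaks rise above the average level requires ten times the power. The sketch below assumes a hypothetical 10 W average level and a hypothetical 17 dB peak-to-average ratio for the music; these inputs are illustrative, not measurements from the post.

```python
import math


def peak_power_needed(average_watts: float, peak_to_average_db: float) -> float:
    """Power needed to reproduce peaks that sit `peak_to_average_db`
    above the average power delivered to the speaker."""
    return average_watts * 10 ** (peak_to_average_db / 10)


def headroom_db(amp_watts: float, average_watts: float) -> float:
    """How many dB above the average level an amp can go before clipping."""
    return 10 * math.log10(amp_watts / average_watts)


avg = 10.0    # assumed average power at a loud listening level, watts
crest = 17.0  # assumed peak-to-average ratio of the music, dB
print(f"Peaks need about {peak_power_needed(avg, crest):.0f} W")
print(f"A 100 W amp has only {headroom_db(100, avg):.1f} dB of headroom")
```

With these assumed inputs the peaks need roughly 500 W, while a 100 W amp runs out of headroom 10 dB above the average level, so the loudest peaks would clip.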

5) The last uncontrolled variable is the amplifier. This is the one variable that we want to test. So we do not need to control it.

The above information should make it clear why open loop testing is fraught with error and confusion. It is easy to see how open loop testing can trick us, particularly when there is a significant time delay that allows our bias to strongly influence what we hear. All these uncontrolled variables make it impossible to draw valid conclusions from open loop testing, even when we are doing our best and are totally sincere in our attempt to test carefully.

But it doesn't have to be that way. It is possible to control all the variables so that subjective listening test results are accurate and useful. Here's how:

1) LEVEL DIFFERENCES are easily eliminated by matching the levels before starting the listening test. This is done by feeding a continuous sound (anything from a sine wave to white or pink noise) into the amps and measuring the outputs with an ordinary AC voltmeter. The input to the louder of the two amps must then be attenuated until the levels are matched as closely as possible (within 1/10 dB).
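Converting the two voltmeter readings into a dB difference takes one formula, 20 * log10(Va / Vb), because the readings are voltages measured the same way on both amps. The minimal Python sketch below assumes hypothetical readings of 2.83 V and 2.70 V; the function names are illustrative only.

```python
import math


def level_difference_db(v_a: float, v_b: float) -> float:
    """Level difference in dB between two AC voltmeter readings taken
    across the same load with the same test signal."""
    return 20 * math.log10(v_a / v_b)


def attenuation_needed(v_a: float, v_b: float) -> tuple:
    """Return which amp is louder and how many dB to attenuate its input."""
    diff = level_difference_db(v_a, v_b)
    return ("A", diff) if diff >= 0 else ("B", -diff)


def is_matched(v_a: float, v_b: float, tolerance_db: float = 0.1) -> bool:
    """True if the two levels agree within the 1/10 dB target."""
    return abs(level_difference_db(v_a, v_b)) <= tolerance_db


# Hypothetical readings, in volts RMS, from the two amplifier outputs
amp_a, amp_b = 2.83, 2.70
louder, atten_db = attenuation_needed(amp_a, amp_b)
print(f"Amp {louder} is louder; attenuate its input by {atten_db:.2f} dB")
print("Matched within 0.1 dB:", is_matched(amp_a, amp_b))
```

Here the 0.41 dB difference is well outside the 1/10 dB target, so amp A's input would be turned down and the measurement repeated until `is_matched` is true.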

Need a signal generator? You can buy a dedicated one for around $50 on eBay. Or you can download one for free as software for your laptop at this link:

http://www.dr-jordan-design.de/signalgen.htm

You can also use an FM tuner to generate white noise: turn off the muting and tune between stations to get interstation hiss. An old analog tape deck will generate white noise by playing a blank tape at a high output level.
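If no generator, tuner, or tape deck is handy, a white noise test file can also be made in software and played from any source. This is a hypothetical sketch using only Python's standard library; the function name, duration, sample rate, and level are assumptions, and it produces white noise only (pink noise needs additional filtering).

```python
import random
import struct
import wave


def write_white_noise(path: str, seconds: float = 10.0, rate: int = 44100,
                      level: float = 0.25, seed: int = 0) -> int:
    """Write mono 16-bit white noise to a WAV file; return frames written.

    `level` is the RMS as a fraction of full scale. It is kept well below
    1.0 so Gaussian peaks rarely reach full scale; any that do are clamped.
    """
    rng = random.Random(seed)
    n_frames = int(seconds * rate)
    full_scale = 32767
    frames = bytearray()
    for _ in range(n_frames):
        x = max(-1.0, min(1.0, rng.gauss(0.0, level)))  # clamp to full scale
        frames += struct.pack("<h", int(x * full_scale))  # 16-bit little-endian
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(rate)
        wav.writeframes(bytes(frames))
    return n_frames
```

For example, `write_white_noise("white_noise.wav", seconds=30)` writes a 30-second file. A modest level also helps keep the amps well away from clipping while the levels are being matched.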

2) TIME DELAY must be eliminated by using a switch to compare the amps instantly and repeatedly. This is done by placing a double pole, double throw switch or relay in a box that will switch the amplifier outputs. Attenuators can also be placed on the box so you can adjust levels on amplifiers that have no input level controls. Of course, the box will have both input and output connectors for the amplifiers so that they can simply be plugged into the box for testing. For testing line-level components, you will use the same technique, but use the switch to control RCA or XLR connectors.

Need a test box? You can make one or borrow mine. You can reach me at roger@sanderssoundsystems.com or by phone at 303 838 8130.

3) PSYCHOLOGICAL BIAS must be eliminated by doing the test "blind." This means that listeners must not know which component they are hearing during the test. This will force them to make judgments based solely on the sound they hear and prevent their biases from influencing the results.

Scientists are so concerned about biases that they do double-blind testing. This should be done during audio tests too.

Double blind audio testing means that the person plugging in the equipment (who will know which component is connected to which side of the test box) must not be involved in the listening tests. If he is present during the test, he may give clues to the listeners either deliberately or accidentally about which component is playing.

There is one more thing that must be done to assure that bias is eliminated. There must be an "X" condition during the tests. By this I mean that you can't simply do an "A-B" test where you switch back and forth between components. A straight "A-B" test will make it possible for listeners to cheat and claim they hear a difference each time the switch is thrown, even if there are no differences.

So you need to do an "ABX" test in which throwing the switch sometimes leaves the same component playing rather than switching to the other. Of course, if the component is not switched out, the sound will not change, so a listener cannot claim that there is a difference in sound every time the switch is thrown. Listeners are told before the test that it will be an ABX test, so sometimes there will be no difference and they must be careful to be sure they really hear one.
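For the person doing the switching, the "switch" and "stay" throws should be decided in advance and in an order the listeners can't predict. The sketch below is a hypothetical planning aid, assuming a 50/50 split between the two kinds of throw; it also expands the plan into a master sheet of which throws could produce a difference.

```python
import random


def make_trial_sequence(n_trials: int, p_switch: float = 0.5, seed=None) -> list:
    """Randomly plan each throw: 'switch' (A->B or B->A, so a difference is
    possible) or 'stay' (same component, so no difference is possible)."""
    rng = random.Random(seed)
    return ["switch" if rng.random() < p_switch else "stay" for _ in range(n_trials)]


def master_sheet(sequence: list, start: str = "A") -> list:
    """Expand the plan into (playing before, playing after) pairs."""
    current = start
    rows = []
    for action in sequence:
        nxt = current if action == "stay" else ("B" if current == "A" else "A")
        rows.append((current, nxt))
        current = nxt
    return rows
```

Rows where the two entries differ are the A-B comparisons; rows where they match are the X conditions in which no difference is possible.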

4) CLIPPING can be eliminated by connecting an oscilloscope to the amps and monitoring it during the test. A 'scope is very fast and can accurately follow the musical peaks -- something your voltmeter cannot do. Clipping is easy to see, as the trace will appear to run into a brick wall. If clipping is seen during initial testing, the listening level must be turned down until it no longer occurs. Then you may proceed with the test.
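A 'scope is the tool described here. If the amp output were instead captured with an audio interface (through a suitable attenuator), the same "brick wall" could be looked for in the samples as runs of consecutive values pinned at the amp's clipping voltage. This is a hypothetical sketch: the rail value must be measured for your amp, and the tolerance and minimum run length are assumed starting points.

```python
def find_clipped_runs(samples, rail, tolerance=0.005, min_run=3):
    """Return (start_index, length) for each run of at least `min_run`
    consecutive samples within `tolerance` (a fraction of `rail`) of the
    positive or negative rail: the flat top a scope shows when an amp clips.
    """
    threshold = rail * (1 - tolerance)
    runs = []
    start = None
    for i, s in enumerate(samples):
        if abs(s) >= threshold:
            if start is None:
                start = i  # a possible flat top begins here
        else:
            if start is not None and i - start >= min_run:
                runs.append((start, i - start))
            start = None
    # A run that lasts to the end of the capture still counts
    if start is not None and len(samples) - start >= min_run:
        runs.append((start, len(samples) - start))
    return runs
```

A signal that merely touches the rail for a few samples is treated as clipping, which errs on the safe side when the goal is to turn the level down until no clipping occurs.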

Need an oscilloscope? You can easily find a good used one on eBay for around $100. If you use a spectrum analyzer (to be discussed shortly), it probably will have an oscilloscope function built into it, so you don't need to buy a separate one.

The variables above apply to amplifiers. There usually are different variables involved for different components. You have to use some thoughtful logic to determine what variables are present and design your test to control them.

For example, preamplifiers and CD players don't clip in normal operation. So you don't need to bother with a 'scope. Cables, interconnects, power conditioners, and power cords don't have any gain. So you don't need to do any level matching. Just use a switch and do the test blind.

Also, consider your comparison references. In the case of an amplifier, you can only compare it to another amplifier because power and gain are required. But when testing a preamp, you don't have to compare it to another preamp. You can compare it to the most perfect reference possible -- a straight, short, piece of wire.

This usually takes the form of a short interconnect, or you can go one better and use a very short piece of wire soldered across the terminals of the test box switch. You then need only set the preamp to unity gain to match the wire and do your testing blind.

There are many variations of the ABX test. A rigorous, scientifically valid ABX test will be done with a panel of listeners to eliminate any hearing faults that might be present with a single listener, and it will always be done double-blind.

But you can cut corners a little bit and still have a valid test. For example, you can do the test single-blind with one listener. What this means, of course, is that you will do the listening test by yourself.

You must "blind" yourself. The best way to do this is to have someone else connect the cables to the equipment so you don't know which one is "A" and which one is "B." You can then set levels and proceed to listening tests.

When doing ABX testing with others, it is helpful to give them a little bit of training and let them practice. Tell them that they will only be asked if they can hear any DIFFERENCE between components. Obviously, if one component sounds "better" (or worse) than the other, it must also sound different.

You need not be concerned about making judgments on subjective quality factors initially. Just ask the listeners if they hear any differences of any type and if any exist, you can test that separately later.

Let them know that you will be including an "X" factor in testing where sometimes the switch will not actually change to the other component. Tell them this to eliminate guessing. Point out that the purpose of the test is not to trick them, but to assure accuracy.

Because many comparisons are made, I use a score sheet for listening groups. The sheet has a check box for "different" and "same" that they check after each comparison. I have a master sheet that shows where differences are possible (A-B test) and where they are not (A-X or B-X). I can then score their sheets quickly after the test. I find that listeners are very accurate and that there is usually complete agreement on what is heard.
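Scoring the sheets against the master sheet is simple bookkeeping. In this sketch (hypothetical names and format), each master entry records whether the switch really changed components, and each answer is what the listener checked. It also counts "false alarms": claiming a difference when none was possible, which is exactly what the X condition is designed to catch.

```python
def score_sheet(master: list, answers: list) -> dict:
    """Compare one listener's answers with the master sheet.

    master:  True where the switch really changed components (A-B),
             False where it did not (A-X or B-X).
    answers: 'different' or 'same', as checked by the listener.
    """
    if len(master) != len(answers):
        raise ValueError("score sheet and master sheet lengths differ")
    pairs = list(zip(master, answers))
    correct = sum((ans == "different") == changed for changed, ans in pairs)
    false_alarms = sum(ans == "different" and not changed for changed, ans in pairs)
    return {"correct": correct, "trials": len(master), "false_alarms": false_alarms}
```

A listener who ticks "different" on every comparison will collect a false alarm on every X condition, so claiming a difference each time the switch is thrown shows up immediately in the score.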

When testing by yourself, you don't use a score sheet and therefore can only use A-B testing. This type of testing isn't well controlled, but you can usually get a good idea of what to expect. If you need really reliable results, you should back up your personal testing with others using a full ABX test to be certain of the results.

When training a new group of listeners, I deliberately make a small error in setup (usually a level difference of 1 dB on one channel) and have them start listening. The ABX test is extremely revealing and much more sensitive than open loop testing. So even with such a tiny difference, unskilled listeners quickly become very good at detecting it. Once the listeners are confident in how the test operates, we move on to the actual testing.

During testing, you may use any source equipment and source material you and the listeners like. I let listeners take turns doing the switching. I encourage them to listen for as long as they wish and switch whenever and as often as they like while listening to any music they wish. I want them to be sure of what they hear.

Different types of source material make a big difference in how easy it is to hear differences. Generally, it is more difficult to hear differences in highly dynamic, transient music than slow, sustained music.

Actually, music isn't even the best material for hearing some types of differences. Steady-state white noise, pink noise, and MLS test tones are far more revealing of frequency response errors than is music. So I usually include some noise during a part of my testing.

You don't have to use "golden ear" listeners for an ABX test. I encourage the disinterested wives of audiophiles to join in the test. I find that they are just as good as or better than their audiophile husbands at hearing differences. They also find the test entertaining. Since I often have visitors at my factory with whom I do these tests, it is nice to keep their spouses occupied as well.

If you find differences, you can then explore their cause by being a bit more creative in controlling the variables. For example, let's say you want to know if negative feedback affects the sound. To do so, you will need to have one amplifier with feedback and one without that you can compare.