For those who just started reading up on Olympus, Olympus I/O, and XDMI, please note that all information in this thread has been summarized in a single PDF document that can be downloaded from the Taiko Website.

https://taikoaudio.com/taiko-2020/taiko-audio-downloads

The document is frequently updated.

Scroll down to the 'XDMI, Olympus Music Server, Olympus I/O' section and click 'XDMI, Olympus, Olympus I/O Product Introduction & FAQ' to download the latest version.

Good morning WBF!

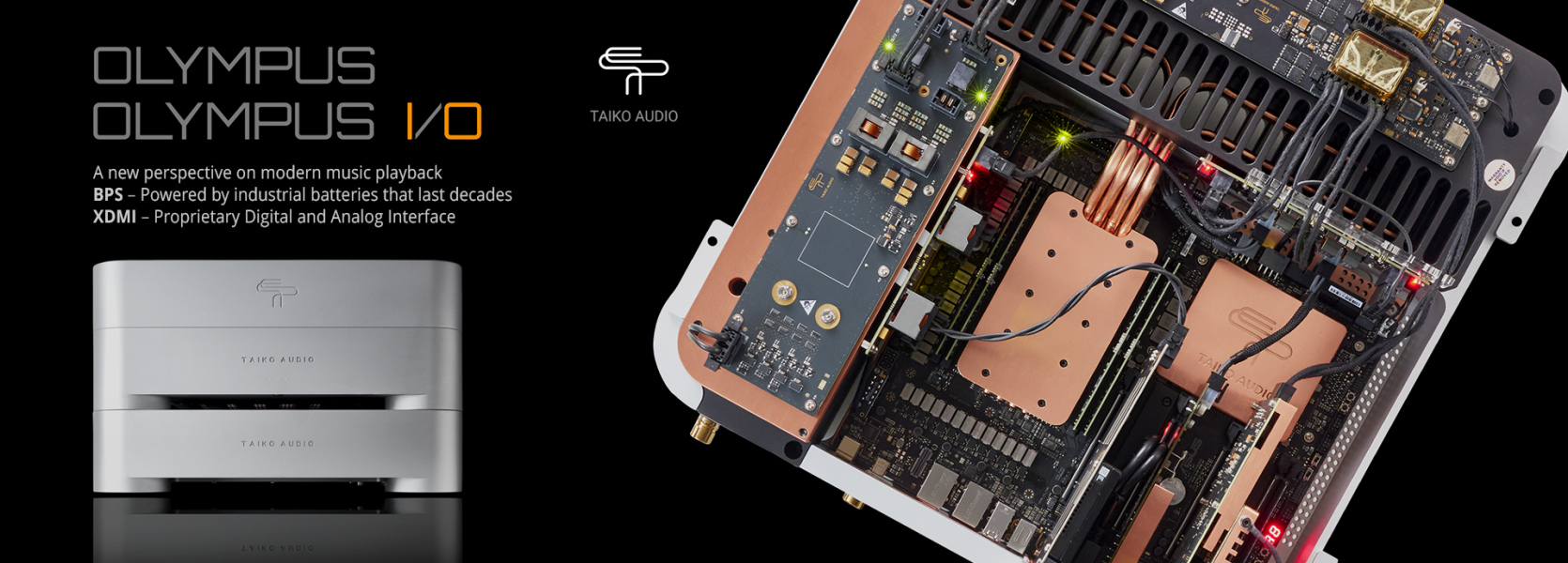

We are introducing the culmination of close to 4 years of research and development. As a bona fide IT/tech nerd with a passion for music, I have always been intrigued by the potential of leveraging the most modern technologies to create a better music playback experience. Among other things, this led to the creation of our popular, perhaps even revolutionary, Extreme music server 5 years ago, which we have steadily improved and updated with new technologies throughout its life cycle. Today I feel we can safely claim it is holding its ground against the onslaught of new server releases from other companies, and we are committed to improving it for years to come.

We are introducing a new server model called the Olympus. Hierarchically, it sits above the Extreme. It provides quite a different music experience than the Extreme, or any other server I've heard, for that matter. Conventional audiophile descriptions such as sound staging, dynamics, color palette, etc., fall short in describing this difference. It does not sound digital or analog; I would be inclined to describe it as coming closer to the intended (or unintended) performance of the recording engineer.

Committed to keeping the Extreme as current as possible, we are introducing a second product called the Olympus I/O. This is an external upgrade to the Extreme containing a significant part of the Olympus technology, bringing it close to, though not quite at, Olympus performance levels. The Olympus I/O can even be added to the Olympus itself to elevate its performance further, though the uplift is less dramatic than when it is added to the Extreme. Consider it the proverbial "cherry on top".
